On October 17, 2022, a new vulnerability was disclosed in the Apache Airflow Hive Provider: CVE-2022-41131. This security issue is caused by *Improper Neutralization of Special Elements used in an OS Command ('OS Command Injection')*. In plain terms, attackers can run arbitrary system commands in the context of any Airflow task that uses the Hive Provider – even if they don’t have permission to directly edit DAG files.

Let’s break it down so you can understand the risk, see how the exploit works, and learn how to fix it.

Where’s the Problem?

The bug affects the Apache Airflow Hive Provider for versions before 4.1.. It also impacts any version of Airflow before 2.3. whenever the Hive Provider is installed. (Starting from Airflow 2.3., you must *manually* install Hive Provider 4.1. or above to be safe.)

The issue lies in how arguments are sent to shell commands in Hive hooks or operators. User-controlled input—such as values passed to a template field—was not properly sanitized before being added to commands. This lets attackers inject extra commands if they can influence those input fields.

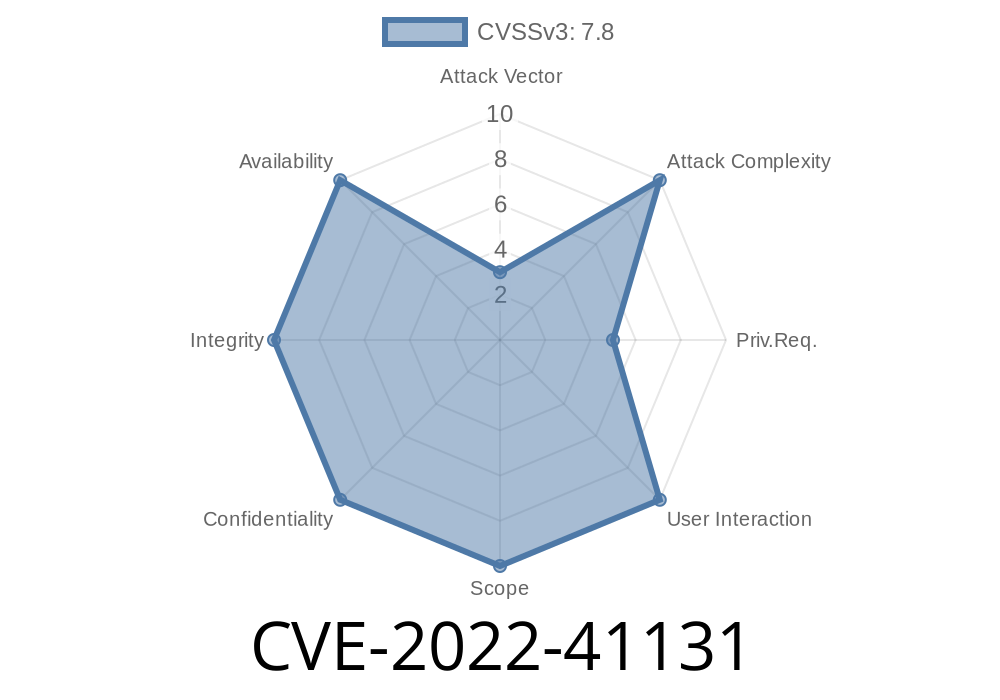

How Bad is It?

Pretty bad: an attacker can get a shell in the environment where Airflow tasks run. They do NOT need to upload or modify any DAG files! If they can trigger or control task parameters (e.g., via the Airflow UI or APIs, or via “params” in the webhooks), it’s game over.

It’s all about OS command injection. Here’s a simplified rundown:

1. A user starts a HiveOperator or HiveHook task where a template parameter ends up being sent to a shell command.

2. If the value includes special characters (;, &&, |, etc.), those get included in the constructed shell command line.

3. The shell executes both the intended command and the attacker’s injected command.

For instance, if a template field (like hql or partition) isn’t sanitized and you set it to something like:

REAL_PARTITION; cat /etc/passwd

the resulting shell command might secretly run both the normal Hive command and cat /etc/passwd.
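To make the role of the separator concrete, here is a minimal sketch that tokenizes a command line roughly the way a POSIX shell would and groups the tokens into separate commands. It never executes anything. The helper name `split_shell_commands` and the `--partition` flag are made up for illustration and are not taken from the Hive Provider.

```python
import shlex

# Operators a POSIX shell treats as boundaries between separate commands.
COMMAND_SEPARATORS = {";", "&&", "||", "|", "&"}


def split_shell_commands(command_line: str) -> list:
    """Group tokens into the separate commands a POSIX shell would see."""
    lexer = shlex.shlex(command_line, posix=True, punctuation_chars=True)
    lexer.whitespace_split = True
    commands = [[]]
    for token in lexer:
        if token in COMMAND_SEPARATORS:
            commands.append([])  # a separator starts a new command
        else:
            commands[-1].append(token)
    return [cmd for cmd in commands if cmd]


# Hypothetical command line; the flag name is illustrative only.
partition = "REAL_PARTITION; cat /etc/passwd"
for cmd in split_shell_commands(f"hive --partition {partition}"):
    print(cmd)
```

The single "partition" value yields two commands: the intended `hive` call and the injected `cat /etc/passwd`.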

Example: Proof of Concept

Let's look at a simplified (dangerous) code example of the kind of logic involved when a hook that shells out to the Hive command-line client (such as HiveCliHook) builds its command:

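# This is not the actual provider code; it only illustrates the risk.

import os

command = f"hive --hql {hql_param}"  # user-controlled value, interpolated unescaped

os.system(command)  # the whole string is handed to a shell for parsing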

If a user passes input like:

hql_param = "SELECT 1; cat /etc/passwd"

the system command becomes:

hive --hql SELECT 1; cat /etc/passwd

Now, the attacker can run ANY command on your Airflow worker.
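For contrast, here is a sketch of the two usual ways to keep a shell from interpreting user input: pass an argument list so no shell parses the text, or quote the value with `shlex.quote` when a single shell string is unavoidable. This shows the general pattern only and is not the change shipped in the patched provider. The helper names are hypothetical; `hive -e` is the Hive CLI option for running a query string.

```python
import shlex


def build_hive_argv(hql: str) -> list:
    """Build an argument list; no shell ever parses the query text."""
    # The query travels as one argv element, so ';' has no special meaning.
    return ["hive", "-e", hql]


def quote_for_shell(hql: str) -> str:
    """Fallback when a single shell string cannot be avoided."""
    return "hive -e " + shlex.quote(hql)


payload = "SELECT 1; cat /etc/passwd"
print(build_hive_argv(payload))
print(quote_for_shell(payload))
```

The list form would be handed to something like `subprocess.run(argv, check=True)` without `shell=True`. In both forms the payload reaches Hive as a single query string instead of becoming a second OS command.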

Upgrade is the only real answer.

- Upgrade your Hive Provider to at least 4.1. (see Hive Provider Release Page).

- Upgrade your Apache Airflow to at least 2.3., if possible.

- Even after upgrading Airflow, you must manually install Hive Provider 4.1. or higher. The two upgrades are separate steps.

Update commands

pip install 'apache-airflow-providers-hive>=4.1.'

pip install --upgrade 'apache-airflow>=2.3.'

Note: Airflow versions before 2.3. will not accept Hive Provider 4.1.+ due to dependency compatibility. If you cannot upgrade Airflow, you must not use untrusted inputs in Hive tasks.
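To confirm what is actually installed in a given environment, a small check along these lines may help. It is a sketch, not an official tool: it compares only the major and minor version numbers against the 4.1 line named above, and the function names are invented for this example.

```python
import re
from importlib import metadata

PACKAGE = "apache-airflow-providers-hive"
# Assumption: compare major.minor only, with 4.1 as the first fixed line.
MIN_FIXED = (4, 1)


def major_minor(version: str) -> tuple:
    """Parse the leading 'X.Y' of a version string into a tuple of ints."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    return int(match.group(1)), int(match.group(2))


def is_fixed(version: str) -> bool:
    """True if the version is on or after the first fixed release line."""
    return major_minor(version) >= MIN_FIXED


def installed_status() -> str:
    """Describe the provider version found in the current environment."""
    try:
        version = metadata.version(PACKAGE)
    except metadata.PackageNotFoundError:
        return "not installed"
    label = "fixed" if is_fixed(version) else "VULNERABLE"
    return f"{version} ({label})"


print(PACKAGE, installed_status())
```

Run it with the same Python interpreter that your Airflow workers use, since each environment can carry a different provider version.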

- Remove (or restrict) access to trigger Hive workflows or edit Hive-related parameters.

- Limit Airflow UI/API access to trusted admins only.
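If untrusted values cannot be kept out of Hive tasks entirely, an allow-list check before the value reaches the task can reduce exposure. The sketch below is a stopgap and does not replace upgrading. The pattern and the helper name `require_safe_partition` are assumptions for illustration and should be adapted to the values your pipelines really use.

```python
import re

# Hypothetical allow-list: letters, digits, and a few characters common in
# partition specs such as "ds=2022-10-17/region=eu". Anything else is rejected,
# including shell metacharacters like ';', '&', '|', '$', quotes, and spaces.
SAFE_PARTITION = re.compile(r"[A-Za-z0-9_=/.,-]+")


def require_safe_partition(value: str) -> str:
    """Return the value unchanged if it matches the allow-list, else raise."""
    if not SAFE_PARTITION.fullmatch(value):
        raise ValueError(f"rejected partition value: {value!r}")
    return value


print(require_safe_partition("ds=2022-10-17/region=eu"))
try:
    require_safe_partition("REAL_PARTITION; cat /etc/passwd")
except ValueError as err:
    print(err)
```

An allow-list is preferred over trying to strip dangerous characters, because it fails closed on anything unexpected.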

References

- Apache Airflow Security Advisories

- CVE-2022-41131 at MITRE

- Hive Provider Changelog

- Apache Airflow Announcement

- Exploit Example at huntr.dev

TL;DR

CVE-2022-41131 is a serious command injection bug in the Apache Airflow Hive Provider. Attackers can execute OS commands via task parameters with no DAG file access. Update your Hive Provider (>= 4.1.) and Airflow (>= 2.3.) ASAP. If you can't update, stay aware of what user data goes into your Hive jobs, and restrict access tightly.

Timeline

Published on: 11/22/2022 10:15:00 UTC

Last modified on: 11/28/2022 17:50:00 UTC