In 2022, a deadlock bug was discovered in the Linux kernel’s Ceph networked filesystem implementation; it is now tracked as CVE-2022-49296. This post breaks down how the bug happens, why it causes a deadlock, how it’s triggered, and what was patched to resolve it.

Background: Ceph Filesystem and Inline Data

The Ceph filesystem (CephFS) is a distributed filesystem used widely in cloud, HPC, and large-scale storage environments. It keeps metadata and data separately — metadata is managed by Metadata Servers (MDS), and data lives on Object Storage Daemons (OSDs). Ceph supports efficient small file operations using a feature called "inline data," which allows data to reside within metadata. This optimization, however, also adds complexity — and, as we’ll see, an unfortunate place for bugs.

Vulnerable Code Path and Scenario

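Let’s break it down step by step, using simplified language and real fragments from Ceph’s kernel client code. The scenario is reproducible when the filesystem is mounted with wsync, the option that makes the client perform namespace operations such as file creation synchronously, waiting for the MDS reply before the operation completes.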
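Because the scenario depends on the wsync mount option, it can be useful to check how a client has CephFS mounted. The following is a minimal sketch that parses text in /proc/mounts format and lists ceph mounts whose option field contains wsync. The helper name ceph_mounts_with_wsync is hypothetical, and the sketch assumes the kernel reports the option in the mount options field, which may differ between kernel versions.

```python
def ceph_mounts_with_wsync(mounts_text):
    """Return mount points of ceph filesystems whose options include 'wsync'.

    mounts_text is expected to be in /proc/mounts format:
    device mount_point fs_type options dump pass
    """
    found = []
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) < 4:
            continue  # skip blank or malformed lines
        _device, mount_point, fs_type, options = fields[:4]
        # Assumption: the option appears verbatim as "wsync" in the option list.
        if fs_type == "ceph" and "wsync" in options.split(","):
            found.append(mount_point)
    return found
```

On a live system the function can be fed the contents of /proc/mounts, for example by reading the file with open("/proc/mounts").read() and passing the result in.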

Step 1: File Creation

A process creates a new file with O_RDWR, and the create request is sent to the primary metadata server (mds.0). The following call chain is involved:

    ceph_atomic_open()
    -> ceph_mdsc_do_request(openc)
    -> finish_open(file, dentry, ceph_open)
    -> ceph_open()
    -> ceph_init_file()
    -> ceph_init_file_info()
    -> ceph_uninline_data()

Inside ceph_uninline_data(), the code checks the inline version and exits early when there is nothing to uninline:

    if (inline_version == 1 /* initial version, no data */ ||
        inline_version == CEPH_INLINE_NONE)
            goto out_unlock;

Here inline_version is 1, meaning the file was just created and holds no data, so the early exit is taken.

Bug: ci->i_inline_version is left at 1 instead of being set to CEPH_INLINE_NONE ("no inline data"). The stale value causes problems later.

Step 2: Immediate Write

A write is issued right after the file is created and takes the following path:

    ceph_write_iter()
    -> ceph_get_caps(file, need=Fw, want=Fb, ...)
    -> generic_perform_write()
       -> a_ops->write_begin()
          -> ceph_write_begin()
             -> netfs_write_begin()
                -> netfs_begin_read()
                   -> netfs_rreq_submit_slice()
                      -> netfs_read_from_server()
                         -> rreq->netfs_ops->issue_read()
                            -> ceph_netfs_issue_read()

Inside ceph_netfs_issue_read():

    if (ci->i_inline_version != CEPH_INLINE_NONE &&
        ceph_netfs_issue_op_inline(subreq))
            return;

Because i_inline_version is still 1, this check wrongly concludes that the file might have inline data, so the client sends a getattr(Fsr) request to mds.1 while still holding the Fwb caps it obtained in ceph_get_caps().

From there, events unfold as follows:

1. mds.1 requests the read lock (rdlock) on CInode::filelock from mds.0.
2. mds.0, as the authoritative MDS for the inode, wants to move CInode::filelock from the exclusive state to the sync state. To do that, it must first revoke the Fxwb caps from the client.
3. The client is waiting for the getattr(Fsr) reply, which never arrives because the MDS is waiting for the client to drop its caps. The client will not drop them because it is still waiting for the MDS reply.

This is a classic deadlock: both sides are waiting for each other to act, and nothing progresses.
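The circular wait described above can be written down as a small wait-for graph. The sketch below is only a model of the dependency chain, not Ceph code: the node names and the find_cycle helper are illustrative, and the authoritative MDS is written as mds.0 on the assumption that it is rank 0.

```python
def find_cycle(wait_for):
    """Follow 'X waits for Y' edges and return the first cycle found, or None.

    wait_for maps each party to the single party it is blocked on.
    """
    for start in wait_for:
        path = []
        node = start
        while node in wait_for and node not in path:
            path.append(node)
            node = wait_for[node]
        if node in path:
            # Close the loop so the cycle reads A -> B -> ... -> A.
            return path[path.index(node):] + [node]
    return None


# Who is blocked on whom in the scenario described in this post.
wait_for = {
    "client": "mds.1",  # waiting for the getattr(Fsr) reply
    "mds.1": "mds.0",   # waiting for the rdlock on CInode::filelock
    "mds.0": "client",  # waiting for the client to give up its Fxwb caps
}

cycle = find_cycle(wait_for)
print(" -> ".join(cycle))
```

Removing any one edge breaks the cycle. The fix discussed later removes the first edge: the client never sends the getattr, so it never blocks on mds.1.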

How to reproduce:

1. Mount CephFS with the wsync option.

2. Create a file and open it with read/write.

3. Write to the file immediately after opening it.

Example (Python)

    import os
    import time

    MOUNT_POINT = "/mnt/cephfs"  # CephFS mount point, mounted with wsync
    FILE = os.path.join(MOUNT_POINT, "deadlock_test.txt")

    # 1. Create and open a new file with read/write access
    fd = os.open(FILE, os.O_CREAT | os.O_RDWR, 0o644)
    try:
        # 2. Write immediately; on an affected kernel this call may never return
        os.write(fd, b"deadlock test")

        # 3. (Optional) Keep fd open to simulate a long write or concurrent reads/writes
        time.sleep(60)
    finally:
        os.close(fd)

Expected effect:

If the kernel is affected, this may hang (deadlock) until forcibly interrupted, blocking further I/O.
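Since a hang would otherwise block the shell running the test, one option is to run the create-and-write sequence in a child process and give up after a timeout. This is a minimal sketch under that assumption; write_with_timeout and CHILD_CODE are hypothetical names, and the timeout value is arbitrary. Killing the child is best effort only, because a task blocked inside the kernel may not exit.

```python
import os
import subprocess
import sys
import tempfile

# Code run in the child: create the file, write once, close.
CHILD_CODE = """
import os, sys
fd = os.open(sys.argv[1], os.O_CREAT | os.O_RDWR, 0o644)
os.write(fd, b"deadlock test")
os.close(fd)
"""


def write_with_timeout(path, timeout=10.0):
    """Run create+write in a child process.

    Returns 'completed', 'failed' (non-zero exit), or 'hung' (timed out).
    """
    proc = subprocess.Popen([sys.executable, "-c", CHILD_CODE, path])
    try:
        rc = proc.wait(timeout=timeout)
    except subprocess.TimeoutExpired:
        proc.kill()  # best effort; do not wait again, the child may be stuck
        return "hung"
    return "completed" if rc == 0 else "failed"


# Dry run against a local temporary directory (not CephFS) to show the call.
with tempfile.TemporaryDirectory() as tmp:
    print(write_with_timeout(os.path.join(tmp, "probe.txt")))
```

To probe a real mount, the same function would be pointed at a path under the CephFS mount point, for example /mnt/cephfs/deadlock_test.txt. A result of 'hung' is only a hint, since a slow cluster can also exceed the timeout.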

Patch and Remediation

The fix involves correctly updating the ci->i_inline_version so that, for a newly created file, the client *knows* there is no inline data and skips the unnecessary getattr:

Key Patch Snippet

    --- a/fs/ceph/inode.c
    +++ b/fs/ceph/inode.c
    @@ ... @@
    -   if (inline_version == 1 || /* initial version, no data */
    -       inline_version == CEPH_INLINE_NONE)
    -           goto out_unlock;
    +   if (inline_version == 1 || /* initial version, no data */
    +       inline_version == CEPH_INLINE_NONE) {
    +           ci->i_inline_version = CEPH_INLINE_NONE; // Correct the field!
    +           goto out_unlock;
    +   }

After patch:

A brand-new file will have its i_inline_version correctly set to "none," preventing the unnecessary and deadlock-causing request to the secondary MDS.
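The effect of the change can be illustrated with a toy model in Python. This is a sketch that mirrors the snippets shown in this post, not the kernel code itself: the InodeInfo class and both function names are hypothetical, and the value used for CEPH_INLINE_NONE (all bits set in a 64-bit integer) is stated as an assumption.

```python
CEPH_INLINE_NONE = 2**64 - 1  # assumption: sentinel meaning "no inline data"


class InodeInfo:
    """Stand-in for the per-inode state; only the field we care about."""

    def __init__(self, i_inline_version):
        self.i_inline_version = i_inline_version


def uninline_early_exit(ci, patched):
    """Model of the early-exit branch; returns True if the branch is taken."""
    if ci.i_inline_version in (1, CEPH_INLINE_NONE):
        if patched:
            # The patched branch records that there is no inline data.
            ci.i_inline_version = CEPH_INLINE_NONE
        return True
    return False


def read_path_fetches_inline(ci):
    """Model of the read-path check: anything but NONE triggers a fetch."""
    return ci.i_inline_version != CEPH_INLINE_NONE


for patched in (False, True):
    ci = InodeInfo(1)  # freshly created file
    uninline_early_exit(ci, patched)
    print("patched" if patched else "unpatched",
          "-> inline fetch attempted:", read_path_fetches_inline(ci))
```

In the unpatched model the stale version 1 makes the read path attempt an inline fetch, which corresponds to the getattr that starts the deadlock. In the patched model the fetch is skipped.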

References

- Official Ceph tracker entry: https://tracker.ceph.com/issues/55377

- Upstream Linux kernel patch discussions: LKML Thread

- General CephFS documentation: CephFS Official Docs

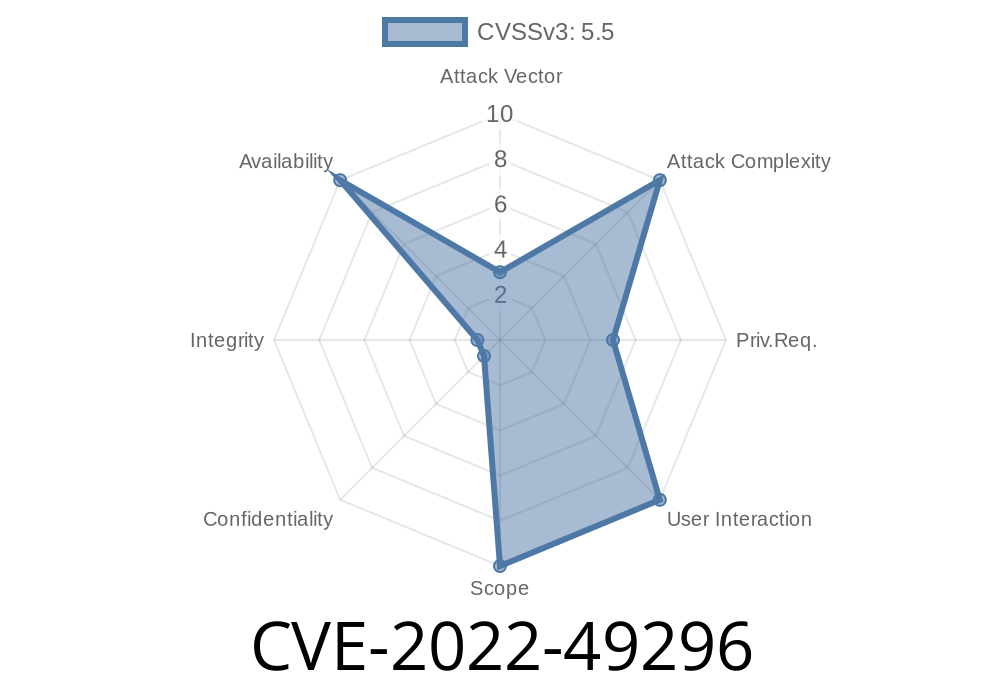

Exploitability & Impact

This bug could allow an unprivileged user who can create and write files on a CephFS mount to trigger a deadlock between the client and the MDS, resulting in denial of service. All I/O to the affected file(s) could hang, and the stall may spread to other operations that need the same locks or caps, which is a serious problem on enterprise or storage-heavy Linux servers.

Affected:

Linux kernels before the upstream fix, especially if using CephFS with wsync and high concurrency.

How to fix:

Upgrade to a kernel that contains the upstream fix.

Summary

CVE-2022-49296 demonstrates how subtle synchronization and state-handling bugs at the kernel filesystem layer can have severe, user-triggerable consequences.

If you run Linux with CephFS, make sure you’re running a kernel containing the fix to avoid potential deadlocks and hangs!

Timeline

Published on: 02/26/2025 07:01:06 UTC

Last modified on: 04/14/2025 20:08:21 UTC