In late 2023, a tricky bug was fixed in the Linux kernel’s IP over InfiniBand (IPoIB) driver. The bug could freeze a server (a “hard lockup”) while the driver was handling its multicast list. How did this happen, why is it dangerous, and what does the fix look like? In this post, we’ll break down CVE-2023-52587 in plain language, walk through the code, and explain the risk and the patch.

What is CVE-2023-52587?

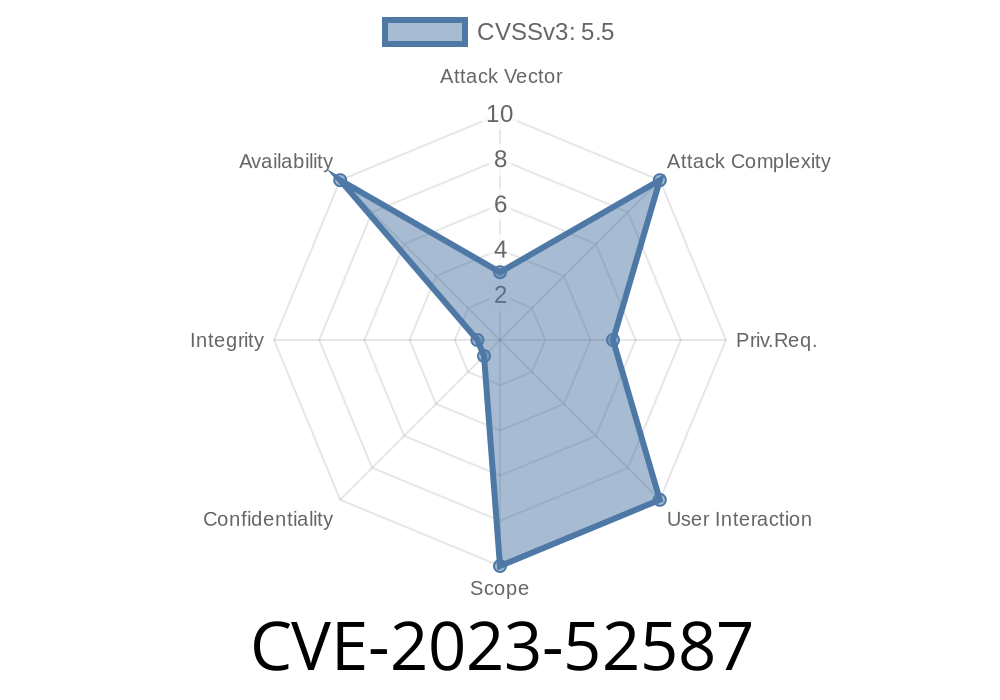

CVE-2023-52587 is a race condition in the multicast code of the Linux kernel’s IPoIB driver. Two kernel threads can work on the same list at the same time, so one of them can be left holding a pointer to an entry the other has already moved. The affected thread can then spin forever, completely freezing the system. The problem mainly affects servers (like HPC clusters) using InfiniBand networks.

Official References

- NVD CVE entry

- Kernel commit fixing the bug

- Red Hat bugzilla 2255492

In Simple Words: What Was Going On?

The kernel uses a “multicast list” to track membership in IB multicast groups per device. Two worker threads can operate on this same list:

- Task A: Joins multicast groups (ipoib_mcast_join_task)

- Task B: Flushes (removes) multicast groups (ipoib_mcast_dev_flush)

To avoid conflicts, the code uses a lock (priv->lock). But there’s a catch: Task A releases the lock before it finishes with each multicast entry, allowing Task B to sneak in and remove items in the middle of Task A’s loop.

1. Task A takes priv->lock and starts walking the multicast list.

2. For each multicast group, Task A drops the lock to perform the join operation.

3. While the lock is dropped, Task B grabs the lock and moves/removes multicast entries.

4. When Task A re-locks and continues walking, its current pointer might refer to an entry that is no longer in the list, or that now sits in a completely different list, so the loop never reaches its end.

This can result in a hard lockup: a server completely freezes until reboot.

Here’s simplified pseudo-code that highlights the flaw:

```c
// in ipoib_mcast_join_task()
spin_lock(&priv->lock);
list_for_each_entry(mcast, &priv->multicast_list, list) {
    spin_unlock(&priv->lock);
    ipoib_mcast_join(dev, mcast); // dangerous: lock dropped here!
    spin_lock(&priv->lock);
}
spin_unlock(&priv->lock);

// Meanwhile, on another thread:
spin_lock(&priv->lock);
// modifies &priv->multicast_list (removes 'mcast')
spin_unlock(&priv->lock);
```

Key problem: Task A thinks it’s safe to walk the list because it locked it, but it's unlocking during iteration. Another thread can change the list, leaving Task A's pointer in an invalid/surprising state.
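The stale-pointer effect can be replayed in user space. The sketch below is only an illustration, not driver code: it uses a minimal list modeled on the kernel's `list_head`, and the names `flush_all` and `walk_terminates` are hypothetical. It assumes the flush side moves entries onto a private list, and it replays the interleaving on a single thread with a step cap so the demo itself cannot hang.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

/* Minimal circular doubly linked list, modeled on the kernel's list_head. */
struct list_head {
    struct list_head *next, *prev;
};

static void list_init(struct list_head *head)
{
    head->next = head;
    head->prev = head;
}

static void list_add_tail(struct list_head *entry, struct list_head *head)
{
    entry->prev = head->prev;
    entry->next = head;
    head->prev->next = entry;
    head->prev = entry;
}

static void list_del(struct list_head *entry)
{
    entry->prev->next = entry->next;
    entry->next->prev = entry->prev;
}

/* Hypothetical stand-in for the flush side: move every entry to a private list. */
static void flush_all(struct list_head *src, struct list_head *remove_list)
{
    while (src->next != src) {
        struct list_head *entry = src->next;
        list_del(entry);
        list_add_tail(entry, remove_list);
    }
}

/*
 * The walker stops on the first entry (where the lock would be dropped).
 * If flush_mid_walk is set, the flush runs at that point. The walker then
 * resumes from its saved cursor. Returns true if the walk gets back to the
 * original list head within max_steps.
 */
bool walk_terminates(int nentries, bool flush_mid_walk, int max_steps)
{
    struct list_head head, remove_list, entries[8];
    struct list_head *cursor;
    int steps = 0;

    assert(nentries >= 1 && nentries <= 8);
    list_init(&head);
    list_init(&remove_list);
    for (int i = 0; i < nentries; i++)
        list_add_tail(&entries[i], &head);

    cursor = head.next;                  /* walker holds the first entry */
    if (flush_mid_walk)
        flush_all(&head, &remove_list);  /* runs while the lock is dropped */

    /* Resume the walk: advance until the original head shows up again. */
    while (cursor != &head && steps < max_steps) {
        cursor = cursor->next;
        steps++;
    }
    return cursor == &head;
}
```

Calling `walk_terminates(3, false, 1000)` finishes normally, while `walk_terminates(3, true, 1000)` runs into the step cap: the cursor keeps circling the private list and never meets the head it is looking for. Without the cap, that second walk would be the infinite loop described above.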

Exploit Details

This is a Denial-of-Service bug: it can’t be used to gain admin or shell access. However, a local user who is able to trigger IPoIB multicast joins and flushes may be able to lock up the system. A possible scenario:

1. Set up a system with IPoIB devices.

2. Rapidly create and tear down multicast memberships (e.g., using RDMA/InfiniBand tools).

3. Simultaneously force the driver to flush multicast groups, for example by toggling interfaces or removing large numbers of groups programmatically.

Result: It’s possible to trigger the infinite loop, “bricking” the kernel thread, which starves the entire system.

Here’s what an admin would see in a crash dump:

```
Task A (kworker/u72:2):
  [exception RIP: ipoib_mcast_join_task+0x1b1]
  RIP: ffffffffc0944ac1  RSP: ff646f199a8c7e00
  ...

Task B (kworker/u72:0):
  wait_for_completion+0x96
  ipoib_mcast_remove_list+0x56
  ipoib_mcast_dev_flush+0x1a7
```

Notice: Task A is looping in ipoib_mcast_join_task even though the multicast list is now empty. Its current pointer refers to an entry that is no longer on that list, so the loop never reaches the list head and never finishes.

The Fix: Hold the Lock Throughout

The fix is simple: hold the lock for the *entire* walk of the list so no other thread can modify the list while it's being iterated.

Old Code (Buggy)

```c
spin_lock(&priv->lock);
list_for_each_entry(mcast, &priv->multicast_list, list) {
    spin_unlock(&priv->lock);
    ipoib_mcast_join(dev, mcast);
    spin_lock(&priv->lock);
}
spin_unlock(&priv->lock);
```

Fixed Code

```c
spin_lock(&priv->lock);
list_for_each_entry(mcast, &priv->multicast_list, list) {
    ipoib_mcast_join(dev, mcast); // Don't drop the lock!
}
spin_unlock(&priv->lock);
```

- The lock is held for the whole loop.

- Additionally, the code uses GFP_ATOMIC when allocating (this avoids sleeping with the lock held).

See the fix: commit 03719e55 on kernel.org
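To see why holding the lock closes the window, here is a user-space sketch under stated assumptions. It is not the kernel patch: the toy lock built on C11 `atomic_flag` and the names `try_lock`, `try_flush` and `walk_with_lock_held` are hypothetical. The flush is attempted on the same thread at every step of the walk, and a try-lock stands in for the waiting a real spinlock would do.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>

/* Minimal circular doubly linked list, modeled on the kernel's list_head. */
struct list_head {
    struct list_head *next, *prev;
};

static void list_init(struct list_head *head)
{
    head->next = head;
    head->prev = head;
}

static void list_add_tail(struct list_head *entry, struct list_head *head)
{
    entry->prev = head->prev;
    entry->next = head;
    head->prev->next = entry;
    head->prev = entry;
}

static void list_del(struct list_head *entry)
{
    entry->prev->next = entry->next;
    entry->next->prev = entry->prev;
}

/* Toy lock (hypothetical helper): try_lock succeeds only if nobody holds it. */
static atomic_flag list_lock = ATOMIC_FLAG_INIT;

static bool try_lock(void)
{
    return !atomic_flag_test_and_set(&list_lock);
}

static void unlock(void)
{
    atomic_flag_clear(&list_lock);
}

/* Flush side: moves every entry to remove_list, but only under the lock. */
static bool try_flush(struct list_head *src, struct list_head *remove_list)
{
    if (!try_lock())
        return false;               /* a real spinlock would wait here */
    while (src->next != src) {
        struct list_head *entry = src->next;
        list_del(entry);
        list_add_tail(entry, remove_list);
    }
    unlock();
    return true;
}

/*
 * Walks the list with the lock held for the whole loop. A flush is attempted
 * at every step and must be refused. Returns the number of entries visited,
 * or -1 if the walk did not get back to the head within max_steps.
 */
int walk_with_lock_held(int nentries, int max_steps)
{
    struct list_head head, remove_list, entries[8];
    struct list_head *cursor;
    int visited = 0;
    bool locked, flushed;

    assert(nentries >= 0 && nentries <= 8);
    list_init(&head);
    list_init(&remove_list);
    for (int i = 0; i < nentries; i++)
        list_add_tail(&entries[i], &head);

    locked = try_lock();
    assert(locked);
    (void)locked;
    for (cursor = head.next; cursor != &head; cursor = cursor->next) {
        if (visited >= max_steps) {
            unlock();
            return -1;
        }
        flushed = try_flush(&head, &remove_list);
        assert(!flushed);           /* the list cannot change mid-walk */
        visited++;
    }
    unlock();

    /* Once the walker has released the lock, the flush goes through. */
    flushed = try_flush(&head, &remove_list);
    assert(flushed && head.next == &head);
    (void)flushed;
    return visited;
}
```

With three entries, `walk_with_lock_held(3, 1000)` visits all three and returns 3. Every flush attempt during the walk is refused, and the flush only succeeds after the walker has unlocked.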

Impact

- How bad is it? Any user on a vulnerable system who has access to the networking stack and can repeatedly join and leave multicast groups can lock up a production server.

- Who’s affected? Mainly HPC clusters, storage systems and supercomputers that use InfiniBand and the Linux ib_ipoib driver.

- Is this a security risk? YES, for DENIAL OF SERVICE (DoS). Not for privilege escalation or exposure of confidential data.

How to Tell If You’re Vulnerable

- Kernel versions before the patch (late 2023) are affected – particularly distributions shipping kernel 4.18 and above prior to this commit.

- Check your *kernel logs* and search for hangs or call traces in ipoib_mcast_join_task.

- If you operate IB clusters on RHEL or upstream kernels, make sure your system includes this fix or backport.
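The log check can be automated with a few lines of C. This is a minimal sketch, assuming the kernel messages were already saved to a text file; the function name `count_lines_containing` is hypothetical, and a match only suggests that a trace mentions the symbol, not that the system is vulnerable.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/*
 * Counts the lines read from fp that contain needle.
 * Lines longer than the buffer are read in pieces, so a very long line may be
 * counted more than once, and a match split across two pieces is missed.
 */
int count_lines_containing(FILE *fp, const char *needle)
{
    char line[1024];
    int hits = 0;

    assert(fp != NULL && needle != NULL);
    while (fgets(line, sizeof line, fp) != NULL) {
        if (strstr(line, needle) != NULL)
            hits++;
    }
    return hits;
}
```

For example, open a saved log with `fopen` (the file name is up to you) and call `count_lines_containing(fp, "ipoib_mcast_join_task")`. A non-zero result means the log deserves a closer look.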

## How To Fix / Mitigate

- Update your kernel: Make sure you run a version with this patch.

- Backport the commit: If you're running a custom or LTS kernel, cherry-pick commit 03719e559b4e86b58bb9c5e4e16272dd6598d9f2.

- Temporary mitigation: Limit untrusted users’ access to InfiniBand devices, and restrict multicast group operations.

Final Thoughts

CVE-2023-52587 is a perfect example of how a small oversight in kernel locking can freeze an entire system. While no data is stolen or privileges gained, a server crash can still be a major incident – especially in environments that depend on uptime.

Are you running InfiniBand with Linux? Double check your kernel now – and remember: never unlock too soon.

More Reading

- Upstream kernel fix

- Red Hat Bugzilla for this issue

- ipathverbs online documentation

Timeline

Published on: 03/06/2024 07:15:07 UTC

Last modified on: 02/14/2025 16:39:59 UTC