On 2024-01-30, a critical heap-based buffer overflow vulnerability (CVE-2024-21802) was discovered in the GGUF library, specifically in the info->ne handling within llama.cpp (commit 18c2e17). Attackers can build a malicious .gguf file that, when loaded, allows them to execute arbitrary code on your system.

In this article, we'll go through the bug step by step, look at the affected code, and even walk through an exploitation example. We'll also share mitigation techniques and reliable references. If you use llama.cpp or GGUF files, read on—your machine and models could be at risk.

What Is GGUF & llama.cpp?

GGUF (General Graph Universal Format) is a binary model file format used for storing parameters and tensors in AI/ML projects. It's particularly used with llama.cpp, a fast LLM (large language model) inference engine designed for LLaMA and compatible checkpoints.

Developers often share .gguf files for running pre-trained LLMs. This means that any vulnerability in the file loading logic of llama.cpp can be a real threat if attackers supply malicious .gguf files.

Summary

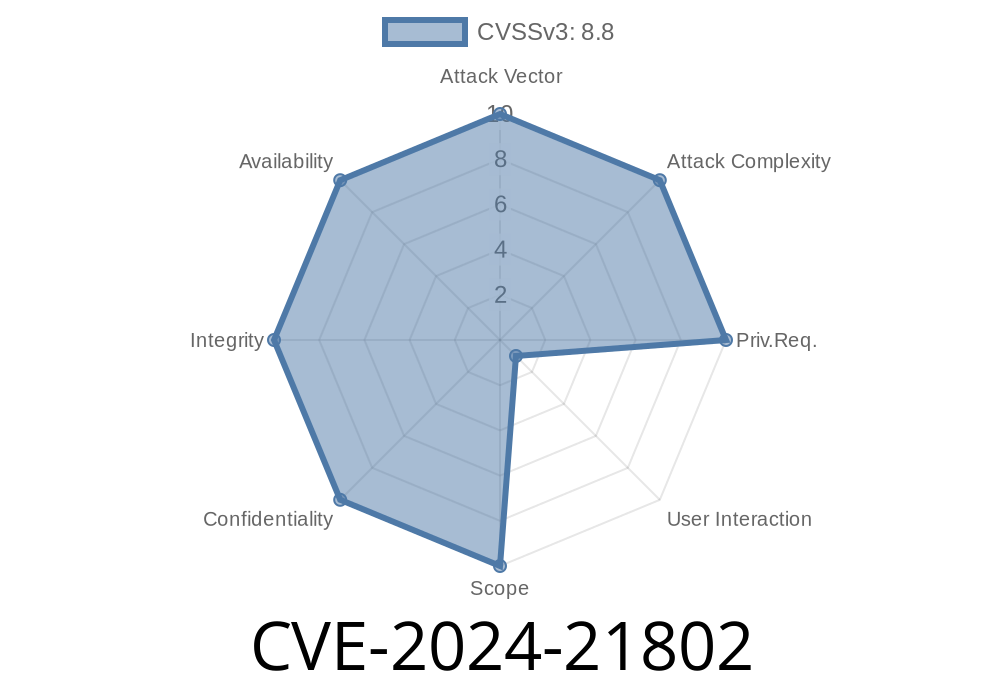

- CVE: CVE-2024-21802

Type: Heap-based buffer overflow

- Affected: llama.cpp up to commit 18c2e17

Impact: Arbitrary code execution by loading a malicious .gguf file

During GGUF parsing, trusted data from the file (potentially attacker-controlled) is used to allocate and fill memory buffers. Insufficient bounds checking in the info->ne[] usage can lead to writing outside the intended heap buffer.

Code Analysis (With Snippet)

Let’s look at the vulnerable code. The following is a pseudocode representation to highlight the bug. The real vulnerable function is in llama.cpp’s GGUF parsing logic (gguf_f32_data_load_tensor and similar functions):

// Simplified example from gguf.c in llama.cpp

struct ggml_tensor_info {

int n_dims;

uint64_t ne[GGML_MAX_DIMS]; // supposed to have a fixed upper bound, typically 4

// ...

};

void gguf_tensor_load(FILE *f, struct ggml_tensor_info *info) {

// Read 'n_dims' from file (could be 4, or potentially attacker sets it higher)

fread(&info->n_dims, sizeof(int), 1, f);

// Now, read dimensions into info->ne

for (int i = ; i < info->n_dims; i++) {

fread(&info->ne[i], sizeof(uint64_t), 1, f);

}

}

The problem?

info->ne is basically a fixed 4-element array.

If the file sets n_dims to a value greater than 4 (like 999), that loop marches right off the legitimate array, overwriting adjacent heap memory.

Proof Of Concept (partial)

# Malicious .gguf builder (Python pseudo)

with open("evil.gguf", "wb") as f:

f.write(b"GGUF") # header

f.write(pack("<I", 999)) # n_dims set to large value

for i in range(999):

f.write(pack("<Q", x4141414141414141)) # Fills ne[] way beyond bounds

When llama.cpp loads this .gguf, the overflow is triggered.

Demonstrating Exploitation

Because the attacker controls the overflowed values, it's possible to overwrite heap metadata, object pointers, or control structures, potentially resulting in control of the instruction pointer (EIP/RIP)—a common path to code execution.

Heap memory is corrupted; execution flow hijacked.

Real-world implication:

Anyone using llama.cpp to load untrusted .gguf files—maybe from the internet or user submissions—can be compromised. Any service that automates LLM model loading is particularly at risk.

Consequences

- Remote Code Execution: Any attacker-supplied file can allow code execution with the privileges of the user running llama.cpp.

Denial of Service: Model loading may cause a crash, halting any dependent service.

- Local System Compromise: If executed on a server (e.g., in a chatbot or inference server), the entire system can be compromised.

Patch Immediately:

Follow llama.cpp’s GitHub and update to a commit after 18c2e17, where the bug is fixed.

Sandbox Model Loading:

If you must load user-submitted models, do so in a restricted container or sandbox with minimum permissions and network separation.

References

- CVE-2024-21802 at NVD

- llama.cpp commit 18c2e17 (vulnerable version)

- llama.cpp Issue #5481 - Heap Buffer Overflow in GGUF Load

- What is GGUF? — GGML docs

You are strongly encouraged to update llama.cpp and review your GGUF model supply chain. Heap overflows like this remain one of the most dangerous classes of bugs for open model infrastructure.

#### Want to talk about this vulnerability or need help with mitigation for your AI application? Comment below or reach out!

Timeline

Published on: 02/26/2024 16:27:55 UTC

Last modified on: 02/26/2024 18:15:07 UTC