On February 19, 2024, Apache disclosed CVE-2024-26308, a serious vulnerability in the popular Apache Commons Compress library. It is classified as "Allocation of Resources Without Limits or Throttling": an attacker can craft malicious archives that make applications using Commons Compress allocate excessive amounts of memory, potentially leading to denial of service (DoS).

In this post, I’ll break down what CVE-2024-26308 is, demonstrate how it works with code snippets, and explain how to fix and prevent this exploit in your Java projects.

What is Apache Commons Compress?

Apache Commons Compress is a Java library that lets developers read and write various archive and compression formats like ZIP, TAR, and 7z. It’s used in thousands of Java applications and frameworks, from servers to build tools.

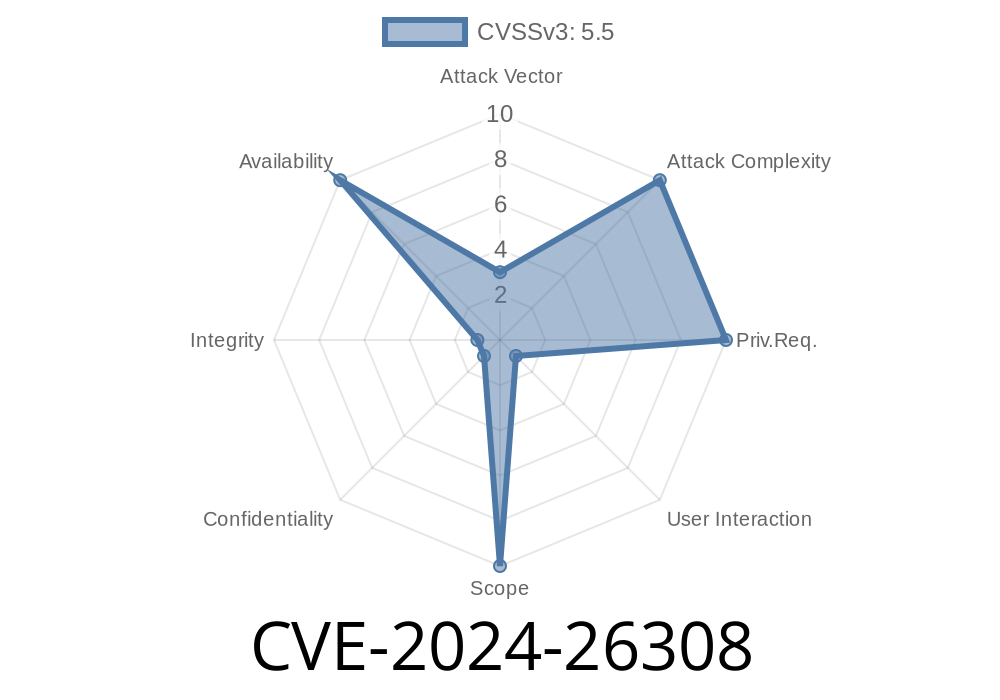

Understanding CVE-2024-26308

Affected versions: 1.21 up to (but not including) 1.26.0

Vulnerability:

The library allocates memory and resources for archive entries without limits or throttling. A specially crafted archive can trick Commons Compress into using too much memory or CPU, which can freeze or crash your Java app, or even the whole server.

Severity: High, due to the possibility of easy Denial of Service.

Exploit Details

When Commons Compress extracts archives (like TARs or ZIPs), it trusts the metadata describing file sizes and directory structures. Malicious archives can claim extremely large files or directories without actually containing that much data. Under the hood, the library tries to process these values as-is, which leads to massive allocations or endless loops, depending on the format.
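To make the metadata point concrete: in the TAR format, every entry starts with a 512-byte header, and the entry's size is stored in that header as 12 bytes of ASCII octal at offset 124 (the POSIX ustar layout). The sketch below uses only the JDK and is not how Commons Compress parses headers internally; it builds a header whose size field claims roughly 8 GiB and reads that claim back. `TarHeaderPeek`, `headerWithClaimedSize`, and `declaredSize` are hypothetical names for illustration, and the built header leaves the checksum and all other fields empty, so it is not a valid archive on its own.

```java
import java.nio.charset.StandardCharsets;

/**
 * Hypothetical helper: reads the "size" field of a raw 512-byte ustar header.
 * The field is 12 bytes at offset 124, written as ASCII octal digits.
 * Nothing in the header proves that this many bytes of data actually follow.
 */
final class TarHeaderPeek {
    static final int BLOCK = 512;
    static final int SIZE_OFFSET = 124;
    static final int SIZE_LENGTH = 12;

    /** Builds a header block with only the name and size fields set (illustration only; no checksum). */
    static byte[] headerWithClaimedSize(String name, String octalSize) {
        byte[] header = new byte[BLOCK];
        byte[] nameBytes = name.getBytes(StandardCharsets.US_ASCII);
        System.arraycopy(nameBytes, 0, header, 0, Math.min(nameBytes.length, 100));
        byte[] sizeBytes = octalSize.getBytes(StandardCharsets.US_ASCII);
        // Leave the last byte of the field as NUL, the usual terminator
        System.arraycopy(sizeBytes, 0, header, SIZE_OFFSET, Math.min(sizeBytes.length, SIZE_LENGTH - 1));
        return header;
    }

    /** Parses the declared size: optional leading spaces, then octal digits up to a NUL or space. */
    static long declaredSize(byte[] header) {
        int i = SIZE_OFFSET;
        int end = SIZE_OFFSET + SIZE_LENGTH;
        while (i < end && header[i] == ' ') {
            i++; // skip leading space padding used by some writers
        }
        long size = 0;
        for (; i < end; i++) {
            byte b = header[i];
            if (b == 0 || b == ' ') {
                break; // NUL or space terminates the field
            }
            if (b < '0' || b > '7') {
                // Also rejects the base-256 (binary) encoding some tar variants use for huge sizes
                throw new IllegalArgumentException("Not an octal digit: " + b);
            }
            size = size * 8 + (b - '0');
        }
        return size;
    }
}
```

Eleven octal sevens decode to 8,589,934,591 bytes, yet the header carrying that claim is only 512 bytes long. Any reader that sizes buffers or output from this number before checking how much data really follows is trusting attacker-controlled input.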

In practice, this means:

- A bad actor uploads or otherwise provides a ZIP or TAR file.

- The application processes it (for example, to unpack user uploads or generate file previews).

- The app tries to allocate an enormous amount of memory, or inodes, for files the archive claims to contain but which may not even exist.

Suppose you have a Java service that unpacks user-supplied tar files:

```java
import org.apache.commons.compress.archivers.tar.TarArchiveEntry;
import org.apache.commons.compress.archivers.tar.TarArchiveInputStream;

import java.io.*;

public class UnpackTar {

    public void unpack(File tarFile, File destDir) throws IOException {
        try (InputStream fi = new FileInputStream(tarFile);
             TarArchiveInputStream tis = new TarArchiveInputStream(fi)) {
            // NO limits or throttling here!
            TarArchiveEntry entry;
            while ((entry = tis.getNextTarEntry()) != null) {
                // Entry names and sizes are taken straight from the archive headers
                File out = new File(destDir, entry.getName());
                if (entry.isDirectory()) {
                    out.mkdirs();
                } else {
                    out.getParentFile().mkdirs();
                    try (OutputStream outStream = new FileOutputStream(out)) {
                        byte[] buffer = new byte[4096];
                        int len;
                        // Copies every byte the entry yields, however many that is
                        while ((len = tis.read(buffer)) > 0) {
                            outStream.write(buffer, 0, len);
                        }
                    }
                }
            }
        }
    }
}
```

Problem:

If someone gives you a TAR file with an entry claiming to be a multi-terabyte file (or a directory with millions of files), the code above naively tries to unpack all of it. Nothing in the loop limits how many entries it creates or how many bytes it writes, so even if the uploaded tar is only a few kilobytes, the app can end up trying to allocate gigabytes of memory or create hundreds of thousands of output files.

- Denial of Service: Your JVM crashes with an OutOfMemoryError, or the disk or its inodes fill up.

- Cloud Costs: On cloud providers that meter resources, such as AWS Lambda or Azure Functions, a single crafted archive can run up your bill or burn through quotas.

- Attack Surface: If you accept user archives (think CI systems, online file converters, backup tools), you are at risk.
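The gap between upload size and resource use gets much wider once compression is involved, since many uploads are compressed tarballs (`.tar.gz`). As a rough illustration using only `java.util.zip` from the JDK (not Commons Compress), this sketch gzips 10 MiB of zero bytes and reports how small the result is. `GzipAmplification` and `compressedSizeOfZeros` are hypothetical names.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.util.zip.GZIPOutputStream;

/** Illustration: how small a gzip stream of zeros is compared to its expanded size. */
final class GzipAmplification {

    /** Gzip-compresses the given number of zero bytes in memory and returns the compressed length. */
    static int compressedSizeOfZeros(int expandedBytes) throws IOException {
        ByteArrayOutputStream sink = new ByteArrayOutputStream();
        try (GZIPOutputStream gz = new GZIPOutputStream(sink)) {
            byte[] chunk = new byte[64 * 1024]; // all zeros
            int remaining = expandedBytes;
            while (remaining > 0) {
                int n = Math.min(chunk.length, remaining);
                gz.write(chunk, 0, n);
                remaining -= n;
            }
        } // closing the gzip stream writes the trailer into sink
        return sink.size();
    }

    public static void main(String[] args) throws IOException {
        int expanded = 10 * 1024 * 1024; // 10 MiB once decompressed
        int compressed = compressedSizeOfZeros(expanded);
        System.out.printf("%d bytes expand to %d bytes (about %dx)%n",
                compressed, expanded, expanded / compressed);
    }
}
```

Deflate tops out at roughly a 1000:1 ratio on input like this, so the compressed stream is typically on the order of 10 KB; the exact figure depends on the JDK's zlib. Whatever extracts the decompressed tar still faces the full 10 MiB.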

How the Patch Fixes It

Version 1.26.0 of Apache Commons Compress adds resource throttles:

- Limits on how many entries can be unpacked

- Better handling of broken/malicious metadata

From the official change log:

> Starting with 1.26, you can set maximum limits and input sizes for archive readers.

Maven

```xml
<dependency>
    <groupId>org.apache.commons</groupId>
    <artifactId>commons-compress</artifactId>
    <version>1.26.0</version>
</dependency>
```

Gradle

```groovy
implementation 'org.apache.commons:commons-compress:1.26.0'
```
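If you need to flag vulnerable builds, for example in a startup check or a CI step, comparing the version string against the affected range (1.21 up to, but not including, 1.26.0) is enough. This is a minimal sketch with hypothetical names (`CompressVersionCheck`, `isAffected`); it assumes plain `major.minor[.patch]` strings and ignores suffixes such as `-SNAPSHOT`, so a 1.26.0 pre-release would be treated as fixed.

```java
/**
 * Hypothetical helper: is a Commons Compress version inside the range affected by
 * CVE-2024-26308 (1.21 up to, but not including, 1.26.0)?
 */
final class CompressVersionCheck {
    private static final int[] FIRST_AFFECTED = {1, 21, 0};
    private static final int[] FIRST_FIXED = {1, 26, 0};

    static boolean isAffected(String version) {
        int[] v = parse(version);
        return compare(v, FIRST_AFFECTED) >= 0 && compare(v, FIRST_FIXED) < 0;
    }

    /**
     * Parses "major.minor[.patch]", dropping any "-suffix".
     * Throws NumberFormatException for anything that is not numeric.
     */
    static int[] parse(String version) {
        String core = version.split("-", 2)[0];
        String[] parts = core.split("\\.");
        int[] out = new int[3]; // missing parts default to 0, so "1.21" means 1.21.0
        for (int i = 0; i < Math.min(parts.length, 3); i++) {
            out[i] = Integer.parseInt(parts[i]);
        }
        return out;
    }

    /** Lexicographic comparison of {major, minor, patch}. */
    static int compare(int[] a, int[] b) {
        for (int i = 0; i < 3; i++) {
            if (a[i] != b[i]) {
                return Integer.compare(a[i], b[i]);
            }
        }
        return 0;
    }
}
```

For example, `isAffected("1.25.0")` returns true and `isAffected("1.26.0")` returns false. At runtime you could pass it `TarArchiveInputStream.class.getPackage().getImplementationVersion()`, but that value comes from the jar manifest and may be `null`, so check for that before calling `isAffected`.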

Example (with checks)

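```java
import org.apache.commons.compress.archivers.tar.TarArchiveEntry;
import org.apache.commons.compress.archivers.tar.TarArchiveInputStream;

import java.io.*;

public class SafeUnpackTar {

    public void safeUnpack(File tarFile, File destDir, long maxFileSize, int maxEntries) throws IOException {
        try (InputStream fi = new FileInputStream(tarFile);
             TarArchiveInputStream tis = new TarArchiveInputStream(fi)) {
            TarArchiveEntry entry;
            int entryCount = 0;
            while ((entry = tis.getNextTarEntry()) != null) {
                // Stop once the archive holds more entries than we are willing to create
                if (++entryCount > maxEntries) {
                    throw new IOException("Archive has too many entries (limit " + maxEntries + ")");
                }
                // Reject entries whose declared size exceeds the per-file limit
                if (entry.getSize() > maxFileSize) {
                    throw new IOException("File too large: " + entry.getName());
                }
                // ...proceed with extraction (as above)
            }
        }
    }
}
```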
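The `entry.getSize()` check above only looks at what the header declares. A stricter variant also counts the bytes actually copied and adds an archive-wide budget, so a header that lies about its size, or a flood of small entries, still trips a limit. This sketch uses only the JDK; `ExtractionLimits`, `countEntry`, `copyEntry`, and `maxTotalBytes` are hypothetical names, not Commons Compress API.

```java
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;

/**
 * Hypothetical helper that enforces limits on what is actually read, not just on
 * what the archive headers declare. Use one instance per archive.
 */
final class ExtractionLimits {
    private final int maxEntries;
    private final long maxFileSize;
    private final long maxTotalBytes;
    private int entries;
    private long totalBytes;

    ExtractionLimits(int maxEntries, long maxFileSize, long maxTotalBytes) {
        this.maxEntries = maxEntries;
        this.maxFileSize = maxFileSize;
        this.maxTotalBytes = maxTotalBytes;
    }

    /** Call once per entry, before extracting it. */
    void countEntry() throws IOException {
        if (++entries > maxEntries) {
            throw new IOException("Archive has too many entries (limit " + maxEntries + ")");
        }
    }

    /**
     * Copies one entry until the stream returns -1, checking both budgets before
     * each write, whatever size the header claimed. Returns the bytes written.
     */
    long copyEntry(InputStream in, OutputStream out) throws IOException {
        byte[] buffer = new byte[8192];
        long written = 0;
        int len;
        while ((len = in.read(buffer)) != -1) {
            written += len;
            totalBytes += len;
            if (written > maxFileSize) {
                throw new IOException("Entry exceeds " + maxFileSize + " bytes");
            }
            if (totalBytes > maxTotalBytes) {
                throw new IOException("Archive exceeds " + maxTotalBytes + " bytes in total");
            }
            out.write(buffer, 0, len);
        }
        return written;
    }
}
```

Inside the extraction loop, call `limits.countEntry()` for each entry and replace the manual copy loop with `limits.copyEntry(tis, outStream)`; `TarArchiveInputStream.read` returns -1 at the end of the current entry, so each call copies one entry. Because the checks run before each write, no entry writes more than `maxFileSize` bytes. A partially written file is left behind when a limit trips, so extracting into a temporary directory and deleting it on failure is a sensible companion.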

References and Further Reading

- Apache Security Advisory (original)

- Commons Compress CVE-2024-26308 Security Page

- NVD Entry for CVE-2024-26308

- GitHub pull request fixing the issue

Conclusion

CVE-2024-26308 is a textbook example of why you must not "trust the input" when dealing with user-supplied files. If you use Apache Commons Compress 1.21–1.25, upgrade to 1.26.0 or later immediately and review your code to avoid unbounded resource allocations during archive extraction.

If you want your Java servers and apps to stay up and secure, keep your libraries updated — and never trust archive metadata.

Stay safe, and keep that resource usage sane!

If you have questions or want a code review, feel free to reach out.

Timeline

Published on: 02/19/2024 09:15:38 UTC

Last modified on: 02/22/2024 15:21:36 UTC