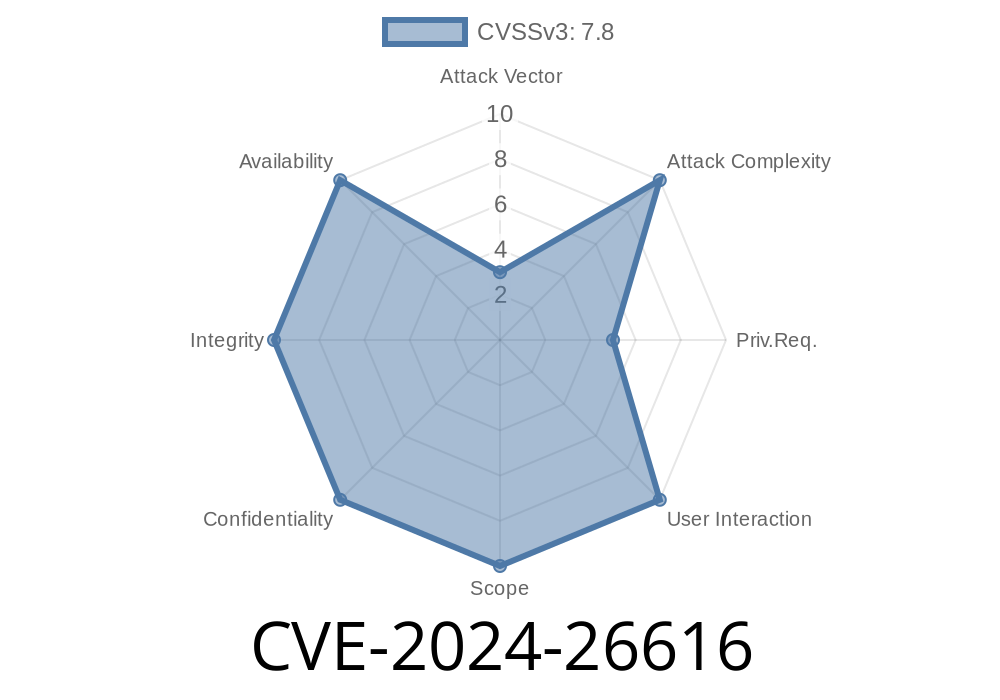

On Linux systems using the Btrfs filesystem, a subtle but serious vulnerability (tracked as CVE-2024-26616) was found in the way the scrub operation handles chunks whose length is not a multiple of 64K. Originally reported when scrubbing an ext4-converted Btrfs partition, the bug can lead to unrecoverable data errors, kernel crashes and, most notably, a use-after-free that can be triggered from user space.

In this breakdown, we’ll walk through what caused the problem, how it can be exploited, and how it was patched, illustrating the issue with relevant kernel code. If you run Btrfs on Linux, especially on filesystems converted from ext2/3/4, you’ll want to read closely.

What’s Affected

- Vulnerable software: Linux kernel Btrfs code before commit c88f271c6c5d (merge in 6.7+).

- Attack surface: Any chunk whose length is not a multiple of 64K (created by filesystem conversion or edge-case tools) that is then scrubbed.

- Attack method: Triggering a scrub on an affected filesystem. Starting a scrub requires CAP_SYS_ADMIN, so in practice this means root or an automated job that already runs scrub.

- Impact: Kernel use-after-free, slab corruption, OOPS, crash, or potential privilege escalation depending on what’s freed and reused.

What is "scrub"?

The Btrfs scrub operation is a disk check/repair process that reads data blocks to verify their checksums and, where possible, repair errors. It submits disk I/O ("bio"s) for specific ranges, working through each chunk in 64K stripes (stripe_len 65536). The scrub code assumed that every chunk length is a multiple of this 64K stripe length.

On a converted filesystem, you can get a chunk like this:

```
item 2 key (FIRST_CHUNK_TREE CHUNK_ITEM 2214658048) itemoff 16025 itemsize 80
	length 86016 owner 2 stripe_len 65536 type DATA|single
```

Here the chunk length is 86016 bytes, which is not a multiple of 64K (65536). The logical address 2214744064 lies exactly at the *end* of the chunk:

2214658048 + 86016 = 2214744064

When a scrub read crosses this address, btrfs_submit_bio() splits the bio (block I/O object) at the chunk boundary for device mapping, so the endio callback fires twice, once for each half.
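The arithmetic above can be checked with a small userspace C sketch. It assumes, as the chunk item suggests, that scrub reads the chunk in fixed 64K stripes starting at the chunk start; `struct stripe_split`, `split_stripe()` and `chunk_is_stripe_aligned()` are hypothetical helpers, not kernel functions.

```c
#include <assert.h>
#include <stdint.h>

/* Assumption: scrub reads a chunk in fixed 64K stripes (stripe_len 65536). */
#define STRIPE_LEN 65536ULL

/* Hypothetical helper type: how one full-size stripe read relates to the chunk. */
struct stripe_split {
	uint64_t stripe_start; /* logical address where the stripe read begins */
	uint64_t in_chunk;     /* bytes of the read that lie inside the chunk */
	uint64_t beyond_chunk; /* bytes of the read past the chunk end */
};

/* Split a full 64K read of stripe number stripe_nr at the chunk end. */
struct stripe_split split_stripe(uint64_t chunk_start, uint64_t chunk_len,
				 uint64_t stripe_nr)
{
	uint64_t chunk_end = chunk_start + chunk_len;
	struct stripe_split s;

	s.stripe_start = chunk_start + stripe_nr * STRIPE_LEN;
	assert(s.stripe_start < chunk_end); /* stripe must start inside the chunk */

	if (s.stripe_start + STRIPE_LEN <= chunk_end) {
		s.in_chunk = STRIPE_LEN; /* fully inside the chunk: nothing to split */
		s.beyond_chunk = 0;
	} else {
		s.in_chunk = chunk_end - s.stripe_start;
		s.beyond_chunk = STRIPE_LEN - s.in_chunk;
	}
	return s;
}

/* Returns nonzero if the chunk length is a whole number of 64K stripes. */
int chunk_is_stripe_aligned(uint64_t chunk_len)
{
	return chunk_len % STRIPE_LEN == 0;
}
```

For the example chunk, `split_stripe(2214658048, 86016, 1)` describes a stripe starting at 2214723584 with 20480 bytes inside the chunk and 45056 bytes past 2214744064, which is the range a read assuming full 64K stripes would request.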

PROBLEM: scrub_submit_initial_read() expects exactly one endio call, so the first call frees the memory (bbio::bio and its bio_vec). The second callback then uses this freed memory: a classic use-after-free.
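To see why a single-completion assumption turns a split bio into a use-after-free, here is a userspace C model. It is a sketch, not kernel code: `struct read_req`, `endio_free_on_first()`, `endio_free_on_last()` and `simulate()` are hypothetical names, and instead of really freeing memory the model only marks the request as freed and counts accesses that arrive afterwards. The "free on last completion" variant is a generic counting pattern shown only for contrast; the actual patch takes a different route and stops reading past the chunk end.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical model of a read request whose state is released in endio.
 * Nothing is really freed (that would be undefined behaviour); we only
 * record the "freed" state and count accesses made after it. */
struct read_req {
	bool freed;        /* set when the completion path releases the request */
	int late_accesses; /* endio calls that touched the request after release */
	int pending;       /* outstanding completions (used by the counting variant) */
};

/* Buggy policy: assumes exactly one completion and releases on the first. */
void endio_free_on_first(struct read_req *r)
{
	if (r->freed) {
		r->late_accesses++; /* in the kernel this access is the use-after-free */
		return;
	}
	r->freed = true;
}

/* Counting policy: release only when the last outstanding completion arrives. */
void endio_free_on_last(struct read_req *r)
{
	if (r->freed) {
		r->late_accesses++;
		return;
	}
	if (--r->pending == 0)
		r->freed = true;
}

/* Deliver one completion per piece of a split bio; return late accesses. */
int simulate(void (*endio)(struct read_req *), int pieces)
{
	struct read_req r = { .freed = false, .late_accesses = 0, .pending = pieces };

	for (int i = 0; i < pieces; i++)
		endio(&r);
	assert(r.freed); /* both policies must eventually release the request */
	return r.late_accesses;
}
```

With two pieces, `simulate(endio_free_on_first, 2)` returns 1: the second completion touches a request that the first one already released.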

Example Crash Log

```
BUG: KASAN: slab-use-after-free in __blk_rq_map_sg+0x18f/0x7c0
Read of size 8 at addr ffff8881013c9040 by task btrfs/909
...
Call Trace:
 __blk_rq_map_sg+0x18f/0x7c0
 virtblk_prep_rq.isra.0+0x215/0x6a0 [virtio_blk ...]
 flush_scrub_stripes+0x38e/0x430 [btrfs ...]
 scrub_stripe+0x82a/0xae0 [btrfs ...]
 scrub_chunk+0x178/0x200 [btrfs ...]
 scrub_enumerate_chunks+0x4bc/0xa30 [btrfs ...]
 btrfs_scrub_dev+0x398/0x810 [btrfs ...]
 btrfs_ioctl+0x4b9/0x3020 [btrfs ...]
```

---

Exploitability Details

- Anyone able to start a scrub on an affected filesystem (root, or automation scripts that run scrub) can trigger the bug: create a chunk whose length is not a multiple of 64K (e.g. via filesystem conversion), then scrub it.

- After the bio is freed, the second (unexpected) endio call can still reference its memory. This may read garbage, cause an oops, crash the kernel or, with heap manipulation, potentially allow code execution.

Practical notes

- Exploitability depends on the architecture and kernel configuration, but a reliable oops or crash is likely achievable.

- Code execution would require a way to control the reallocated slab object or to plant fake bio/bio_vec objects.

Vulnerable Code (simplified)

```c
/* scrub_submit_initial_read() in fs/btrfs/scrub.c (simplified) */
bio = bio_alloc(...);
/* ... fill the bio with a full 64K stripe, assuming the chunk covers it */
submit_bio(bio); /* hand the read to the block layer */

/* Later, the completion (endio) callback runs */
static void scrub_read_endio(struct bio *bio)
{
	struct scrub_bio *scrub_bio = bio->bi_private;

	/* ... process the completion, assuming this is the only callback */

	kfree(scrub_bio); /* freed here; a second endio for the other half
	                   * of a split bio then touches freed memory */
}
```

Problem Scenario

- When the chunk length is not 64K-aligned, the kernel splits the I/O at the chunk end, e.g. into 4096-byte and 45056-byte bios.

The patch fixes two things:

- scrub_read_endio() now only updates the status bits for sectors inside the chunk boundary, so a read that ends at the chunk end never touches state for sectors beyond it.

- scrub_submit_initial_read() now reads only within the real chunk length, adding sectors to the bio one at a time, instead of always submitting a full 64K stripe.
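The first fix can be modelled in userspace C. This is a hedged sketch assuming 4K sectors and a per-stripe bitmap with one bit per sector; `struct stripe_model`, `sectors_in_chunk()` and `read_endio_model()` are hypothetical and only illustrate clamping status updates to the chunk end, not the kernel's actual data structures.

```c
#include <assert.h>
#include <stdint.h>

#define SECTORSIZE 4096u  /* assumption: 4K sectors */
#define STRIPE_LEN 65536u /* 64K scrub stripe, i.e. 16 sectors */

/* Hypothetical per-stripe state: one "read OK" bit per sector. */
struct stripe_model {
	uint64_t logical;          /* logical start of the stripe */
	uint64_t chunk_end;        /* chunk start + chunk length */
	uint32_t uptodate_bitmap;  /* bit i set => sector i read successfully */
};

/* Number of the stripe's sectors that lie inside the chunk. */
unsigned int sectors_in_chunk(const struct stripe_model *s)
{
	uint64_t bytes;

	assert(s->logical < s->chunk_end);
	bytes = s->chunk_end - s->logical;
	if (bytes > STRIPE_LEN)
		bytes = STRIPE_LEN;
	return (unsigned int)(bytes / SECTORSIZE);
}

/* Completion for a read covering sectors [first, first + nr) of the stripe.
 * Following the idea of the fix, bits past the chunk end are never touched. */
void read_endio_model(struct stripe_model *s, unsigned int first, unsigned int nr)
{
	unsigned int limit = sectors_in_chunk(s);

	for (unsigned int i = first; i < first + nr && i < limit; i++)
		s->uptodate_bitmap |= 1u << i;
}
```

For the tail stripe of the example chunk (starting at 2214723584), only 5 sectors lie inside the chunk, so a completion for all 16 sectors sets just bits 0 to 4, and a completion for sectors 5 to 15 leaves the bitmap unchanged.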

Code Snippet (Post-patch)

```c
static void scrub_read_endio(struct bio *bio)
{
	/* Only update status within the actual range of the chunk;
	 * skip anything beyond the last valid sector. */
}

static int scrub_submit_initial_read(..., u64 chunk_len, ...)
{
	/* Calculate the number of sectors up to chunk_len */
	/* Loop sector by sector, building up the bio only for the valid range */
	...
}
```
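The second fix, building the initial read one sector at a time and stopping at the chunk end, might look like this in a userspace model. Again this assumes 4K sectors, and `struct read_model` and `build_initial_read()` are hypothetical stand-ins for the kernel's bio handling.

```c
#include <assert.h>
#include <stdint.h>

#define SECTORSIZE 4096u  /* assumption: 4K sectors */
#define STRIPE_LEN 65536u /* 64K scrub stripe */

/* Hypothetical stand-in for a bio: a contiguous run of sectors to read. */
struct read_model {
	uint64_t start;      /* logical start of the read */
	uint32_t nr_sectors; /* sectors added so far */
};

/* Add sectors one at a time, stopping at whichever comes first: the end of
 * the 64K stripe or the end of the chunk. Returns the read length in bytes. */
uint32_t build_initial_read(struct read_model *rd, uint64_t stripe_start,
			    uint64_t chunk_start, uint64_t chunk_len)
{
	uint64_t chunk_end = chunk_start + chunk_len;

	assert(stripe_start >= chunk_start && stripe_start < chunk_end);
	rd->start = stripe_start;
	rd->nr_sectors = 0;
	for (uint32_t i = 0; i < STRIPE_LEN / SECTORSIZE; i++) {
		uint64_t sector = stripe_start + (uint64_t)i * SECTORSIZE;

		if (sector >= chunk_end)
			break; /* never queue I/O past the chunk boundary */
		rd->nr_sectors++;
	}
	return rd->nr_sectors * SECTORSIZE;
}
```

For the example chunk, the tail stripe at 2214723584 yields a 20480-byte read (5 sectors), while the first stripe at 2214658048 still yields a full 65536 bytes, so no bio ever reaches past 2214744064.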

See the actual patch on kernel.org.

Here is pseudo-code showing how a PoC could look (for educational purposes):

```sh
# As root or with CAP_SYS_ADMIN
modprobe btrfs

# Create ext4, fill it, then convert to Btrfs (to get odd chunk lengths)
mkfs.ext4 /dev/vdb
mount /dev/vdb /mnt/test
fallocate -l 80K /mnt/test/file
umount /mnt/test
btrfs-convert /dev/vdb

# Now mount as Btrfs and run scrub in the foreground
mount -t btrfs /dev/vdb /mnt/test
btrfs scrub start -B /mnt/test   # watch dmesg for KASAN/BUG reports
```

If unpatched, this can trigger a use-after-free and crash the kernel.
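To look for affected chunks on an existing filesystem, one option is to dump the chunk tree with `btrfs inspect-internal dump-tree -t chunk <device>` and compare each chunk's `length` with its `stripe_len`. The C filter below is a hypothetical helper (`parse_chunk_line()`, `chunk_len_unaligned()` and `scan_chunk_dump()` are illustrative names) and assumes the dump uses the same `length ... owner ... stripe_len ...` line format as the chunk item shown earlier; other btrfs-progs versions may format lines differently.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

/* Parse a dump-tree line such as
 *   "length 86016 owner 2 stripe_len 65536 type DATA|single"
 * (format assumed from the chunk item above). Returns true on a match. */
bool parse_chunk_line(const char *line, unsigned long long *len,
		      unsigned long long *stripe_len)
{
	const char *p = strstr(line, "length ");
	unsigned long long owner;

	if (!p)
		return false;
	return sscanf(p, "length %llu owner %llu stripe_len %llu",
		      len, &owner, stripe_len) == 3;
}

/* A chunk is affected if its length is not a whole number of stripes. */
bool chunk_len_unaligned(unsigned long long len, unsigned long long stripe_len)
{
	assert(stripe_len != 0);
	return len % stripe_len != 0;
}

/* Scan dump-tree output and print every chunk with an unaligned length.
 * Returns the number of such chunks found. */
int scan_chunk_dump(FILE *in)
{
	char line[512];
	int found = 0;

	while (fgets(line, sizeof(line), in)) {
		unsigned long long len, stripe_len;

		if (parse_chunk_line(line, &len, &stripe_len) &&
		    chunk_len_unaligned(len, stripe_len)) {
			printf("unaligned chunk: length %llu, stripe_len %llu\n",
			       len, stripe_len);
			found++;
		}
	}
	return found;
}
```

A small `main()` calling `scan_chunk_dump(stdin)` could then be fed the dump-tree output through a pipe; the chunk from the example above would be reported because 86016 is not a multiple of 65536.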

Official References and Further Reading

- Linux Kernel Patch (c88f271c6c5dcb1)

- Btrfs mailing list report

- NVD CVE-2024-26616 Entry

Conclusion

If you run Btrfs, especially on converted filesystems, upgrade to a kernel with this fix immediately. The Btrfs team fixed a subtle bug in how non-standard chunk lengths interact with I/O completion callbacks, one that resulted in dangerous use-after-free errors. Keep your kernel up to date; if you cannot upgrade yet, avoid running scrub on converted or untrusted filesystems, and watch kernel (dmesg) logs for suspicious Btrfs errors after conversion.

Stay Safe!

*Original analysis based on commit messages and mailing list reports. Please share or credit if you use this explanation. For questions, see the Btrfs wiki or drop by the Linux kernel mailing lists.*

Timeline

Published on: 03/11/2024 18:15:19 UTC

Last modified on: 12/12/2024 15:31:18 UTC