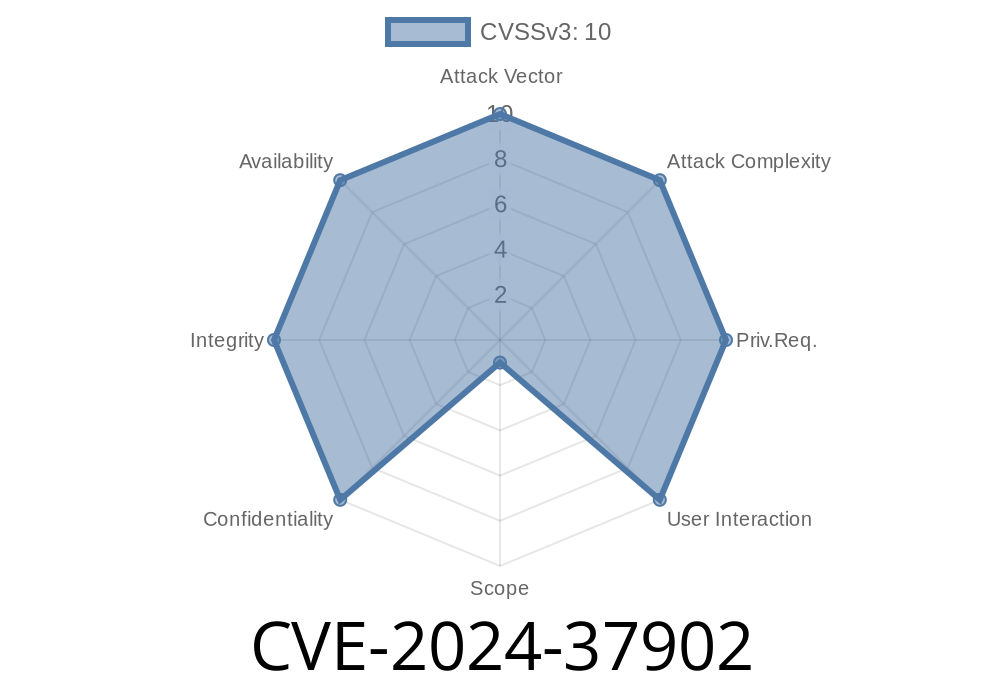

A serious vulnerability, CVE-2024-37902, was discovered in the DeepJavaLibrary (DJL), a widely used deep learning framework for Java. DJL supports multiple deep learning engines and is used in production systems to serve machine learning models at scale.

Versions affected: 0.1.0 through 0.27.0

Patched in: DJL 0.28.0 and DJL Large Model Inference containers 0.27.0

If you use any DJL version between 0.1.0 and 0.27.0, your system may be at risk of arbitrary file overwrite, potentially leading to system compromise.

What’s the Issue?

The vulnerability allows a maliciously crafted archive (like a .tar, .zip, or similar), containing files with absolute file paths, to be processed by DJL code without proper validation. When such an archive is extracted (for example, during model loading), any file inside can overwrite any location on your system—even critical files like /etc/passwd or C:\Windows\System32\drivers\etc\hosts.

How Does the Exploit Work?

When DJL loads models, it often extracts archived artifacts. If an archive contains a file with an absolute path, the vulnerable DJL versions will place the file exactly at that path on your filesystem.

Let's look at a simplified code snippet from the vulnerable version:

    // Vulnerable DJL code (pseudocode)
    // TarArchiveInputStream and TarArchiveEntry come from Apache Commons Compress,
    // FileUtils from Apache Commons IO.
    try (TarArchiveInputStream tin = new TarArchiveInputStream(archiveInputStream)) {
        TarArchiveEntry entry;
        while ((entry = tin.getNextTarEntry()) != null) {
            File outFile = new File(entry.getName()); // UNVALIDATED PATH!
            // copyToFile leaves the tar stream open so the loop can reach the next entry
            FileUtils.copyToFile(tin, outFile);
        }
    }

What’s happening?

- The code takes each entry name from the archive and uses it directly as the output path. Without checking whether that path is absolute, it writes to that location.

- An attacker’s archive entry like /etc/shadow or C:\Windows\system32\evil.dll will *overwrite that file* when processed.
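Resolving entries against a target directory does not help on its own either. As a minimal sketch (the class name, method name, and the /srv/models/cache directory are illustrative assumptions, not DJL code), `java.nio.file.Path.resolve` returns an absolute argument unchanged, so the base directory is silently ignored:

```java
import java.nio.file.Path;
import java.nio.file.Paths;

final class NaiveResolveDemo {
    /** Resolves an archive entry name against a base directory with no validation. */
    static Path resolveNaively(Path baseDir, String entryName) {
        // If entryName is absolute, resolve() returns it as-is and baseDir is ignored.
        return baseDir.resolve(entryName);
    }

    public static void main(String[] args) {
        Path baseDir = Paths.get("/srv/models/cache"); // hypothetical extraction directory

        // On a POSIX system this prints /srv/models/cache/weights/model.pt
        System.out.println(resolveNaively(baseDir, "weights/model.pt"));

        // On a POSIX system this prints /etc/passwd
        System.out.println(resolveNaively(baseDir, "/etc/passwd"));
    }
}
```

The second call shows why an explicit check is needed before any bytes are written.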

Real-World Exploitation Scenario

Suppose you have a DJL-based application that receives model files from users (or even from questionable servers):

1. Attacker crafts a malicious model archive, embedding files with system-critical paths (e.g., /root/.ssh/authorized_keys).

2. You load the model in DJL: the library unpacks the archive and *blindly* writes files to those paths.

3. Result: attacker’s code, keys, or configuration files overwrite your originals, possibly leading to code execution, data theft, or denial of service.

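Step 1: Create a malicious tar archive

    mkdir payload
    echo "hacked!" > payload/etc-passwd
    # GNU tar: -P keeps leading slashes, --transform prepends "/" to the stored name
    tar -cPf evil_model.tar --transform 's|^|/|' payload/etc-passwd

The archive now contains one entry stored under the absolute path /payload/etc-passwd, a harmless stand-in for a real target such as /etc/passwd.

Step 2: DJL code loads the archive

    // In vulnerable DJL versions:
    Criteria<Model, Object> criteria = Criteria.builder()
            .setTypes(Model.class, Object.class)
            .optModelUrls("path/to/evil_model.tar")
            .build();
    ZooModel<Model, Object> model = criteria.loadModel(); // Triggers extraction

Outcome:

After extraction, the file is written to /payload/etc-passwd at the filesystem root instead of inside the directory DJL extracts models into. An entry named /etc/passwd would replace that file on your server in the same way, provided the process has permission to write it.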

How Is This Fixed?

Starting in DJL 0.28.0 (and DJL Large Model Inference containers 0.27.0), any absolute or path-traversal archive entry is skipped or sanitized. Here’s a patched approach:

    String entryName = entry.getName();
    if (entryName.startsWith("/") || entryName.contains("..")) {
        // skip or sanitize
        continue;
    }
    File outFile = new File(safeBase, entryName); // always stays within a safe directory
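A string check like the one above can be complemented by comparing normalized paths, which also covers Windows-style absolute names when running on Windows and avoids rejecting harmless names that merely contain two dots. The following is a minimal sketch using only the JDK. The class and method names are hypothetical and this is not the code DJL ships:

```java
import java.io.IOException;
import java.nio.file.Path;
import java.nio.file.Paths;

final class SafeExtractPaths {
    /**
     * Resolves entryName inside baseDir and rejects any result that lands
     * outside it (absolute entries, ".." traversal).
     */
    static Path safeResolve(Path baseDir, String entryName) throws IOException {
        Path base = baseDir.toAbsolutePath().normalize();
        // normalize() collapses "." and ".." segments before the containment check
        Path target = base.resolve(entryName).normalize();
        // Path.startsWith compares whole path elements, not raw string prefixes
        if (!target.startsWith(base)) {
            throw new IOException("Archive entry escapes extraction directory: " + entryName);
        }
        return target;
    }

    public static void main(String[] args) throws IOException {
        Path base = Paths.get("/srv/models/cache"); // hypothetical extraction directory
        System.out.println(safeResolve(base, "weights/model.pt")); // accepted
        System.out.println(safeResolve(base, "/etc/passwd"));      // throws IOException
    }
}
```

Throwing instead of skipping is a design choice in this sketch: a model archive that contains an escaping entry is probably hostile, so aborting the whole load is arguably safer than extracting the rest of it. Symbolic links inside the archive are a separate concern that this check does not address.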

What Should You Do?

1. Upgrade immediately:

- For the library, upgrade to DJL 0.28.0 or later (see the DJL 0.28.0 release notes).

- For containers, use DJL LMI container v0.27.0 or later.

2. Audit your code:

- Scan logs and storage for unusual overwritten files if you were using older DJL versions.

3. Follow best practices:

- Never run ML inference as root.

- Use a dedicated, locked-down folder for model extraction/loading.
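As an extra precaution, model archives from untrusted sources can be inspected before they are handed to any loader. The sketch below is an assumption-laden example rather than a DJL feature: it covers ZIP archives only, because the JDK has no built-in tar reader (tar files would need a library such as Apache Commons Compress), and the class and method names are hypothetical.

```java
import java.io.IOException;
import java.io.InputStream;
import java.nio.file.Files;
import java.nio.file.Paths;
import java.util.ArrayList;
import java.util.List;
import java.util.zip.ZipEntry;
import java.util.zip.ZipInputStream;

final class ArchiveEntryAudit {
    /** Flags absolute paths (POSIX, UNC, drive-letter) and ".." path segments. */
    static boolean isSuspicious(String entryName) {
        String name = entryName.replace('\\', '/');
        if (name.startsWith("/")) {
            return true; // POSIX absolute path or UNC path
        }
        if (name.length() >= 2 && Character.isLetter(name.charAt(0)) && name.charAt(1) == ':') {
            return true; // Windows drive-letter path such as C:/Windows/...
        }
        for (String segment : name.split("/")) {
            if (segment.equals("..")) {
                return true; // parent-directory traversal
            }
        }
        return false;
    }

    /** Returns the names of all suspicious entries without extracting anything. */
    static List<String> findSuspiciousEntries(InputStream zipStream) throws IOException {
        List<String> hits = new ArrayList<>();
        try (ZipInputStream zin = new ZipInputStream(zipStream)) {
            ZipEntry entry;
            while ((entry = zin.getNextEntry()) != null) {
                if (isSuspicious(entry.getName())) {
                    hits.add(entry.getName());
                }
            }
        }
        return hits;
    }

    public static void main(String[] args) throws IOException {
        if (args.length != 1) {
            System.err.println("usage: ArchiveEntryAudit <archive.zip>");
            return;
        }
        try (InputStream in = Files.newInputStream(Paths.get(args[0]))) {
            for (String name : findSuspiciousEntries(in)) {
                System.out.println("suspicious entry: " + name);
            }
        }
    }
}
```

A scan like this is a supplement to upgrading, not a replacement for it: it only reports entry names and does nothing about an already vulnerable extraction path.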

Summary

CVE-2024-37902 lets attackers overwrite arbitrary files on your system if you load malicious archives in DJL versions 0.1.0 to 0.27.0. This bug is severe and easy to exploit. Upgrade now to DJL 0.28.0 or later and audit your systems if you may have been exposed.

*Stay safe, keep your dependencies up to date, and always treat model files with suspicion!*

Timeline

Published on: 06/17/2024 20:15:14 UTC

Last modified on: 06/20/2024 12:44:22 UTC