llama.cpp is a widely used project for running large language models (LLMs) locally on ordinary hardware, implemented in fast C and C++ code. Its core goal is to make advanced LLM inference accessible to everyone.

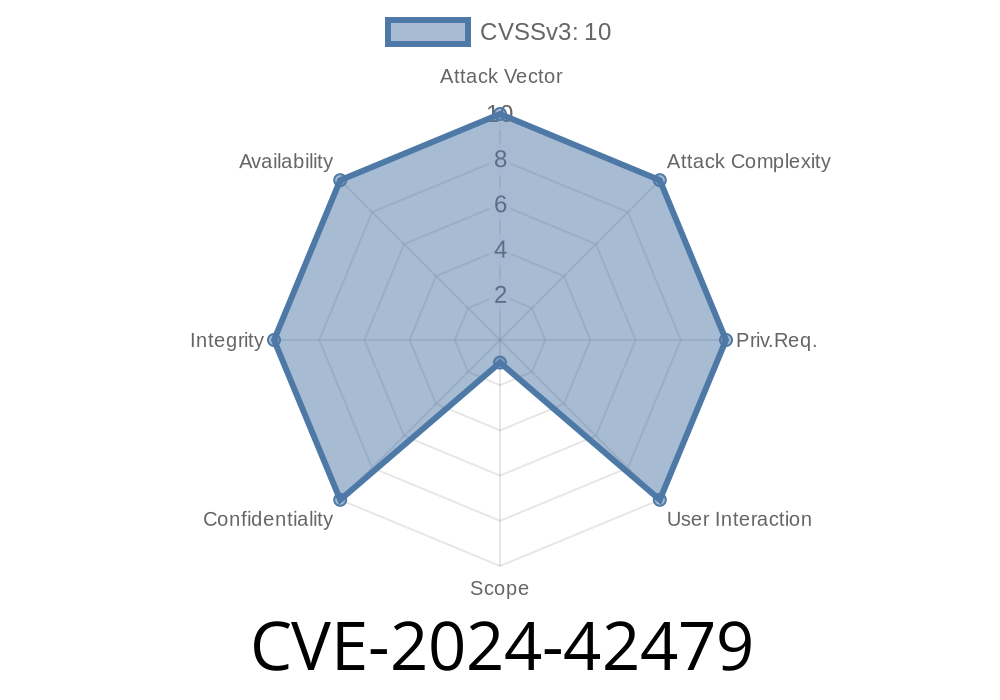

However, a vulnerability disclosed in August 2024, CVE-2024-42479, exposes a critical risk in how llama.cpp's RPC code trusts the data pointer inside its internal rpc_tensor structure. The flaw lets an attacker write to arbitrary memory addresses, potentially leading to remote code execution or denial of service (DoS).

Let’s break down the vulnerability, see how it works, and look at ways to protect your applications. All code here is for educational purposes only.

- Impact: remote code execution, or crashing the service (DoS).
- Fixed in: release b3561.

Here’s a simplified version of the rpc_tensor struct from llama.cpp:

```c
typedef struct {
    void * data;   // address of the tensor's contents, taken from the request
    // ... more members ...
} rpc_tensor;
```

Elsewhere, the code writes through this pointer directly, for example:

```c
// In the actual code, 'tensor' is an rpc_tensor pointer controlled by external input
memcpy(tensor->data, src_buf, size);
```

Problem: There are no checks to ensure tensor->data really points to a buffer the code owns. If an attacker can control this pointer (directly, or via deserialization of user-supplied data), they can make it point anywhere in the process’s address space.
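To see why deserialization alone is enough to hand the pointer to an attacker, consider a hypothetical, simplified wire format in which the client sends the tensor's data address as a raw 8-byte little-endian integer. This mirrors the idea behind rpc_tensor, not its exact upstream layout, and wire_tensor, read_u64_le, and parse_wire_tensor are illustrative names only. The parser faithfully reproduces whatever address the client sent:

```c
#include <stdint.h>
#include <string.h>

/* Hypothetical, simplified request layout (not the upstream rpc_tensor):
 * bytes 0..7  = data address, little-endian
 * bytes 8..15 = size in bytes, little-endian */
typedef struct {
    uint64_t data;
    uint64_t size;
} wire_tensor;

/* Decode an unsigned 64-bit little-endian integer from raw bytes. */
static uint64_t read_u64_le(const unsigned char *p) {
    uint64_t v = 0;
    for (int i = 7; i >= 0; i--) {
        v = (v << 8) | p[i];
    }
    return v;
}

/* Parse a 16-byte request. Nothing here checks that `data` refers to
 * memory the server owns: it is simply whatever the client sent. */
static wire_tensor parse_wire_tensor(const unsigned char buf[16]) {
    wire_tensor t;
    t.data = read_u64_le(buf);
    t.size = read_u64_le(buf + 8);
    return t;
}
```

Feeding it the bytes ef be ad de 00 00 00 00 as the address field yields data == 0xdeadbeef. A server that later casts that value to a pointer and writes through it is writing wherever the client chose.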

Exploit Scenario

If the attacker can send a specially crafted RPC request or serialized object, they can set tensor->data to an address like 0xdeadbeef (or something meaningful, like the address of a function pointer). Then, when the server calls memcpy, it writes attacker-controlled data there.

This is an arbitrary address write: the holy grail for attacking native code.

Suppose you control the data being deserialized, such as a model’s weights or an inference request:

```c
// Malicious input sets tensor->data to a target address.
// get_tensor_from_request() is a hypothetical stand-in for the deserialization step.
rpc_tensor * tensor = get_tensor_from_request(payload);

unsigned char evil_payload[] = "\xAA\xBB\xCC\xDD"; // Whatever the attacker wants
size_t size = 4;                                   // Copy the 4 payload bytes, not the trailing NUL

memcpy(tensor->data, evil_payload, size); // Writes directly to attacker-chosen memory
```

If tensor->data points at a function pointer, a GOT (Global Offset Table) entry, or other sensitive data, the write overwrites it and may let the attacker hijack execution.
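To make the arbitrary-write primitive concrete, here is a self-contained toy program in which a request-supplied address is used to overwrite a function pointer elsewhere in the same process. It is not llama.cpp code: toy_request, handle_set_tensor, run_hijack_demo, and the two handlers are hypothetical names, and the "attacker" simply aims the write at a global variable whose address it already knows.

```c
#include <stdint.h>
#include <string.h>

/* Toy model of the vulnerable write path (hypothetical, not llama.cpp). */
typedef struct {
    uint64_t data;  /* destination address chosen by the client */
    uint64_t size;  /* number of bytes to copy */
} toy_request;

static int last_called = 0;
static void normal_handler(void)   { last_called = 1; }
static void attacker_handler(void) { last_called = 2; }

/* A function pointer that lives elsewhere in the process. */
static void (*callback)(void) = normal_handler;

/* VULNERABLE: copies client bytes to a client-chosen address, unchecked. */
static void handle_set_tensor(const toy_request *req, const unsigned char *payload) {
    memcpy((void *)(uintptr_t)req->data, payload, (size_t)req->size);
}

/* Sends one malicious request, then invokes the callback.
 * Returns which handler actually ran (1 = normal, 2 = attacker). */
static int run_hijack_demo(void) {
    /* Payload: the raw bytes of a pointer to attacker_handler. */
    void (*replacement)(void) = attacker_handler;
    unsigned char payload[sizeof replacement];
    memcpy(payload, &replacement, sizeof replacement);

    /* Target: the address of the callback variable. */
    toy_request req = {
        .data = (uint64_t)(uintptr_t)&callback,
        .size = sizeof payload,
    };
    handle_set_tensor(&req, payload);

    callback();  /* control flow now reaches attacker_handler */
    return last_called;
}
```

In a real attack the hard part is finding a useful target address (ASLR makes this harder); the toy sidesteps that by taking the address of callback directly.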

Even without code execution, an attacker can cause a denial of service by pointing tensor->data at unmapped memory or a critical structure, crashing the process.

This is especially dangerous for any llama.cpp deployments offering an API, chatbot backend, or service accepting untrusted input.

Official Fix

The maintainers fixed this in release b3561. The fix checks tensor->data, making sure it refers to a buffer the server actually owns, before any write takes place.

Here’s a sketch of a safer pattern, which validates the pointer before writing through it:

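```c
// Sketch only: get_tensor_from_request() and is_valid_data_pointer() are
// hypothetical helpers, not llama.cpp APIs.
rpc_tensor * tensor = get_tensor_from_request(payload);

if (tensor == NULL || !is_valid_data_pointer(tensor->data, size)) {
    // Reject the request instead of writing through an untrusted pointer
    return -1;
}

memcpy(tensor->data, src_buf, size);
```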
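In practice, the validation step has to know which buffer the tensor is supposed to live in. A minimal sketch of such a range check, assuming the server tracks the base address and size of each buffer it allocated itself (data_range_is_within_buffer is a hypothetical helper, not the upstream function):

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>

/* Accept a write of `size` bytes at `ptr` only if the whole range
 * [ptr, ptr + size) lies inside the server-owned buffer
 * [base, base + buf_size). The arithmetic is arranged so that it
 * cannot overflow, even for huge attacker-supplied sizes. */
static bool data_range_is_within_buffer(uintptr_t ptr, size_t size,
                                        uintptr_t base, size_t buf_size) {
    if (ptr < base) {
        return false;                 /* starts before the buffer */
    }
    uintptr_t offset = ptr - base;    /* safe: ptr >= base */
    if (offset > buf_size) {
        return false;                 /* starts past the end */
    }
    return size <= buf_size - offset; /* enough room left for the write */
}
```

Comparing against the remaining space (buf_size - offset) instead of computing ptr + size avoids the integer overflow that a naive end-pointer check would allow.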

Recommendation:

Update your llama.cpp build to *at least* b3561. Until you have confirmed you are on a patched build, do not let it deserialize data from untrusted clients.

Links

- llama.cpp repository

- CVE-2024-42479 record (MITRE)

Conclusion

Memory safety in C/C++ projects running critical workloads (like LLM inference) is a must. CVE-2024-42479 in llama.cpp highlights why pointer checks are crucial. If you’re using llama.cpp, make sure you’re running b3561 or later!

For more technical details, follow the official fix diff, read the CVE record, and always keep your environments up to date.

Timeline

Published on: 08/12/2024 15:15:21 UTC

Last modified on: 08/15/2024 14:03:53 UTC