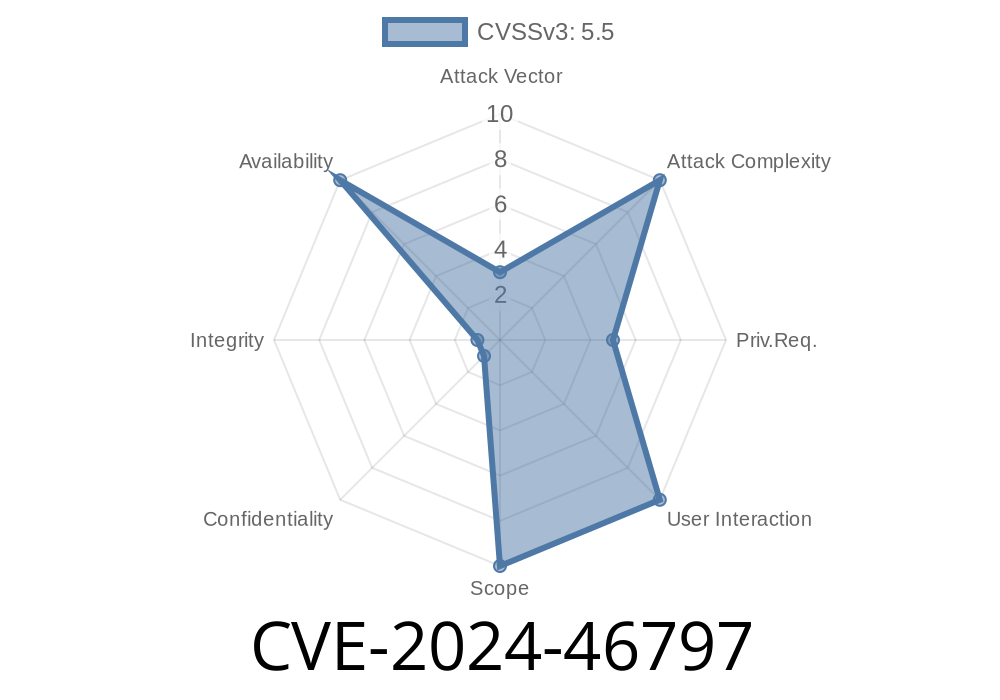

On Linux PowerPC systems, a subtle but critical kernel bug (CVE-2024-46797) was found and fixed in the implementation of qspinlock's MCS queue. This vulnerability could cause rare but severe deadlocks on multi-core systems, especially under heavy load or with stress-testing tools. In this post, we break down what happened, why it could deadlock your system, and how the fix works. Along the way, we’ll see the relevant code and show how the bug could be exploited to hang a Linux system.

TL;DR: If you run Linux on multi-core PowerPC hardware, make sure your kernel is patched against CVE-2024-46797!

What Is the QSpinlock MCS Queue?

qspinlock is a kernel implementation of a *queued* spinlock, helping many CPUs take turns holding a lock with better fairness and less cache-line bouncing compared to simple spinlocks. The MCS queue part makes sure each CPU waits in a queue for the lock, by building a linked list of waiting CPUs.
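To make the queueing idea concrete, here is a minimal userspace sketch of a textbook MCS lock using C11 atomics. It is not the PowerPC kernel code, which is more involved; the names mcs_lock, mcs_node, mcs_acquire, and mcs_release are made up for illustration.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stddef.h>

/* One queue entry per waiter (hypothetical layout for illustration). */
struct mcs_node {
    _Atomic(struct mcs_node *) next;  /* successor in the queue, if any */
    atomic_bool locked;               /* set to true by the predecessor on handoff */
};

struct mcs_lock {
    _Atomic(struct mcs_node *) tail;  /* last waiter, or NULL when the lock is free */
};

static void mcs_acquire(struct mcs_lock *lock, struct mcs_node *me)
{
    assert(lock != NULL && me != NULL);
    atomic_store(&me->next, NULL);
    atomic_store(&me->locked, false);

    /* Publish ourselves as the new tail and learn who was there before. */
    struct mcs_node *prev = atomic_exchange(&lock->tail, me);
    if (prev == NULL)
        return;                       /* queue was empty: we own the lock */

    atomic_store(&prev->next, me);    /* link behind the predecessor */
    while (!atomic_load(&me->locked))
        ;                             /* spin on our own node only */
}

static void mcs_release(struct mcs_lock *lock, struct mcs_node *me)
{
    struct mcs_node *next = atomic_load(&me->next);
    if (next == NULL) {
        struct mcs_node *expected = me;
        /* No visible successor: try to mark the lock free. */
        if (atomic_compare_exchange_strong(&lock->tail, &expected, NULL))
            return;
        /* A successor swapped the tail but has not linked itself yet. */
        while ((next = atomic_load(&me->next)) == NULL)
            ;
    }
    atomic_store(&next->locked, true); /* hand the lock to the successor */
}
```

Note the second loop in mcs_release: the lock holder waits until its successor has written the "next" pointer. If a successor links itself to the wrong node, that wait never ends, which is the shape of the hang described in this post.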

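When a CPU enters the slow path for a contended lock B, it first claims one of its per-CPU queue nodes by incrementing a counter (id = qnodesp->count++). Then, it *should* record lock B in that node’s lock field (nodes[id].lock = B).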

But if an interrupt occurs after the counter is incremented but *before* the node’s lock is set, and if that interrupt handler tries to spin on another lock, things go wrong.

Why? Another CPU could now see "stale" data in the queue, and could even write to the wrong node’s "next" pointer, which breaks the queue logic. That leaves the CPU at the head of the queue waiting forever.

Let’s zoom into the problematic region in simplified code:

    // Buggy sequence:
    unsigned int id = qnodesp->count++;
    // <-- INTERRUPT lands here! -->
    qnodes[id].lock = lockptr;

If another CPU reads qnodes[id].lock before it’s properly set, it can confuse which node is the real queue entry. Then, it may update the wrong "next" pointer.
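The confusion can be replayed deterministically in a small single-threaded model. This is a sketch under stated assumptions, not kernel code: struct sim_cpu, find_tail_node, and replay_race are hypothetical names, MAX_NODES is an arbitrary size, and the lookup is assumed to scan a CPU’s nodes for the first matching lock pointer.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_NODES 4   /* assumed per-CPU node count for this model */

struct sim_node { const void *lock; };
struct sim_cpu  { int count; struct sim_node nodes[MAX_NODES]; };

/* A waiter on another CPU looks up the predecessor's node for `lock`. */
static int find_tail_node(const struct sim_cpu *prev, const void *lock)
{
    for (int i = 0; i < MAX_NODES; i++)
        if (prev->nodes[i].lock == lock)
            return i;          /* first match wins, stale or not */
    return -1;
}

/* Replays the interleaving; returns the node index a waiter on A picks. */
static int replay_race(void)
{
    static int A;              /* stand-in for lock A; only its address matters */
    struct sim_cpu cpu0 = { 0 };

    /* Earlier: CPU0 queued for A in node 0, then released the node
     * (count back to 0) without clearing the lock field. */
    cpu0.nodes[0].lock = &A;

    /* spin_lock(B): node 0 is claimed ... */
    int id = cpu0.count++;
    assert(id == 0);
    /* ... and the interrupt fires before nodes[0].lock = B is written. */

    /* Interrupt handler: spin_lock_irqsave(A) claims node 1. */
    int irq_id = cpu0.count++;
    cpu0.nodes[irq_id].lock = &A;

    /* CPU1 queues behind CPU0 for A and searches CPU0's nodes. */
    return find_tail_node(&cpu0, &A);
}
```

In this model replay_race() returns 0 even though the live waiter for A sits in node 1, so a successor would set the wrong node’s "next" pointer.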

Reproducing the Deadlock

You can stress your system like the kernel devs did using the stress-ng tool:

    stress-ng --all 128 --vm-bytes 80% --aggressive \
        --maximize --oomable --verify --syslog \
        --metrics --times --timeout 5m

On affected systems (such as a 16-core PowerPC LPAR), you might see a hard lockup, traced to something like this:

    watchdog: CPU 15 Hard LOCKUP
    NIP [c000000000b78f4] queued_spin_lock_slowpath+0x1184/0x1490
    LR [c000000001037c5c] _raw_spin_lock+0x6c/0x90
    Call Trace:
    _raw_spin_lock+0x6c/0x90
    raw_spin_rq_lock_nested.part.135+0x4c/0xd0
    sched_ttwu_pending+0x60/0x1f0
    ...

This is a typical symptom of two threads spinning forever in the kernel, waiting for a "next" pointer that will never be set.

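Let’s visualize the key events in a simplified diagram:

    CPU0                                      CPU1
    ----                                      ----
    spin_lock_irqsave(A)
    spin_unlock_irqrestore(A)
      // nodes[0].lock still holds the stale value A
    spin_lock(B)
        |
    id = qnodesp->count++          // id == 0
        |   // <-- INTERRUPT fires here, before nodes[0].lock = B
    spin_lock_irqsave(A)  (via interrupt)
    id = qnodesp->count++          // id == 1
    nodes[1].lock = A
                                              spin_lock_irqsave(A)
                                              // scans CPU0's nodes, matches the stale
                                              // nodes[0].lock == A, and writes
                                              // nodes[0].next instead of nodes[1].next

    // CPU0 now waits for nodes[1].next, which is never set, while CPU1
    // waits for a handoff that never comes: wrong queueing and deadlock.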

Can this bug be exploited for privilege escalation?

Not as far as is known: this is a *denial-of-service* issue. The race lives entirely inside the kernel’s lock slow path, so an attacker cannot call it directly, but a local user may be able to provoke it indirectly by generating heavy lock contention (for example, with syscall-heavy workloads such as futex contention under load). On a vulnerable kernel, hitting the window hangs the affected CPUs and can freeze the whole machine.

While no remote exploit has been demonstrated, local DoS is very realistic. If untrusted users have access, you must patch!

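Here’s a sample C code snippet (not a full PoC, but sketching the idea). It is written as a kernel-thread body, because spin_lock() is a kernel API and cannot be called from userspace:

    #include <linux/delay.h>
    #include <linux/kthread.h>
    #include <linux/spinlock.h>

    // This sketch only generates contention; it does not force the race
    static int lock_thread(void *arg)
    {
        spinlock_t *lock = arg;

        while (!kthread_should_stop()) {
            spin_lock(lock);       // Contended acquisitions enter the slow path
            spin_unlock(lock);
            usleep_range(10, 100); // Brief pause between attempts
        }
        return 0;
    }

Imagine dozens of these running across many CPU cores while interrupt handlers take contended locks too. stress-ng creates comparable pressure from userspace through heavy syscall load.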

The Fix

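The upstream kernel patch closes the window from the release side: when a CPU gives a queue node back, it now clears the node’s "lock" field before decrementing the count. A free slot therefore never carries a stale lock pointer, so an interrupt that lands between the count increment and the lock assignment can no longer cause a false match.

Fixed code illustration:

    // Release path: wipe the lock field before giving the node back
    node->lock = NULL;   // No stale lock pointer left in a free slot
    barrier();           // Keep the store ahead of the decrement
    qnodesp->count--;    // Now release the node

This keeps lookups by other CPUs correct, even if an interrupt lands inside the acquisition window.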
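A small userspace model helps show why stale lock values in free slots are the root of the problem. In this sketch, releasing a node clears its lock field before the count drops, so a later lookup can only match nodes that are really queued. It illustrates the idea only and is not the kernel diff; sim_cpu, claim_node, release_node, find_node_index, and replay_with_clearing are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

#define MAX_NODES 4   /* assumed per-CPU node count for this model */

struct sim_node { const void *lock; };
struct sim_cpu  { int count; struct sim_node nodes[MAX_NODES]; };

/* Claim the next free per-CPU node and return its index. */
static int claim_node(struct sim_cpu *cpu)
{
    assert(cpu->count < MAX_NODES);
    return cpu->count++;
}

/* Release the most recently claimed node, clearing its lock field first. */
static void release_node(struct sim_cpu *cpu)
{
    assert(cpu->count > 0);
    cpu->nodes[cpu->count - 1].lock = NULL;
    cpu->count--;
}

/* Lookup done by a waiter on another CPU: first node matching `lock`. */
static int find_node_index(const struct sim_cpu *cpu, const void *lock)
{
    for (int i = 0; i < MAX_NODES; i++)
        if (cpu->nodes[i].lock == lock)
            return i;
    return -1;
}

/* Replays the same interleaving as the bug, with clearing on release. */
static int replay_with_clearing(void)
{
    static int A;                  /* stand-in for lock A */
    struct sim_cpu cpu0 = { 0 };

    int id = claim_node(&cpu0);    /* earlier acquisition of A uses node 0 */
    cpu0.nodes[id].lock = &A;
    release_node(&cpu0);           /* node 0 freed, lock field cleared */

    id = claim_node(&cpu0);        /* spin_lock(B) claims node 0 ... */
    /* ... interrupt fires before the lock field is written. */
    int irq_id = claim_node(&cpu0);
    cpu0.nodes[irq_id].lock = &A;  /* handler queues for A in node 1 */

    return find_node_index(&cpu0, &A);
}
```

Here replay_with_clearing() returns 1: the lookup skips the half-initialized node 0 and finds the node that is actually waiting for A.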

References & Further Reading

- CVE-2024-46797 at NVD

- Linux Kernel Patch Commit

- stress-ng stress tool

- Linux kernel qspinlock documentation

Conclusion

CVE-2024-46797 shows how a tiny race in lock bookkeeping could bring a whole PowerPC system to a halt under load. The race window is narrow, but sustained lock contention on large multi-core systems makes it reachable, and it can be abused for a complete local denial of service.

The fix is small: make sure a queue node never exposes stale lock data to other CPUs. The patch has been merged into mainline and backported to the affected stable kernel series.

Bottom line: If you’re on PowerPC and run heavy workloads, make sure you’re up to date!

*If you need more technical info, the Linux Kernel Mailing List thread about the patch is equally enlightening.*

Timeline

Published on: 09/18/2024 08:15:06 UTC

Last modified on: 09/20/2024 18:18:18 UTC