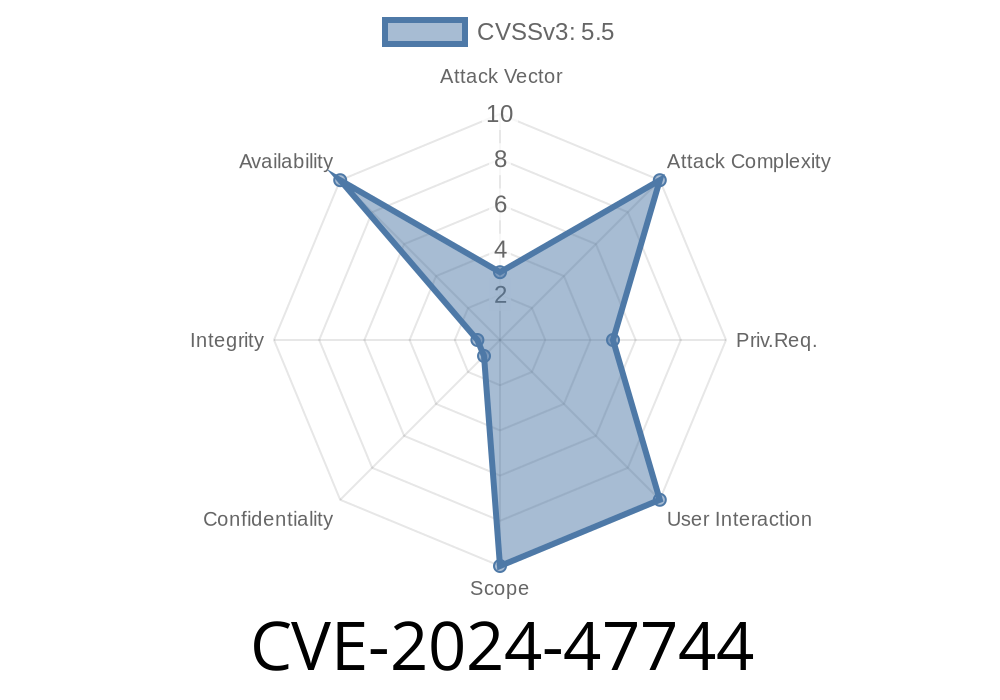

In June 2024, a deadlock vulnerability in the Linux kernel's KVM (Kernel-based Virtual Machine) module was patched. The issue, tracked as CVE-2024-47744, could leave the host deadlocked when a rare combination of lock acquisitions occurred, mostly during virtual machine management on x86 systems. Let's break down what this means, how it could be triggered, how it was fixed, and what the broader implications are.

*If you run VMs on Linux, or are curious about how low-level kernel bugs get handled, read on.*

What Is the Problem?

The Linux kernel's KVM module lets you run virtual machines efficiently. Like all code handling hardware and concurrency, KVM uses a web of locks (*mutexes*, *SRCU*, *read-write semaphores*) to coordinate access to shared data.

In this specific case, various locks in KVM could be taken in such an order that it was possible—but quite unlikely—for different CPUs to end up waiting for each other in a circle, causing the system to freeze or misbehave. This sort of "circular locking dependency" is a classic deadlock.

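Here's a simplified view of how something could go wrong:

| Step | CPU0 | CPU1 | CPU2 |
|------|------|------|------|
| 1 | lock(kvm->slots_lock) | | |
| 2 | | | lock(vcpu->mutex) |
| 3 | | | lock(kvm->srcu) |
| 4 | | lock(cpu_hotplug_lock) | |
| 5 | | lock(kvm_lock) | |
| 6 | | lock(kvm->slots_lock) | |
| 7 | | | lock(cpu_hotplug_lock) |
| 8 | sync(kvm->srcu) | | |

At step 6, CPU1 waits for the slots_lock that CPU0 took at step 1. At step 8, CPU0 waits for the SRCU read-side section that CPU2 entered at step 3. At step 7, CPU2 waits for the cpu_hotplug_lock that CPU1 took at step 4 (this only blocks if a writer is queued in between, because read-write semaphores are fair). Nobody can make progress.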

The above pattern could happen during rare configuration changes, especially on old CPUs.
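To make the circular wait concrete, here is a minimal user-space sketch that models it as a wait-for graph. It assumes one reading of the sequence above: CPU1 is blocked behind CPU0, CPU0 behind CPU2, and CPU2 behind CPU1. The function name `has_wait_cycle` and the array layout are hypothetical and have nothing to do with kernel code.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define NO_WAIT (-1)

/*
 * waits_on[i] is the index of the CPU that CPU i is blocked behind,
 * or NO_WAIT if CPU i can make progress.
 * Returns true if some CPU's chain of waits leads back to itself.
 */
bool has_wait_cycle(const int *waits_on, size_t n)
{
    for (size_t start = 0; start < n; start++) {
        int cur = waits_on[start];

        /* A cycle through `start` must come back within n hops. */
        for (size_t hops = 0; hops < n && cur != NO_WAIT; hops++) {
            assert(cur >= 0 && (size_t)cur < n);
            if ((size_t)cur == start)
                return true;
            cur = waits_on[cur];
        }
    }
    return false;
}
```

With `int waits_on[3] = {2, 0, 1}` (CPU0 behind CPU2, CPU1 behind CPU0, CPU2 behind CPU1) the function reports a cycle. Replace any one entry with `NO_WAIT` and the cycle disappears, which is why breaking a single link in the chain is enough to remove the deadlock.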

How Could It Happen?

Although the circumstances are rare (do not panic if you’re using KVM!), there are sequences involving changing virtual machine settings or interacting with CPU frequency ("cpufreq") notifiers that could provoke this deadlock.

The technical root:

kvm_usage_count was guarded by kvm_lock, a global lock that also takes part in other lock chains (the report below shows it held while kvm->slots_lock is acquired). If multiple CPUs/threads entered certain functions and grabbed locks in unlucky orders, they could end up stuck, each waiting for another.

An abbreviated version of the kernel lockdep report (“splat”) illustrates the issue:

    WARNING: possible circular locking dependency detected

    task is trying to acquire lock:
     (&kvm->slots_lock)

    but task is already holding lock:
     (kvm_lock)

    which lock already depends on the new lock.

This means: The code had arranged its lock-taking such that one lock is grabbed while holding another, but in some other code path the reverse order was allowed—a *classic deadlock recipe*.
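The idea behind such a report can be sketched in a few lines of user-space C. This is a toy order tracker, not how lockdep is implemented: it records "took B while holding A" edges and refuses any edge that would close a cycle. All names (`lock_graph`, `record_acquire`, the `LOCK_*` ids) are hypothetical, and the edges in the usage note are illustrative rather than the real KVM dependency chain.

```c
#include <assert.h>
#include <stdbool.h>
#include <string.h>

#define MAX_LOCKS 8

/* Illustrative lock ids; not kernel identifiers. */
enum { LOCK_KVM, LOCK_SLOTS, LOCK_SRCU };

/* order[a][b] is true once some path took lock b while holding lock a. */
struct lock_graph {
    bool order[MAX_LOCKS][MAX_LOCKS];
};

void lock_graph_init(struct lock_graph *g)
{
    memset(g, 0, sizeof(*g));
}

/* Depth-first search: is `to` reachable from `from` via recorded edges? */
static bool reachable(const struct lock_graph *g, int from, int to, bool *seen)
{
    if (from == to)
        return true;
    seen[from] = true;
    for (int next = 0; next < MAX_LOCKS; next++) {
        if (g->order[from][next] && !seen[next] &&
            reachable(g, next, to, seen))
            return true;
    }
    return false;
}

/*
 * Record "acquired `taken` while holding `held`".
 * Returns false (and records nothing) if the new edge would close a cycle,
 * i.e. if `held` is already reachable from `taken`.
 */
bool record_acquire(struct lock_graph *g, int held, int taken)
{
    bool seen[MAX_LOCKS] = { false };

    assert(held >= 0 && held < MAX_LOCKS);
    assert(taken >= 0 && taken < MAX_LOCKS);
    if (reachable(g, taken, held, seen))
        return false;
    g->order[held][taken] = true;
    return true;
}
```

After recording `LOCK_KVM -> LOCK_SLOTS` and `LOCK_SLOTS -> LOCK_SRCU`, a call to `record_acquire(&g, LOCK_SRCU, LOCK_KVM)` returns false: the reverse order has already been observed on another path, which is the situation the warning above describes. Note that no deadlock has to actually occur for the inversion to be detected.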

Was This Exploitable?

No reliable exploit has been seen in the wild.

- To trigger this, an attacker or process would need to control VM configuration in a very precise way, possibly while also manipulating CPU hotplug or old cpufreq notifiers.

- On modern CPUs, the most dangerous path (cpufreq notifier) isn’t even commonly used.

- Even then, deadlock isn't code execution or privilege escalation: it’s a denial-of-service, and only if you can nudge the timing just right.


How Did the Kernel Team Fix It?

The fix, merged upstream (see the patch commit listed under Further Reading and References), was simple and elegant:

- They introduced a dedicated mutex just for handling kvm_usage_count, instead of piggybacking on the shared lock.

- This reduces the chances that unrelated lock paths will interact, eliminating the observed potential for deadlock.

Relevant code snippet (a simplified sketch of the idea, not the literal upstream diff; the helper names are illustrative):

    /* New dedicated lock: guards kvm_usage_count and nothing else. */
    static DEFINE_MUTEX(kvm_usage_lock);
    static int kvm_usage_count;

    static void increment_kvm_usage_count(void)
    {
        mutex_lock(&kvm_usage_lock);
        kvm_usage_count++;
        mutex_unlock(&kvm_usage_lock);
    }

    static void decrement_kvm_usage_count(void)
    {
        mutex_lock(&kvm_usage_lock);
        kvm_usage_count--;
        mutex_unlock(&kvm_usage_lock);
    }

Before:

kvm_usage_count was protected by kvm_lock, a lock shared with unrelated VM-management paths, so the counter inherited that lock's ordering constraints.

After:

It’s cleanly separated; taking kvm_usage_lock does not interfere with VM slot operations or other global locks.
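The same separation can be tried out in user space with POSIX threads. The sketch below is an analogy under stated assumptions, not kernel code: `usage_lock`, `usage_count`, `usage_get`, `usage_put`, and `run_workers` are hypothetical names, and a pthread mutex stands in for the kernel mutex. The point is that the counter's critical sections touch only their own lock, so they cannot be drawn into another lock's ordering chain.

```c
#include <assert.h>
#include <pthread.h>

#define MAX_WORKERS 16
#define ITERATIONS  100000

/* Hypothetical names: a lock that guards usage_count and nothing else. */
static pthread_mutex_t usage_lock = PTHREAD_MUTEX_INITIALIZER;
static int usage_count;

static void usage_get(void)
{
    pthread_mutex_lock(&usage_lock);
    usage_count++;
    pthread_mutex_unlock(&usage_lock);
}

static void usage_put(void)
{
    pthread_mutex_lock(&usage_lock);
    assert(usage_count > 0);   /* every put must pair with an earlier get */
    usage_count--;
    pthread_mutex_unlock(&usage_lock);
}

static void *worker(void *arg)
{
    (void)arg;
    for (int i = 0; i < ITERATIONS; i++) {
        usage_get();
        usage_put();
    }
    return NULL;
}

/*
 * Starts `nthreads` workers, waits for them, and returns the final count.
 * Returns -1 if `nthreads` is out of range or a thread could not be created.
 */
int run_workers(int nthreads)
{
    pthread_t tid[MAX_WORKERS];
    int started = 0, result;

    if (nthreads < 1 || nthreads > MAX_WORKERS)
        return -1;
    while (started < nthreads &&
           pthread_create(&tid[started], NULL, worker, NULL) == 0)
        started++;
    for (int i = 0; i < started; i++)
        pthread_join(tid[i], NULL);

    pthread_mutex_lock(&usage_lock);
    result = (started == nthreads) ? usage_count : -1;
    pthread_mutex_unlock(&usage_lock);
    return result;
}
```

Built with `-pthread`, `run_workers(4)` should return 0, because every increment is paired with a decrement under the same lock. No code path here ever holds `usage_lock` while taking a second lock, so the counter adds no edges to any lock-ordering graph.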

For security researchers:

- This bug, while not a privilege escalation, demonstrates how unintended lock interaction in the kernel can have real-world impact.

Further Reading and References

- Upstream Patch Commit

- Kernel Locking Documentation

- CVE-2024-47744 at NVD (*should be listed there soon*)

- About Kernel Deadlocks

- Exploiting it was highly impractical, but host stability could still be affected.

- With a small, focused patch adding a dedicated mutex, the kernel team closed the door on this locking issue.

If you handle KVM hosts, keep your kernel up-to-date!


Timeline

Published on: 10/21/2024 13:15:04 UTC

Last modified on: 12/19/2024 09:27:15 UTC