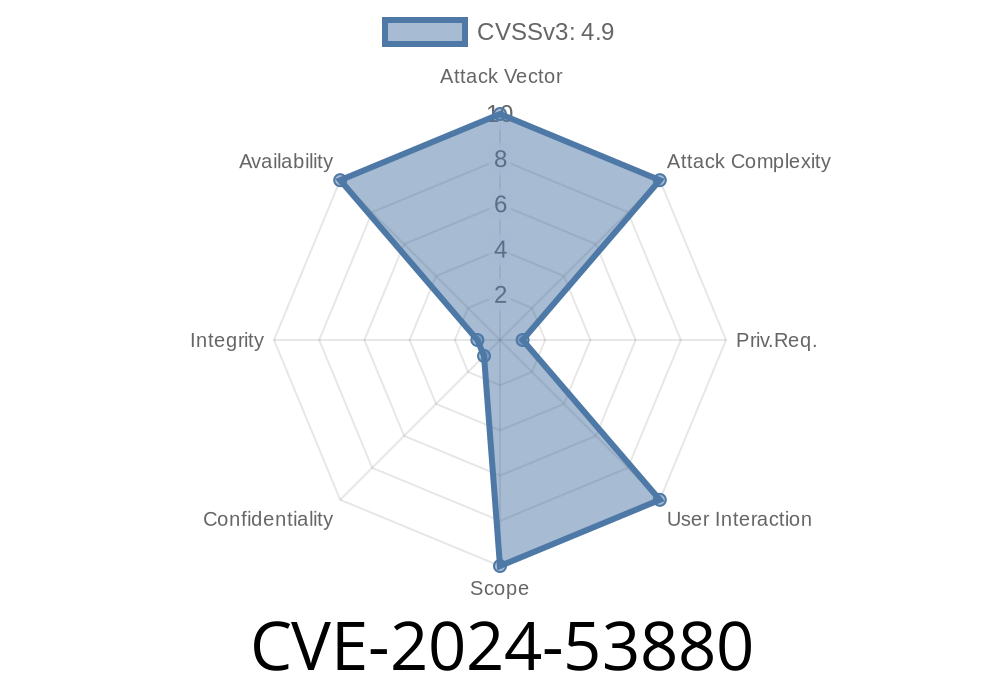

NVIDIA Triton Inference Server is widely used for deploying machine learning models at scale. Security researchers recently discovered a serious vulnerability tracked as CVE-2024-53880. This flaw lurks in the server's model loading API, where improper checks may allow a malicious actor to cause an integer overflow. With this exploit, an attacker could force a denial of service (DoS) on the server by giving it a model with a huge file size. In this article, we’ll walk through how CVE-2024-53880 works, show a code snippet to illustrate the bug, and provide key references.

What Is NVIDIA Triton Inference Server?

Before diving into the exploit, let’s quickly explain what the Triton Inference Server does. Developed by NVIDIA, this open-source software helps you serve and manage AI/ML models across CPUs and GPUs. You give it a model, and it responds to inference requests from web or client apps. Due to its popularity, security issues like CVE-2024-53880 can have a wide impact.

Understanding CVE-2024-53880

The vulnerability lies in how Triton processes the size of uploaded model files. If a user loads a model with an extremely large file size, an integer variable used for size calculation may wrap around or overflow. This can disrupt memory management and crash the server.

How Integer Overflow Happens

In C and C++ (Triton's core is written in C++), integers have fixed maximum values. If you add one too many to, say, an unsigned 32-bit number, it wraps around to zero; overflowing a signed integer is undefined behavior and in practice often produces a negative number. That's called integer overflow. Malicious actors can use this to fool programs into thinking a huge file is actually small, causing memory errors, server crashes, or even allowing further exploitation.
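To make the wraparound concrete, here is a minimal sketch of the two ways a large size can end up looking small: an unsigned addition that wraps, and a 64-bit value narrowed to 32 bits. The helper names `wrapping_add_u32` and `truncate_to_u32` are made up for illustration and are not part of Triton.

```cpp
#include <cstdint>
#include <limits>

// Unsigned arithmetic in C++ is defined to wrap modulo 2^32 for a 32-bit type.
constexpr std::uint32_t wrapping_add_u32(std::uint32_t a, std::uint32_t b) {
    return static_cast<std::uint32_t>(a + b);
}

// Narrowing a 64-bit size to 32 bits keeps only the low 32 bits.
constexpr std::uint32_t truncate_to_u32(std::uint64_t size) {
    return static_cast<std::uint32_t>(size);
}

// The largest 32-bit value plus one wraps around to zero.
static_assert(
    wrapping_add_u32(std::numeric_limits<std::uint32_t>::max(), 1u) == 0u,
    "max + 1 wraps to zero");

// A size of 4 GiB + 16 bytes looks like 16 bytes after truncation.
static_assert(truncate_to_u32(0x100000010ULL) == 0x10u,
              "high bits are silently dropped");
```

Because both helpers are `constexpr`, the `static_assert` lines verify the behavior at compile time, so nothing needs to run to see the effect.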

Below is simplified C++-style pseudocode that shows how such a bug can happen:

// Vulnerable snippet: model_loader.cpp (hypothetical)
size_t model_size = get_file_size(model_path);

// Let's say model_size is read from a file header that an attacker can modify
char* buffer = static_cast<char*>(malloc(model_size));
if (!buffer) {
    // Handle memory allocation error
}

// No check that model_size matches the data actually available
fread(buffer, 1, model_size, model_file);

If model_size is set to a huge value (for instance, 0xFFFFFFFF), any size arithmetic done on it in a 32-bit type, such as adding a header length, may wrap to zero or a small number. The server could then try to allocate zero bytes or fail unpredictably. If the allocation succeeds but is smaller than the data that follows, fread writes past the end of the buffer, which typically ends in a crash or segmentation fault.

How an Attacker Can Exploit CVE-2024-53880

1. Craft a fake model file whose header or metadata declares a massive size (at or near the largest value a 32- or 64-bit integer can hold).

2. Submit the model to the server through the model loading API.

3. When the server tries to allocate memory, the overflowed size variable results in a too-small allocation.

4. Subsequent operations read or write outside the allocated buffer, causing a crash or denial of service.
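The steps above can be sketched without touching any memory by comparing the number of bytes a loader would reserve with the number it would then copy. Everything here is an assumption made for illustration: `LoadPlan`, `plan_load`, `overruns_buffer`, and the 64-byte `kHeaderBytes` overhead do not come from Triton's source.

```cpp
#include <cstdint>

// Hypothetical fixed overhead added to every allocation.
constexpr std::uint32_t kHeaderBytes = 64;

struct LoadPlan {
    std::uint32_t allocated;  // bytes the loader would reserve
    std::uint32_t copied;     // bytes the loader would then copy
};

// Trusts the declared size and computes the allocation in 32 bits,
// so a declared size close to the maximum wraps to a small number.
constexpr LoadPlan plan_load(std::uint32_t declared_size) {
    return LoadPlan{
        static_cast<std::uint32_t>(declared_size + kHeaderBytes),
        declared_size};
}

// True when the copy would run past the end of the allocation.
constexpr bool overruns_buffer(LoadPlan plan) {
    return plan.copied > plan.allocated;
}

// An ordinary model size fits comfortably.
static_assert(!overruns_buffer(plan_load(1024u)), "benign size is safe");

// A crafted size wraps: 0xFFFFFFF0 + 64 becomes 48 bytes.
static_assert(plan_load(0xFFFFFFF0u).allocated == 48u, "allocation wraps");
static_assert(overruns_buffer(plan_load(0xFFFFFFF0u)), "copy exceeds buffer");
```

The crafted case reserves 48 bytes while planning to copy roughly 4 GiB, which is the mismatch that steps 3 and 4 describe.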

What’s the Impact?

A successful exploit leads to a Denial of Service. The server will likely crash or become unstable, stopping it from serving model inference requests. For businesses using Triton in production, this could disrupt AI-powered applications until the server is restarted and the malicious model is deleted.

Fixes and Recommendations

- Upgrade Immediately: NVIDIA has released patches for Triton Inference Server. Update to the latest version as soon as possible.

- Sanitize Inputs: Ensure model file sizes and metadata are strictly validated. Don’t rely on user-controlled data for resource management.
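As one possible shape for the input validation recommended above, the sketch below rejects a model when its declared size disagrees with the size on disk, when adding overhead would overflow, or when the total exceeds a policy limit. The names `add_would_overflow` and `is_acceptable_size` and the 8 GiB `kMaxModelBytes` cap are illustrative assumptions, not NVIDIA's actual fix.

```cpp
#include <cstdint>
#include <limits>

// Hypothetical policy limit; a real deployment would choose its own.
constexpr std::uint64_t kMaxModelBytes = 8ULL * 1024 * 1024 * 1024;  // 8 GiB

// Checks for overflow before adding, instead of inspecting a wrapped result.
constexpr bool add_would_overflow(std::uint64_t a, std::uint64_t b) {
    return a > std::numeric_limits<std::uint64_t>::max() - b;
}

// Accepts a size only if it matches the file on disk, cannot overflow
// once overhead is added, and stays under the policy limit.
constexpr bool is_acceptable_size(std::uint64_t declared,
                                  std::uint64_t actual_on_disk,
                                  std::uint64_t overhead) {
    return declared == actual_on_disk
        && !add_would_overflow(declared, overhead)
        && declared + overhead <= kMaxModelBytes;
}

static_assert(is_acceptable_size(1024, 1024, 64), "consistent small model");
static_assert(!is_acceptable_size(4096, 1024, 64), "header disagrees with disk");
static_assert(
    !is_acceptable_size(std::numeric_limits<std::uint64_t>::max(),
                        std::numeric_limits<std::uint64_t>::max(), 64),
    "size that would overflow is rejected");
```

The overflow test runs before the addition because `&&` short-circuits, so `declared + overhead` is only evaluated when it is known not to wrap.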

Official References

- NVIDIA Security Bulletin - June 2024 (CVE-2024-53880 listing and patch info)

- National Vulnerability Database: CVE-2024-53880

- Triton Inference Server GitHub

Conclusion

CVE-2024-53880 is a reminder that even robust AI infrastructure software like Triton Inference Server can have low-level bugs with real consequences. By understanding how integer overflows work and applying immediate security patches, users can prevent attackers from crashing their inference servers.

Timeline

Published on: 02/12/2025 01:15:08 UTC