A critical race condition was recently patched in the Linux kernel’s net/smc subsystem, now assigned CVE-2024-56718. This vulnerability could lead to use-after-free scenarios and kernel panics when handling SMC (Shared Memory Communications) link downs. In this article, we’ll walk through what this bug means, where the danger is, how the kernel crash happens, and how it was fixed, all in clear, simple language.

What’s the Problem?

The SMC subsystem in Linux handles special, high-performance network communication. It uses "links" that belong to a link group, which the code refers to as lgr. When a network link goes down, a "work" item may be queued so that the cleanup runs later on a kernel worker thread.

Bug:

It was possible for the link-down work to be scheduled *before* the link group was freed, while the work itself ran *after* the group was already gone. The work function then accessed freed memory. A use-after-free like this is very dangerous: in kernel land it can cause panics, and it may be abusable for privilege escalation.

Here’s what the kernel crash looked like in real life

    list_del corruption. prev->next should be ffffb638c9cfe20, but was 000000000000000
    ------------[ cut here ]------------
    kernel BUG at lib/list_debug.c:51!
    invalid opcode: 0000 [#1] SMP NOPTI
    CPU: 6 PID: 978112 Comm: kworker/6:119
    ...
    Call Trace:
     rwsem_down_write_slowpath+0x17e/0x470
     smc_link_down_work+0x3c/0x60 [smc]
     process_one_work+0x1ac/0x350
     worker_thread+0x49/0x2f
     ? rescuer_thread+0x360/0x360
     kthread+0x118/0x140
     ? __kthread_bind_mask+0x60/0x60
     ret_from_fork+0x1f/0x30

What’s going on?

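The worker thread is running smc_link_down_work and, while taking a lock (the rwsem frame at the top of the trace), tries to remove an item from a linked list. The neighbouring list pointer no longer points back at that item, so the list debugging check in lib/list_debug.c stops the kernel. That is a textbook symptom of use-after-free: the memory holding the list was released while the work was still pending. It is also the kind of bug an attacker with enough control over timing may be able to trigger on purpose.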
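To make the "list_del corruption" message less mysterious, here is a minimal user-space sketch of the kind of consistency check that produces it. It is modeled on the kernel's list debugging idea, not copied from it: the function names `list_del_entry_valid`, `list_del_checked`, and `demo_list_corruption` are illustrative, and zeroing a neighbour's pointer stands in for memory that was freed and reused.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdio.h>

/* Minimal doubly linked list node, modeled on the kernel's struct list_head. */
struct list_head {
    struct list_head *next, *prev;
};

static void list_init(struct list_head *head)
{
    head->next = head;
    head->prev = head;
}

/* Insert entry right after head. */
static void list_add(struct list_head *entry, struct list_head *head)
{
    entry->next = head->next;
    entry->prev = head;
    head->next->prev = entry;
    head->next = entry;
}

/* Returns true only if both neighbours still point back at entry. */
static bool list_del_entry_valid(const struct list_head *entry)
{
    const struct list_head *prev = entry->prev;
    const struct list_head *next = entry->next;

    if (prev->next != entry) {
        fprintf(stderr,
                "list_del corruption. prev->next should be %p, but was %p\n",
                (const void *)entry, (const void *)prev->next);
        return false;
    }
    if (next->prev != entry) {
        fprintf(stderr,
                "list_del corruption. next->prev should be %p, but was %p\n",
                (const void *)entry, (const void *)next->prev);
        return false;
    }
    return true;
}

/* Unlinks entry if the list is consistent; reports failure otherwise. */
static bool list_del_checked(struct list_head *entry)
{
    if (!list_del_entry_valid(entry))
        return false;
    entry->prev->next = entry->next;
    entry->next->prev = entry->prev;
    entry->next = entry->prev = NULL;
    return true;
}

/* A healthy delete succeeds; a delete next to a zeroed node is caught. */
static bool demo_list_corruption(void)
{
    struct list_head head, a, b;

    list_init(&head);
    list_add(&a, &head);                    /* head <-> a */
    list_add(&b, &head);                    /* head <-> b <-> a */
    bool ok_before = list_del_checked(&a);  /* list is intact */

    list_add(&a, &head);                    /* head <-> a <-> b */
    head.next = NULL;                       /* simulate the neighbour being zeroed */
    bool caught = !list_del_checked(&a);    /* head.next no longer points at a */

    return ok_before && caught;
}
```

Calling `demo_list_corruption()` from a small `main` prints one corruption line to stderr and returns true. The real kernel check calls BUG() instead of returning, which is why the crash log ends in a panic rather than a quiet error.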

Technical Root Cause

The problematic code (before the fix) essentially scheduled a "link down" work item but didn't make sure the lgr group it was referencing was still alive when that work would run.

Pseudocode style snippet

    // What happened, simplified (names are illustrative, not exact kernel symbols):
    schedule_work(&lgr->link_down_work);   // queue the work; no reference is taken

    // ... later, lgr might get freed BEFORE the queued work runs!

When the work ran, it touched a lgr pointer that was now pointing to freed memory—kaboom.
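Why does the work function touch the group at all? In this style of code the work item is usually embedded inside the object it belongs to, and the callback recovers the object from the work pointer. The sketch below shows that pattern in user space. Everything in it is a stand-in chosen for illustration (`struct work`, `struct group`, `link_down_fn`, `demo_container_of`); only the `container_of` idea itself mirrors what kernel code does.

```c
#include <stdbool.h>
#include <stddef.h>

/* Stand-in for the kernel's struct work_struct: just a callback pointer. */
struct work {
    void (*fn)(struct work *);
};

/* Hypothetical object that embeds its own work item. */
struct group {
    int id;
    int links_down;
    struct work link_down_work;
};

/* Recover the enclosing structure from a pointer to one of its members. */
#define container_of(ptr, type, member) \
    ((type *)((char *)(ptr) - offsetof(type, member)))

static void link_down_fn(struct work *w)
{
    /* The callback only receives the work pointer. Everything else is
     * reached through the enclosing group, so the group must still exist
     * when this runs. */
    struct group *g = container_of(w, struct group, link_down_work);

    g->links_down++;
}

static bool demo_container_of(void)
{
    struct group g = { .id = 7, .links_down = 0 };

    g.link_down_work.fn = link_down_fn;

    struct work *queued = &g.link_down_work;  /* what a work queue would store */
    queued->fn(queued);                       /* what a worker would call later */

    return container_of(queued, struct group, link_down_work) == &g
        && g.links_down == 1;
}
```

Because the queue stores a pointer into the middle of the object, freeing the object while the work is still queued leaves the queue holding a dangling pointer. Nothing in the queue itself can detect that, which is why the lifetime has to be managed by whoever schedules the work.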

The Exploit Potential

A clever local attacker (for example, any ordinary user on the server) could potentially:

- Race the system: trigger SMC links to be created and destroyed in quick succession.

- Cause a kernel panic (DoS).

- Or, if the attacker manages to rig the memory layout, get code execution as root (the golden ticket in kernel exploitation).

How was this fixed?

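The solution uses a reference counting pattern. Before scheduling the work, the code takes a reference on the object the work depends on ("hold"). After the work has finished or been canceled, it drops that reference ("put"). The memory is released only when the last reference is dropped.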

In code (simplified)

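    // Before scheduling work (get_lgr_ref/put_lgr_ref are simplified placeholder names):
    get_lgr_ref(lgr);                            // take a reference for the pending work
    if (!schedule_work(&lgr->link_down_work))
        put_lgr_ref(lgr);                        // work was already queued: drop the extra reference

    // In the work function:
    void smc_link_down_work(struct work_struct *work) {
        // lgr is recovered from the work item embedded in it
        ... do work ...
        put_lgr_ref(lgr);   // drop the work's reference. Freed ONLY when the last reference drops!
    }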

This way, the lgr memory is always alive for the duration of any scheduled work.
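The hold/put pattern is easy to try outside the kernel. The following is a minimal single-threaded sketch, assuming a one-slot stand-in for a work queue. All names (`struct group`, `group_hold`, `group_put`, `schedule_link_down`, `run_pending`, `demo_refcount`) are hypothetical, and the plain `int` counter is only acceptable because nothing here runs concurrently; kernel code would use an atomic reference count.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>
#include <stdlib.h>

/* Hypothetical stand-in for a link group; not the kernel's structure. */
struct group {
    int refs;        /* plain int: this demo is single-threaded */
    int links_down;  /* state the deferred work updates */
    bool *freed;     /* lets the demo observe the moment of release */
};

static struct group *pending;   /* one-slot stand-in for a work queue */

static struct group *group_new(bool *freed)
{
    struct group *g = calloc(1, sizeof(*g));

    assert(g != NULL);
    g->refs = 1;                /* the creator owns the first reference */
    g->freed = freed;
    return g;
}

static void group_hold(struct group *g)
{
    g->refs++;
}

static void group_put(struct group *g)
{
    if (--g->refs == 0) {       /* last reference gone: release the memory */
        *g->freed = true;
        free(g);
    }
}

/* Queue the work and take a reference on behalf of it. */
static bool schedule_link_down(struct group *g)
{
    if (pending != NULL)
        return false;           /* slot busy: no reference is taken */
    group_hold(g);
    pending = g;
    return true;
}

/* Run the queued work, then drop the reference taken when it was queued. */
static void run_pending(void)
{
    struct group *g = pending;

    if (g == NULL)
        return;
    pending = NULL;
    g->links_down++;            /* safe: the work's reference keeps g alive */
    group_put(g);
}

static bool demo_refcount(void)
{
    bool freed = false;
    struct group *g = group_new(&freed);

    bool queued = schedule_link_down(g);
    group_put(g);                        /* owner tears the group down early */
    bool alive_while_pending = !freed;   /* the work's reference kept it alive */

    run_pending();                       /* work runs late, then drops its ref */
    return queued && alive_while_pending && freed;
}
```

In `demo_refcount()`, the owner drops its reference while the work is still queued, yet the group is released only after `run_pending()` has finished with it. Removing the `group_hold()` call in `schedule_link_down()` recreates the original bug: the owner's put would free the group and the queued work would then touch freed memory.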

Upstream Linux kernel commit:

net/smc: protect link down work from execute after lgr freed (kernel.org)

SMC subsystem documentation:

SMC Protocol in Linux Kernel docs

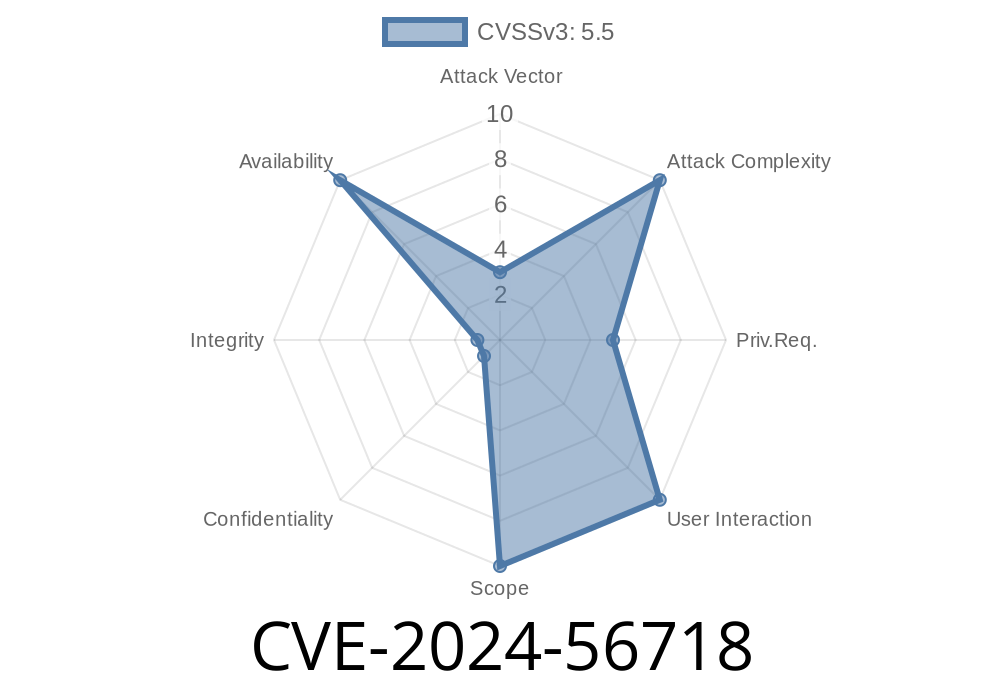

CVE entry:

NVD CVE-2024-56718 (nist.gov) (*pending update*)

Linux Kernel Security Mailing List:

Similar use-after-free exploits in the wild:

How Use-After-Free bugs are exploited in kernels

Conclusion and Advice

If you’re running a Linux system with SMC enabled, it’s essential to apply kernel updates containing this patch! This race condition is a textbook kernel bug with potentially severe impact.
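One quick way to see whether the SMC code is loaded as a module is to look for `smc` in /proc/modules (the crash log above shows the module name as `[smc]`). The sketch below does that check in C. The helper names `module_listed` and `smc_module_loaded` are made up for this example, and the check has a known blind spot: a kernel with SMC built in rather than built as a module will not list it there, so a negative result is not proof that the code is absent.

```c
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

/* Returns true if some line of a /proc/modules style stream names the module.
 * Each line starts with the module name followed by a space. Lines longer
 * than the buffer are not handled specially; real entries are short. */
static bool module_listed(FILE *modules, const char *name)
{
    char line[512];
    size_t len = strlen(name);

    while (fgets(line, sizeof(line), modules) != NULL) {
        /* Require the space so that "smc" does not match "smc_diag". */
        if (strncmp(line, name, len) == 0 && line[len] == ' ')
            return true;
    }
    return false;
}

/* Convenience wrapper for the live system; returns false if the file
 * cannot be opened (for example, on a non-Linux machine). */
static bool smc_module_loaded(void)
{
    FILE *f = fopen("/proc/modules", "r");

    if (f == NULL)
        return false;

    bool loaded = module_listed(f, "smc");

    fclose(f);
    return loaded;
}
```

If `smc_module_loaded()` returns true on an unpatched kernel, updating should be a priority. Either way, the distribution's security advisory for CVE-2024-56718 is the authoritative source on whether a given kernel package contains the fix.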

Summary Table

| CVE | Affected Area | Impact | Exploitability | Fixed in |
|-----------------|------------------|-----------------------|----------------------|---------------------|
| CVE-2024-56718 | net/smc (kernel) | DoS, possible privesc | Local (race) | v6.13-rc4 (mainline), with stable backports |

Timeline

Published on: 12/29/2024 09:15:07 UTC

Last modified on: 01/20/2025 06:26:48 UTC