Published: June 2024

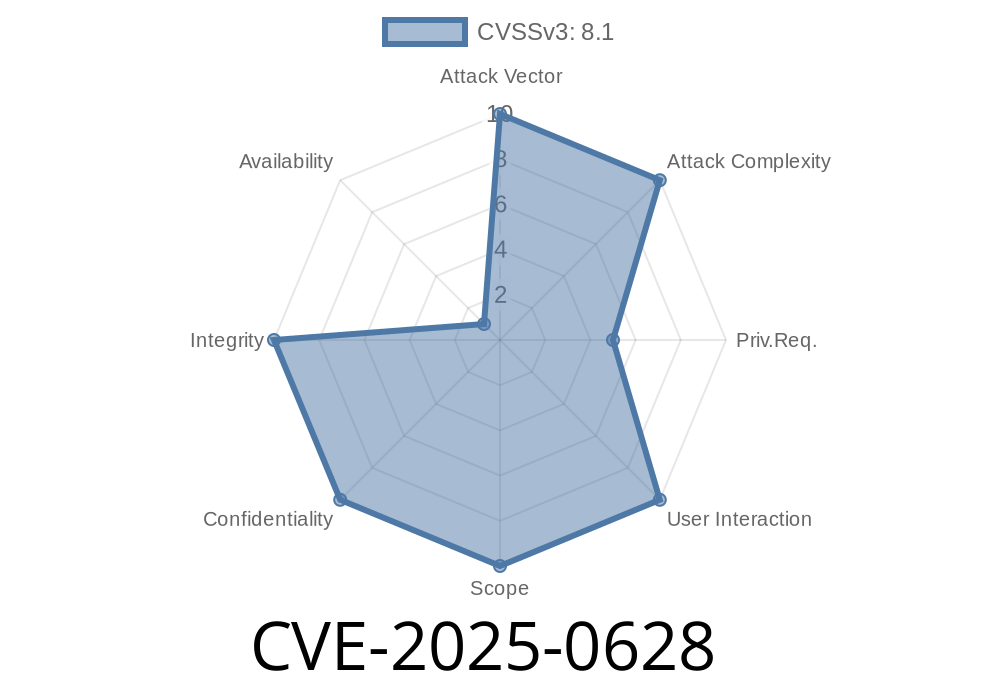

CVSS Score: 8.8 (High)

Summary:

A high-impact vulnerability, CVE-2025-0628, has been found in the main-latest version of BerriAI/litellm. The flaw lets users with basic privileges gain complete admin control because the login flow hands them an overprivileged API key. Here’s a deep dive into how it works, how to spot it, and what you should do to fix or avoid it.

## What Is BerriAI/litellm?

BerriAI/litellm is an open-source tool that simplifies and manages interactions with various Large Language Models (LLMs). Think of it as a gateway between your company’s app and popular LLMs like GPT-3.5, Claude, or Llama-2. Some SaaS platforms use it as a backend layer.

## Description

When a user with the role internal_user_viewer logs in, the application mistakenly gives them an API key that is way too powerful. Instead of a read-only key, users get an admin-level key. Anyone with this API key can access all admin functions, including:

- Listing all users (/users/list)

- Getting details on all users (/users/get_users)

- Escalating their own account to admin

This effectively means any internal viewer can act as a PROXY ADMIN.

## Where’s the Problem?

The vulnerable section comes down to how API keys are generated and assigned after login for users with the internal_user_viewer role.

Here's a simplified code snippet that demonstrates the vulnerable logic (for illustration; real code may differ):

```python
def login(user_role):
    if user_role == "internal_user_viewer":
        api_key = generate_api_key(privileges="admin")  # BUG: should be "viewer"
    else:
        api_key = generate_api_key(privileges=user_role)
    return api_key
```

The mistake? Instead of generating an API key *matching* the user's real permissions ("viewer"), the logic always assigns privileges="admin" for internal viewers.
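To make the effect concrete, here is a minimal self-contained sketch of the flawed logic. The `ApiKey` record, the stub `generate_api_key`, and the `is_overprivileged` helper are hypothetical stand-ins for illustration, not litellm's actual code.

```python
import secrets
from dataclasses import dataclass


@dataclass(frozen=True)
class ApiKey:
    """Hypothetical key record: a random token plus the privilege level it carries."""
    token: str
    privileges: str


def generate_api_key(privileges: str) -> ApiKey:
    # Stand-in for the real key generator (assumption for illustration).
    return ApiKey(token=secrets.token_hex(16), privileges=privileges)


def vulnerable_login(user_role: str) -> ApiKey:
    # Mirrors the simplified snippet above: viewers are hard-coded to "admin".
    if user_role == "internal_user_viewer":
        return generate_api_key(privileges="admin")  # BUG
    return generate_api_key(privileges=user_role)


def is_overprivileged(user_role: str, key: ApiKey) -> bool:
    # A key is overprivileged when it carries admin rights its owner's role lacks.
    return key.privileges == "admin" and user_role != "admin"


viewer_key = vulnerable_login("internal_user_viewer")
print(is_overprivileged("internal_user_viewer", viewer_key))  # True
```

The check at the end is the kind of assertion a unit test could make against real key issuance code: the privileges on an issued key should never exceed the role that requested it.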

Once logged in as an internal_user_viewer, an attacker can use the issued key to send authenticated requests to admin-only endpoints, such as:

- GET /users/list

- POST /users/add_user

- POST /users/make_admin

## Exploit Example Using curl

```shell
# Suppose you have the admin-level API key as 1234abc-overpriv
curl -H "Authorization: Bearer 1234abc-overpriv" \
  https://your-litellm-app.com/users/list
```

Or even add yourself as a proxy admin:

```shell
curl -X POST -H "Authorization: Bearer 1234abc-overpriv" \
  -H "Content-Type: application/json" \
  -d '{"user":"attacker", "role":"proxy_admin"}' \
  https://your-litellm-app.com/users/make_admin
```

With that level of access, an attacker can compromise apps and downstream services that rely on litellm for sensitive data or workflows.

In a worst-case scenario, your entire LLM deployment could be compromised and even disabled by a previously harmless viewer.

To check whether your deployment is affected:

- Try using a key issued to an internal_user_viewer to access admin endpoints as shown above.

- Audit the key issuance code and look for lines where all users, regardless of role, get admin keys.
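The manual check can be scripted. The sketch below is one possible approach using only the Python standard library; the endpoint list comes from this article, while the function names and the injectable `fetch` parameter are assumptions made so the logic can be exercised without a live server. Run it only against deployments you are authorized to test.

```python
import urllib.error
import urllib.request
from typing import Callable

# Endpoints named in this article; adjust to match your deployment (assumption).
ADMIN_ENDPOINTS = ["/users/list", "/users/get_users"]


def http_status(url: str, api_key: str) -> int:
    """Send a GET request with a Bearer token and return the HTTP status code."""
    req = urllib.request.Request(url, headers={"Authorization": f"Bearer {api_key}"})
    try:
        with urllib.request.urlopen(req, timeout=10) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        return err.code  # 401/403 and similar are returned as a status, not raised


def exposed_endpoints(
    base_url: str,
    viewer_key: str,
    fetch: Callable[[str, str], int] = http_status,
) -> list[str]:
    """Return the admin endpoints that answer 2xx to a viewer-level key."""
    exposed = []
    for path in ADMIN_ENDPOINTS:
        status = fetch(base_url.rstrip("/") + path, viewer_key)
        if 200 <= status < 300:
            exposed.append(path)
    return exposed
```

Calling `exposed_endpoints("https://your-litellm-app.com", viewer_key)` with a key issued to a viewer account should return an empty list on a correctly configured deployment; any path in the result means that key reached an admin endpoint.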

To fix the issue:

- Modify the API key issuance code so it's strictly tied to the authenticated user’s role:

```python
def login(user_role):
    api_key = generate_api_key(privileges=user_role)  # no hard-coded privilege
    return api_key
```

- Rotate all existing API keys.

- Monitor logs for suspicious usage patterns (especially use of /users/* endpoints).
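For the log monitoring step, a small script can flag successful `/users/*` calls made by non-admin roles. The log line format, the `ADMIN_ROLES` set, and the sample entries below are all assumptions for illustration; litellm's real log output will differ, so adapt the pattern to whatever your deployment emits.

```python
import re
from collections import Counter

# Hypothetical access-log format (assumption):
# "<timestamp> <METHOD> <path> role=<role> status=<code>"
LOG_LINE = re.compile(
    r"^(?P<ts>\S+) (?P<method>[A-Z]+) (?P<path>\S+) "
    r"role=(?P<role>\S+) status=(?P<status>\d{3})$"
)

# Roles treated as legitimate admins; adjust to your deployment (assumption).
ADMIN_ROLES = {"proxy_admin"}


def suspicious_user_calls(lines):
    """Count successful /users/* requests made by non-admin roles, per (role, path)."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.match(line.strip())
        if not match:
            continue  # skip lines that do not follow the assumed format
        is_users_path = match["path"].startswith("/users/")
        succeeded = match["status"].startswith("2")
        if is_users_path and succeeded and match["role"] not in ADMIN_ROLES:
            hits[(match["role"], match["path"])] += 1
    return hits


# Made-up sample entries in the assumed format.
sample = [
    "2025-03-20T10:15:53Z GET /users/list role=internal_user_viewer status=200",
    "2025-03-20T10:16:02Z GET /users/list role=proxy_admin status=200",
    "2025-03-20T10:16:10Z POST /users/make_admin role=internal_user_viewer status=403",
]
print(suspicious_user_calls(sample))
```

Only the first sample line is counted: the second comes from an admin role and the third was rejected with a 403. Rejected attempts may still be worth alerting on separately, since they can indicate probing.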

## Official References

- BerriAI/litellm on GitHub

- Security Advisory on GitHub (pending)

- NVD entry for CVE-2025-0628 (pending)

## Conclusion

CVE-2025-0628 is a textbook case of improper authorization, but with elevated risks thanks to overprivileged API keys. If you use BerriAI/litellm, patch your systems right away, issue new keys, and review your internal security policies.

Always ensure your API keys only allow what the user’s role says they should.
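One way to enforce that rule is an explicit role-to-privilege table with default-deny behavior, plus a regression check that runs in CI. This is a sketch under stated assumptions: `ROLE_PRIVILEGES`, `issue_key`, and `check_no_escalation` are hypothetical names, and the role list is illustrative rather than litellm's actual role model.

```python
import secrets

# Hypothetical role-to-privilege table; roles other than those named in this
# article are illustrative assumptions.
ROLE_PRIVILEGES = {
    "proxy_admin": "admin",
    "internal_user": "user",
    "internal_user_viewer": "viewer",
}


def issue_key(user_role: str) -> dict:
    """Issue a key whose privileges come only from the caller's own role."""
    try:
        privileges = ROLE_PRIVILEGES[user_role]
    except KeyError:
        # Default-deny: unknown roles get no key rather than a fallback privilege.
        raise PermissionError(f"unknown role: {user_role!r}") from None
    return {"token": secrets.token_hex(16), "privileges": privileges}


def check_no_escalation() -> None:
    """Regression check: only proxy_admin may ever receive admin privileges."""
    for role in ROLE_PRIVILEGES:
        key = issue_key(role)
        assert (key["privileges"] == "admin") == (role == "proxy_admin"), role


check_no_escalation()
```

Keeping the mapping in a single table means a privilege change is a one-line, reviewable diff, and the regression check fails as soon as any non-admin role is mapped to admin.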

*Stay tuned for updates or patch releases, and check the official BerriAI/litellm repo for the latest advisories.*

## Timeline

Published on: 03/20/2025 10:15:53 UTC