Summary:

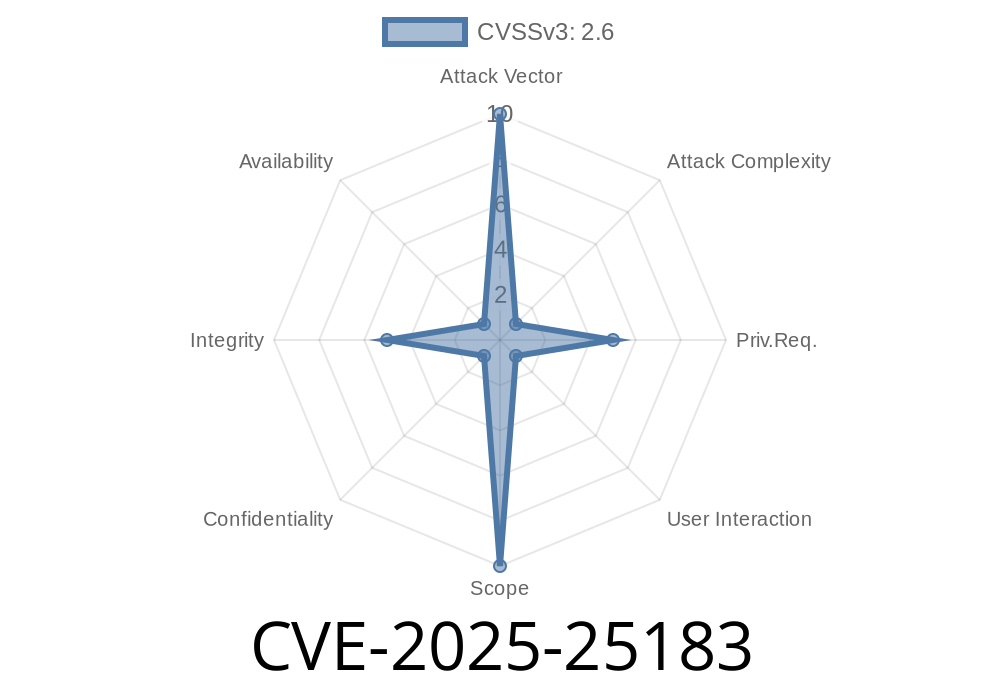

vLLM is a popular inference and serving engine used to run large language models (LLMs) efficiently. It supports advanced features like prefix caching to keep responses fast. A vulnerability tracked as CVE-2025-25183 shows how maliciously crafted input can exploit predictable behavior in Python’s built-in hash() to cause cache key collisions and interfere with AI responses.

In this post, we break down what happened, how the bug works, why Python changes play a part, and how real-world attackers could potentially mess with your deployment. We’ll also explain how to keep your LLM workloads safe.

What Is vLLM?

vLLM is a high-throughput, memory-efficient serving system for LLMs like GPT and LLaMA. With prefix caching, it keeps the computed state for previously seen prompt prefixes and reuses it when a later request starts with the same prefix, which speeds up repeated or similar requests.

What Goes Wrong?

vLLM’s prefix cache uses Python’s built-in hash() function to identify cache entries. As of Python 3.12, there’s a key change: hash(None) is now a predictable constant, identical in every process and on every machine. Cache keys that involve None can therefore be computed ahead of time, which makes it much easier for someone to *deliberately* trigger a hash collision between two unrelated prompts.
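To see why None can end up inside a cache key at all, here is a minimal sketch. It assumes a chained block-hash design in which each block of token IDs is keyed by its parent block's key plus its own tokens, so the first block has no parent. `BLOCK_SIZE` and `block_keys` are hypothetical names for illustration, not vLLM's actual API.

```python
from typing import Optional, Sequence

BLOCK_SIZE = 4  # hypothetical block size, kept small for illustration


def block_keys(token_ids: Sequence[int]) -> list[int]:
    """Return one cache key per full block, each chained to its parent key."""
    keys: list[int] = []
    parent: Optional[int] = None  # the first block has no parent
    full = len(token_ids) - len(token_ids) % BLOCK_SIZE
    for start in range(0, full, BLOCK_SIZE):
        block = tuple(token_ids[start:start + BLOCK_SIZE])
        # Built-in hash() over (parent, tokens): this is the risky part.
        parent = hash((parent, block))
        keys.append(parent)
    return keys


a = block_keys([1, 2, 3, 4, 5, 6, 7, 8])
b = block_keys([1, 2, 3, 4, 9, 9, 9, 9])
print("first block shared: ", a[0] == b[0])
print("second block shared:", a[1] == b[1])
```

Two prompts with the same first block get the same first key, which is exactly what makes prefix reuse work. In this sketch the first key depends on hash(None), so once that value is fixed, every key in the chain can be computed offline.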

What Could an Attacker Do?

If an attacker knows a prompt that a system is using (say, a frequently asked prompt at an AI-powered helpdesk), they could craft their own prompt that hashes to the same value. By submitting their own prompt first, they populate the cache. The next time the real user sends the legitimate prompt, the system will (incorrectly) reuse the attacker’s cached content, leading to strange or unintended behavior in responses.

There are *no* known workarounds. Users must upgrade to at least vLLM 0.7.2, which changes hash handling.

Here’s a simplified code snippet (similar to real code in vLLM) showing the vulnerable pattern:

# vulnerable_cache.py (simplified example of what vLLM did)

cache = {}

def run_llm_inference(prompt):
    # Placeholder for the real model call.
    return f"<model output for {prompt!r}>"

def get_cache_key(prompt):
    # The key is only the hash; the prompt itself is not stored.
    return hash(prompt)

def fetch_response(prompt):
    key = get_cache_key(prompt)
    if key in cache:
        # Possible cache hit with wrong prompt!
        return cache[key]
    response = run_llm_inference(prompt)
    cache[key] = response
    return response

If two different prompts hash to the same value, they share the same cache slot.
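One way to make a shared slot harmless is to keep the original input next to the cached value and compare it on every hit. The following is a minimal sketch of that idea, not the change vLLM shipped. `key_fn` and `run_inference` are hypothetical parameters added so a collision can be forced for demonstration.

```python
from typing import Callable

# Maps hash key -> (original prompt, cached response).
cache: dict[int, tuple[str, str]] = {}


def fetch_response(
    prompt: str,
    run_inference: Callable[[str], str],
    key_fn: Callable[[str], int] = hash,
) -> str:
    key = key_fn(prompt)
    entry = cache.get(key)
    # Only treat it as a hit when the stored prompt really matches.
    if entry is not None and entry[0] == prompt:
        return entry[1]
    response = run_inference(prompt)
    cache[key] = (prompt, response)
    return response


def fake_llm(prompt: str) -> str:
    return f"answer to {prompt!r}"


def always_collide(prompt: str) -> int:
    return 0  # worst case: every prompt maps to the same key


r1 = fetch_response("reset my password", fake_llm, key_fn=always_collide)
r2 = fetch_response("something else", fake_llm, key_fn=always_collide)
print(r1)
print(r2)
```

Even with every prompt colliding, each caller gets an answer computed for its own prompt. The cost is extra memory for the stored input and a comparison on each hit, which may be significant when the cached unit is a long token prefix.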

Since hash(None) is now a fixed constant in Python 3.12+ (4238894112, or 0xFCA86420, in CPython), an attacker can compute ahead of time any cache key that involves None and search offline for an input whose hash collides with a known target.

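Example collision search in Python 3.12+:

# Python 3.12+ interactive example

target = hash(None)  # the same value in every Python 3.12+ process
print(target)

# Brute-force search for a string with the same hash. This shows the idea
# only: a 64-bit match is very unlikely within 100,000 tries, and str hashes
# are salted per process unless PYTHONHASHSEED is fixed.
for i in range(100000):
    candidate = f"test{i}"
    if hash(candidate) == target:
        print("Collision found:", candidate)
        break
else:
    print("No collision found in this range")

If such an input is found, requests keyed on it and on None will share the same cache entry. In practice, a realistic search needs far more than 100,000 attempts and would target inputs whose hashes are the same in every process, such as integers and tuples of integers.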

Populate Cache:

Send the maliciously crafted prompt, causing the server to cache a bogus or attacker-controlled response.

Poisoned Cache:

When a real user sends the original, legitimate prompt, the system uses the attacker’s cached response, leading to wrong or manipulated outputs.
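The two steps above can be simulated end to end. To keep the search cheap, this sketch swaps in a deliberately weak 16-bit key (`weak_key`, a hypothetical helper) in place of Python's 64-bit hash(); the prompts and the fake inference function are also made up for illustration.

```python
import hashlib
from itertools import count


def weak_key(prompt: str) -> int:
    """Deliberately weak 16-bit key so a collision is cheap to find."""
    digest = hashlib.sha256(prompt.encode("utf-8")).digest()
    return int.from_bytes(digest[:2], "big")


cache: dict[int, str] = {}


def fetch_response(prompt: str) -> str:
    key = weak_key(prompt)
    if key in cache:
        return cache[key]  # no check that the cached entry matches the prompt
    response = f"answer to: {prompt}"  # stand-in for real inference
    cache[key] = response
    return response


target = "How do I reset my password?"
target_key = weak_key(target)

# Attacker searches offline for any prompt with the same key as the target.
evil = next(
    p for p in (f"attacker prompt {i}" for i in count())
    if weak_key(p) == target_key
)

fetch_response(evil)                    # populate the cache
victim_answer = fetch_response(target)  # victim receives the poisoned entry
print(victim_answer)
```

The victim's legitimate prompt returns the answer that was generated for the attacker's prompt. With a 64-bit key the search is vastly more expensive, but the mechanics of the poisoning are the same.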

Why Python 3.12 Made It Worse

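Prior to Python 3.12, hash(None) was derived from the object’s memory address, so it could differ between runs and between machines. Python 3.12 made hash(None) a fixed value. (String hashes are still randomized per process by default, but hashes of integers and of tuples of integers were always deterministic.)

As a result, attackers no longer need local or insider access, or repeated online tries, to learn the value: they can compute what they need in advance.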
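To check which hashes are stable on a given interpreter, one option is to evaluate them in fresh processes and compare. This sketch uses a hypothetical helper, `hash_in_fresh_process`, and sets `PYTHONHASHSEED` explicitly so the string case is reproducible.

```python
import os
import subprocess
import sys


def hash_in_fresh_process(expr: str, seed: str) -> int:
    """Evaluate hash(<expr>) in a new interpreter with the given PYTHONHASHSEED."""
    env = dict(os.environ, PYTHONHASHSEED=seed)
    result = subprocess.run(
        [sys.executable, "-c", f"print(hash({expr}))"],
        env=env,
        capture_output=True,
        text=True,
        check=True,
    )
    return int(result.stdout)


for expr in ("None", "(1, 2, 3)", "'hello'"):
    first = hash_in_fresh_process(expr, seed="1")
    second = hash_in_fresh_process(expr, seed="2")
    print(f"{expr:12} stable across processes: {first == second}")
```

On Python 3.12 and later, None and the integer tuple should both report as stable, while the string should not. On older versions the result for None depends on where the interpreter places the object in memory.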

Solution

Upgrade!

The vLLM maintainers patched this bug in vLLM 0.7.2 (see the release notes). The new version uses safer, less collision-prone keys for the cache. There are no workarounds. You must upgrade.

- Official Advisory
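A quick way to check an environment is to read the installed package version and compare it with the first fixed release. This is a rough sketch: `parse_release` and `is_patched` are hypothetical helpers, and the comparison looks only at the numeric release, so pre-release tags such as `rc1` are not distinguished from the final release.

```python
import re
from importlib import metadata

PATCHED = (0, 7, 2)  # first fixed release named in this post


def parse_release(version: str) -> tuple[int, int, int]:
    """Extract (major, minor, patch) from strings like '0.7.2.post1'."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    major, minor, patch = match.groups(default="0")
    return int(major), int(minor), int(patch)


def is_patched(version: str) -> bool:
    return parse_release(version) >= PATCHED


try:
    installed = metadata.version("vllm")
except metadata.PackageNotFoundError:
    print("vllm is not installed in this environment")
else:
    status = "patched" if is_patched(installed) else "VULNERABLE, upgrade"
    print(f"vllm {installed}: {status}")
```

Comparing integer tuples avoids the classic string-comparison mistake where "0.10.0" sorts before "0.7.2".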

Preventing Similar Bugs

If you’re designing your own cache, never use Python’s built-in hash() for persistent storage or anything accessible across process boundaries or machine restarts. It’s only designed for fast, short-lived lookups, and its collisions can be abused when input is attacker-controlled or public.
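As an illustration of the alternative, here is a minimal sketch that derives cache keys with SHA-256 from `hashlib` instead of the built-in hash(). The function `block_key` and its byte layout are assumptions made for this example, and are not necessarily how vLLM 0.7.2 addressed the issue.

```python
import hashlib
import struct
from typing import Optional, Sequence


def block_key(parent_key: Optional[bytes], token_ids: Sequence[int]) -> bytes:
    """Derive a 32-byte key from the parent key and this block's token IDs."""
    h = hashlib.sha256()
    # Use an explicit marker for "no parent" rather than hashing None.
    h.update(b"\x00" if parent_key is None else b"\x01" + parent_key)
    # Fixed-width little-endian encoding keeps token boundaries unambiguous.
    h.update(struct.pack(f"<{len(token_ids)}q", *token_ids))
    return h.digest()


root = block_key(None, [1, 2, 3, 4])
child = block_key(root, [5, 6, 7, 8])
print(root.hex())
print(child.hex())
```

A cryptographic digest is slower than hash(), but finding two inputs with the same SHA-256 digest is not computationally feasible, and the result does not depend on the Python version or process. Where an attacker should not be able to predict keys at all, a keyed construction such as `hmac` with a per-deployment secret is a further option.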

Conclusion

CVE-2025-25183 is a textbook example of how low-level details (like small language changes!) can lead to real, exploitable bugs in widely used tools like vLLM. If you’re running vLLM anywhere, especially with untrusted or public prompts, update to 0.7.2 or newer *now*.

Links and References

- vLLM Release 0.7.2

- Python 3.12 hash(None) Change

- Official CVE Advisory (TBA)

Timeline

Published on: 02/07/2025 20:15:34 UTC