CVE-2025-25188: Hickory DNS DNSSEC Trust Bypass, or How a Simple Verification Flaw Exposed DNS Clients to Spoofing

*[EXCLUSIVE: Deep-dive analysis.]*

Hickory DNS is a popular DNS library, client, server, and resolver written in Rust, favored for its speed and modern codebase. But…

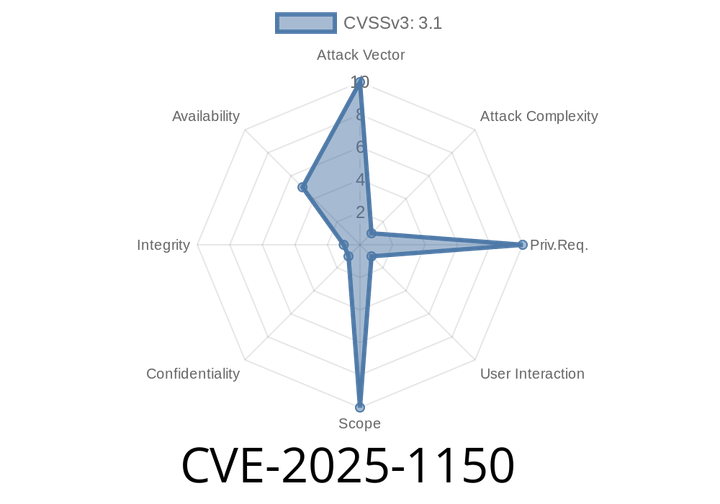

CVE-2025-1150: Memory Leak Vulnerability in bfd_malloc Found in GNU Binutils 2.43

A memory leak vulnerability (CVE-2025-1150) has recently been discovered in GNU Binutils 2.43. The vulnerability has been classified as problematic and is closely tied…