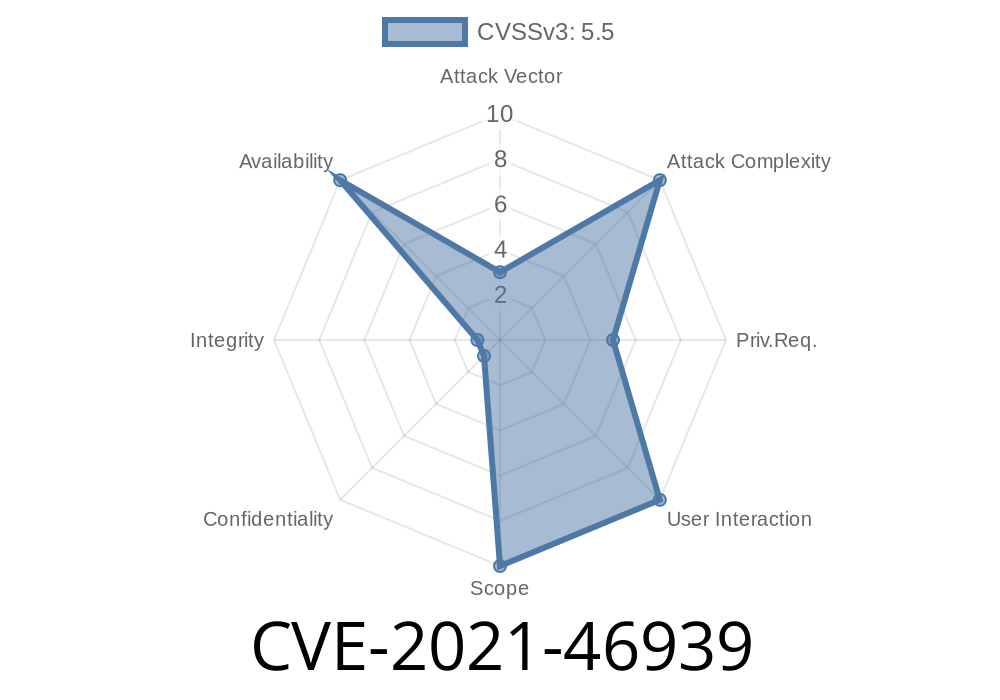

A subtle bug in the Linux kernel’s tracing infrastructure caused mysterious system hangs during suspend/resume cycles. This issue, CVE-2021-46939, was traced to the trace_clock_global() function in Linux’s tracing subsystem. In this post, I’ll explain how the hang happened, share the original references, and show the code involved. We'll also see why this bug was tricky, and how the fix made Linux more robust.

What is trace_clock_global() and Why Does it Matter?

The Linux kernel’s tracing system is used by developers and sysadmins to monitor internal kernel events for debugging and performance analysis. Events are recorded into the "ring buffer," a fast, lockless, per-CPU data structure.

The trace_clock_global() function provides timestamps for those events that stay ordered across CPUs. To do that, it records the last timestamp it handed out (prev_time) and never returns a value earlier than that, even if two CPUs read the clock concurrently. The original approach took a spinlock every time it updated prev_time.
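Below is a minimal userspace sketch of that original, always-locking design, written in C11. It is a model, not the kernel code: `struct model_clock`, `model_spin_lock()`, and `model_clock_global_locked()` are hypothetical names, a test-and-set `atomic_flag` stands in for the kernel's arch spinlock, and the caller passes in a raw clock reading (`raw_now`) in place of the per-CPU clock.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdint.h>

/* Hypothetical model of the pre-fix global trace clock state. */
struct model_clock {
    atomic_flag lock;     /* simple test-and-set spinlock */
    uint64_t prev_time;   /* last timestamp handed out */
};

/* Spins until the flag is clear; never gives up, even if the holder is us. */
static void model_spin_lock(atomic_flag *l)
{
    while (atomic_flag_test_and_set_explicit(l, memory_order_acquire))
        ;
}

static void model_spin_unlock(atomic_flag *l)
{
    atomic_flag_clear_explicit(l, memory_order_release);
}

/*
 * Pre-fix style: always take the lock, then make sure the returned
 * time is never earlier than prev_time. raw_now stands in for the
 * per-CPU clock reading.
 */
static uint64_t model_clock_global_locked(struct model_clock *c, uint64_t raw_now)
{
    uint64_t now = raw_now;

    model_spin_lock(&c->lock);
    if ((int64_t)(now - c->prev_time) < 0)
        now = c->prev_time;   /* clamp: time must not go backwards */
    c->prev_time = now;
    model_spin_unlock(&c->lock);
    return now;
}
```

For example, if one CPU's raw clock reads 100 and another CPU then reads 90, the second call is clamped to 100, so the timestamps never go backwards. The weak point is `model_spin_lock()`: it spins unconditionally, which is exactly what goes wrong if the same CPU re-enters the function while already holding the lock.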

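A fix to the ring buffer's recursion detection then changed the picture: it allowed a single level of recursion while tracing, and that exposed a nasty situation:

- CPU A enters ring_buffer_lock_reserve(), which calls trace_clock_global(), which takes the spinlock protecting prev_time.
- While the lock is held, something else gets traced (here, by the function graph tracer), re-entering ring_buffer_lock_reserve() and trace_clock_global() on the same CPU.
- The nested call tries to take the same spinlock, which its own outer frame already holds and can never release.

This sequence causes a *deadlock*: the CPU spins forever waiting on itself. In practice it showed up as a hung machine during suspend/resume testing.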

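This is visible in the kernel backtrace (truncated for clarity):

    Call Trace:
     trace_clock_global+0x91/0xa0
     __rb_reserve_next+0x237/0x460
     ring_buffer_lock_reserve+0x12a/0x3f0
     ...
    RIP: 0010:native_queued_spin_lock_slowpath+0x69/0x200

The RIP line shows where the CPU was stuck: the queued spinlock slow path, waiting for a lock it already holds.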

Code Flow (Simplified)

    ring_buffer_lock_reserve() {
      trace_clock_global() {
        arch_spin_lock() {
          // lock taken
          ...something else gets traced (function graph tracer)...
          ring_buffer_lock_reserve() {
            trace_clock_global() {
              arch_spin_lock() {
                // try to take the same lock again: DEADLOCK!
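To see why the nested call can never make progress, here is a small userspace model of the flow above, in C11 with hypothetical names (`outer_clock_call()`, `nested_acquire_would_succeed()`, and a test-and-set `atomic_flag` standing in for the arch spinlock). A faithful demonstration would simply hang, so the nested step only *probes* the lock with a trylock and reports whether a blocking acquire could ever succeed. Because the frame holding the lock is the same one that is waiting, the answer is always no.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stdbool.h>
#include <stdint.h>

static atomic_flag clock_lock = ATOMIC_FLAG_INIT;
static uint64_t prev_time;

/* Test-and-set trylock: true if this call acquired the lock. */
static bool model_trylock(void)
{
    return !atomic_flag_test_and_set_explicit(&clock_lock, memory_order_acquire);
}

static void model_unlock(void)
{
    atomic_flag_clear_explicit(&clock_lock, memory_order_release);
}

/*
 * Nested clock call made while the outer frame holds the lock. Spinning
 * here would never end, so it only probes: could a blocking acquire succeed?
 */
static bool nested_acquire_would_succeed(void)
{
    if (model_trylock()) {
        model_unlock();
        return true;
    }
    return false;   /* held by our own outer frame: spinning here = deadlock */
}

/* Outer clock call: blocking acquire, then tracing recurses inside. */
static bool outer_clock_call(uint64_t now)
{
    bool nested_ok;

    while (!model_trylock())
        ;                                       /* spin, as the pre-fix code did */
    nested_ok = nested_acquire_would_succeed(); /* recursion under the lock */
    if (now > prev_time)
        prev_time = now;
    model_unlock();
    return nested_ok;
}
```

The probe returning false is the deadlock in miniature: a spinning acquire at that point would wait for an unlock that only the waiting thread itself could perform.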

Why This Was a Problem

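In kernel tracing, *nothing* should block. The function tracers can fire in almost any kernel code path, including code that runs while the tracer itself holds a lock, so any lock taken on the tracing path can be re-entered and turn into a self-deadlock. Deadlocks in low-level tracing are especially bad because they surface far from their cause, for example as a machine that never comes back from suspend, rather than as an obvious error.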

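How the Fix Works

The fix restructures trace_clock_global() so that it never blocks:

- prev_time is read without the lock, and the returned time is clamped so it is never earlier than prev_time.
- Updating prev_time uses a trylock instead of an unconditional spinlock. If the lock cannot be taken immediately, something else is already updating prev_time, so this call skips the update and simply tries again next time.
- If two events on two different CPUs read the clock at roughly the same time, it does not really matter which one gets the earlier timestamp; what matters is that each CPU still sees a monotonic clock. That small race is accepted in exchange for never blocking.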

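Key part of the new approach (simplified C adapted from the upstream patch; interrupt handling omitted):

    last = READ_ONCE(prev_time);          /* lockless read */
    now = sched_clock_cpu(this_cpu);
    if ((s64)(now - last) < 0)
        now = last;                       /* never go backwards */

    /* Tracing can recurse: only try the lock, never spin on it */
    if (arch_spin_trylock(&trace_clock_lock)) {
        last = READ_ONCE(prev_time);      /* re-read in case it moved */
        if ((s64)(now - last) < 0)
            now = last;
        prev_time = now;
        arch_spin_unlock(&trace_clock_lock);
    }
    /* Lock busy: someone else is updating prev_time; try next time */
    return now;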

This small but critical change removes the blocking path from trace_clock_global(). Even if tracing recurses or overlaps, the clock can no longer deadlock the machine.
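A userspace model of the restructured clock makes the difference concrete. This is a C11 sketch, not the kernel code: `model_clock_global()`, `recursive_hook()`, and the `trace_hook` function pointer are hypothetical, a test-and-set `atomic_flag` stands in for the arch spinlock, and `trace_hook` is an assumed mechanism for simulating tracing that re-enters the clock while the lock is held.

```c
#include <assert.h>
#include <stdatomic.h>
#include <stddef.h>
#include <stdint.h>

static atomic_flag clock_lock = ATOMIC_FLAG_INIT;
static _Atomic uint64_t prev_time;   /* read without the lock */
static void (*trace_hook)(void);     /* hypothetical: simulates recursive tracing */

static uint64_t model_clock_global(uint64_t raw_now)
{
    uint64_t now = raw_now;
    /* Lockless read; a slightly stale value is acceptable. */
    uint64_t last = atomic_load_explicit(&prev_time, memory_order_relaxed);

    if ((int64_t)(now - last) < 0)
        now = last;                  /* never go backwards */

    /* Only *try* the lock; a caller that cannot get it skips the update. */
    if (!atomic_flag_test_and_set_explicit(&clock_lock, memory_order_acquire)) {
        if (trace_hook != NULL)
            trace_hook();            /* tracing re-enters while the lock is held */

        /* Re-read under the lock in case prev_time moved. */
        last = atomic_load_explicit(&prev_time, memory_order_relaxed);
        if ((int64_t)(now - last) < 0)
            now = last;
        atomic_store_explicit(&prev_time, now, memory_order_relaxed);
        atomic_flag_clear_explicit(&clock_lock, memory_order_release);
    }
    /* Lock busy: another context is updating prev_time; just return now. */
    return now;
}

static uint64_t nested_result;

/* Hypothetical hook: the nested clock read happens later, at raw time 120. */
static void recursive_hook(void)
{
    nested_result = model_clock_global(120);
}
```

With `trace_hook` set to `recursive_hook`, a call with raw time 100 returns 100, while the nested call fails the trylock, returns 120 without touching `prev_time`, and lets the outer call finish normally. The trade-off mirrors the one described above: a skipped update can leave `prev_time` slightly behind a timestamp that was already handed out, in exchange for a clock that can never block.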

Upstream Commit and Code Fix:

kernel/git/torvalds/linux.git - tracing: Restructure trace_clock_global() to never block

Kernel Bugzilla Thread:

https://bugzilla.kernel.org/show_bug.cgi?id=212761

CVE Listing: CVE-2021-46939

Exploit Details

This bug isn’t a classic security exploit; it’s a stability issue. A local user with enough privilege to control tracing (or a script run by such a user) who triggers recursive tracing, especially during power state changes, can freeze the system. That amounts to a denial of service, and in cloud or server settings it could disrupt workloads. The rough sequence is:

1. Enable function graph tracing.
2. Trigger events that cause recursive trace calls while entering or exiting sleep/suspend states.
3. The system may hang due to the deadlock on the trace clock spinlock.

Remote exploitation is unlikely, since triggering the hang requires local access with the privileges needed to control tracing. Still, it underscores how even small locking bugs can have a big impact in the kernel.

Conclusion

CVE-2021-46939 is a textbook case of how recursion in kernel tracing can lead to system lockups: here, a trace clock lock that could deadlock the kernel during suspend/resume sequences. The fix was smart and pragmatic: use a trylock and accept small timestamp races in favor of stability.

If you run custom kernels or enable advanced tracing, make sure your kernel includes the fix above, either by upgrading to a version that contains it or by backporting it. For more details, consult the original Bugzilla entry.

Timeline

Published on: 02/27/2024 19:04:05 UTC

Last modified on: 04/10/2024 19:49:03 UTC