If you work with NVMe over TCP on Linux, or run storage systems that use both nvmet-tcp (the target/server-side driver) and nvme-tcp (the initiator/client-side driver), this deep-dive is for you. In early 2021, a locking bug in the kernel could cause deadlocks and instability when these modules interacted, which meant a crucial part of your fast storage stack could simply freeze. Below, we’ll break down CVE-2021-47041 in the Linux kernel, explain the cause of the bug, show you what the resulting kernel warning and trace look like, and walk through the patch that fixed it, all in plain English.

What is CVE-2021-47041?

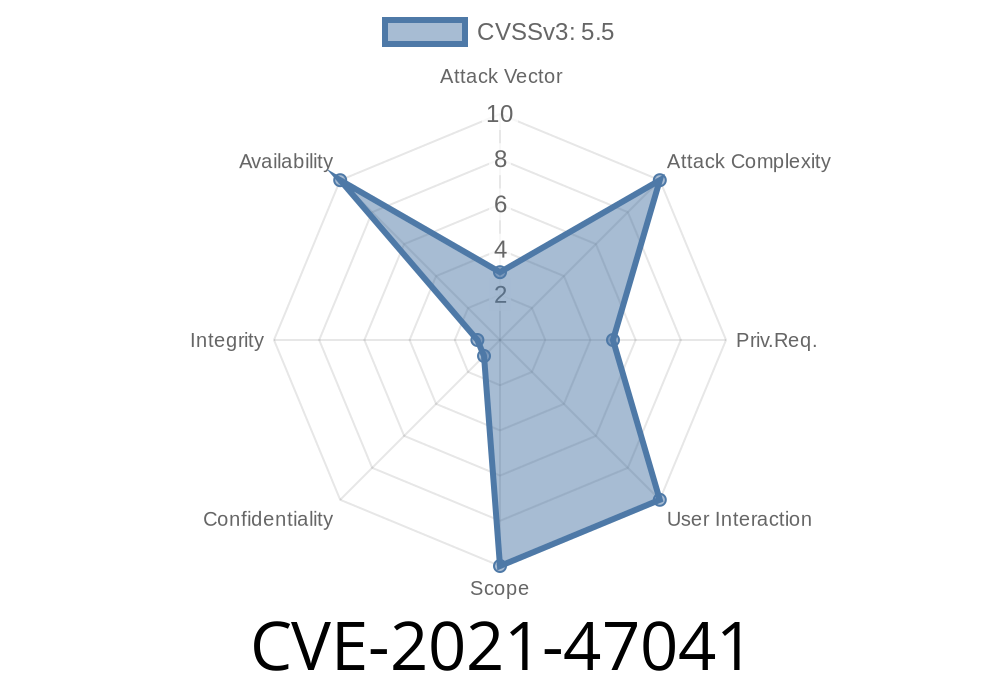

CVE-2021-47041 describes a bug in the Linux kernel's nvmet-tcp driver, which provides NVMe storage services over TCP. The bug was incorrect locking inside a network socket callback, which could cause a deadlock: under certain conditions, neither code path could make progress and the system hung.

Where did the bug live?

The issue was in the state_change callback function for nvmet-tcp, which reacts when the kernel network stack signals that a TCP connection changes state (for example, when a connection closes). Here's a simplified version of what happened:

- Instead of acquiring a read lock (which allows multiple holders), the code acquired a write lock (which is exclusive).

- Since the callback itself was not actually changing the connection state, a write lock was too strict.

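- If you run both nvme-tcp and nvmet-tcp on the same machine (common during dev/testing), their state_change callbacks become causally linked: a connection state change on one side triggers the callback on the other. nvmet-tcp took the write lock from softirq context (the TCP receive path), while nvme-tcp takes a lock of the same class for reading in process context with softirqs enabled. If a softirq that wants the write lock interrupts a CPU that already holds the read lock, the writer waits forever for a reader that cannot resume until the softirq finishes. The kernel's lock validator (lockdep) flags exactly this pattern.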

Let's look at the relevant spot in the source code before the fix:

```c
// Bad: take the write (exclusive) side of the lock in a state_change callback
write_lock_bh(&sk->sk_callback_lock);
// ... callback body: reads the socket's state, modifies nothing ...
write_unlock_bh(&sk->sk_callback_lock);
```

What the callback should have done is take the read (shared) side:

```c
// Correct: take the read (shared) side of the lock in a state_change callback
read_lock_bh(&sk->sk_callback_lock);
// ... callback body ...
read_unlock_bh(&sk->sk_callback_lock);
```

What Was the Impact?

This bug was most likely to hit dev/test setups, or production machines with both the initiator and target stacks loaded. Affected systems could hang outright; on kernels built with lockdep, the problem was also reported as a warning, even on runs that did not hang.

The classic indicator was something like this in dmesg or your kernel log:

```
WARNING: inconsistent lock state
5.12.0-rc3 #1 Tainted: G I
inconsistent {IN-SOFTIRQ-W} -> {SOFTIRQ-ON-R} usage.
[...]
 *** DEADLOCK ***
[...]
```

This is followed by a very long stack trace that references nvme_tcp_state_change or nvmet_tcp_state_change.

Here's a trimmed sample of what you’d see if this bug hit (the full trace is in the original report):

```
WARNING: inconsistent lock state
5.12.0-rc3 #1 Tainted: G I
inconsistent {IN-SOFTIRQ-W} -> {SOFTIRQ-ON-R} usage.
nvme/1324 [...] takes:
ffff888363151000 (clock-AF_INET){++-?}-{2:2}, at: nvme_tcp_state_change+0x21/0x150 [nvme_tcp]
{IN-SOFTIRQ-W} state was registered at:
  __lock_acquire+0x79b/0x18d0
  ... (more frames)
 *** DEADLOCK ***
stack backtrace:
CPU: 26 PID: 1324 Comm: nvme Tainted: G I 5.12.0-rc3 #1
Call Trace:
 dump_stack+0x93/0xc2
 mark_lock_irq.cold+0x2c/0xb3
 ...
 nvme_tcp_state_change+0x21/0x150 [nvme_tcp]
```
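The key line in the sample trace is "inconsistent {IN-SOFTIRQ-W} -> {SOFTIRQ-ON-R} usage." Lockdep records, per lock class, how a lock has been taken: for write or read, inside a softirq or with softirqs enabled. It complains when the same class is used both inside a softirq and with softirqs enabled and at least one of those uses is a write; two read-side uses do not conflict. The toy model below encodes only that one rule. It is a simplification written for illustration, not lockdep's code, and every name in it is hypothetical.

```c
#include <stdio.h>

/* Toy subset of lockdep's per-class usage bits (softirq only). */
enum usage {
    IN_SOFTIRQ_W = 1 << 0, /* taken for write inside a softirq */
    IN_SOFTIRQ_R = 1 << 1, /* taken for read inside a softirq */
    SOFTIRQ_ON_W = 1 << 2, /* taken for write with softirqs enabled */
    SOFTIRQ_ON_R = 1 << 3, /* taken for read with softirqs enabled */
};

struct lock_class_model {
    unsigned usage; /* OR of every usage bit seen so far */
};

/* Earlier usages that clash with a new one: opposite context, and at
 * least one side is a write. Read-vs-read never clashes. */
static unsigned conflicts_with(enum usage new_bit)
{
    switch (new_bit) {
    case IN_SOFTIRQ_W: return SOFTIRQ_ON_W | SOFTIRQ_ON_R;
    case IN_SOFTIRQ_R: return SOFTIRQ_ON_W;
    case SOFTIRQ_ON_W: return IN_SOFTIRQ_W | IN_SOFTIRQ_R;
    case SOFTIRQ_ON_R: return IN_SOFTIRQ_W;
    }
    return 0;
}

/* Record a usage; return the clashing earlier bits (0 if consistent). */
static unsigned record_usage(struct lock_class_model *c, enum usage new_bit)
{
    unsigned clash = c->usage & conflicts_with(new_bit);
    c->usage |= (unsigned)new_bit;
    return clash;
}

static const char *usage_name(unsigned bit)
{
    switch (bit) {
    case IN_SOFTIRQ_W: return "IN-SOFTIRQ-W";
    case IN_SOFTIRQ_R: return "IN-SOFTIRQ-R";
    case SOFTIRQ_ON_W: return "SOFTIRQ-ON-W";
    case SOFTIRQ_ON_R: return "SOFTIRQ-ON-R";
    default:           return "?";
    }
}

/* Print a lockdep-style line naming the first clashing earlier usage. */
static void report(unsigned old_bits, enum usage new_bit)
{
    unsigned first = old_bits & (~old_bits + 1u); /* lowest set bit */
    printf("inconsistent {%s} -> {%s} usage.\n",
           usage_name(first), usage_name((unsigned)new_bit));
}
```

Replaying the pre-fix pattern (IN_SOFTIRQ_W from the target callback, then SOFTIRQ_ON_R from the host callback) makes record_usage() return IN_SOFTIRQ_W, and report(IN_SOFTIRQ_W, SOFTIRQ_ON_R) prints the same headline as the trace. Replaying the fixed pattern (IN_SOFTIRQ_R, then SOFTIRQ_ON_R) returns 0.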

Why was it Dangerous?

- Denial of Service (system hang): once the deadlock hits, the stuck CPU stops making progress and I/O on the affected connections stops.

- Potential for Data Loss: the hang is in the storage path, so a forced reboot to recover can lose writes that had not yet reached stable storage.

- Hard to Debug: it only showed up under specific conditions, such as test suites or both drivers loaded at once.

## The Patch / Fix

The fix (first merged in Linux v5.12-rc4) was simple and elegant:

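- Replace the write_lock_bh()/write_unlock_bh() pair with read_lock_bh()/read_unlock_bh() in the nvmet_tcp_state_change() function.

Here’s the patch in code:

```diff
-	write_lock_bh(&sk->sk_callback_lock);
+	read_lock_bh(&sk->sk_callback_lock);
 	/* ... existing callback code ... */
-	write_unlock_bh(&sk->sk_callback_lock);
+	read_unlock_bh(&sk->sk_callback_lock);
```

That’s it! Because the callback never modifies anything the lock protects, the shared read side is enough. Readers of an rwlock do not exclude each other, so the callback running in softirq context no longer conflicts with a reader holding a lock of the same class in process context, and the deadlock scenario goes away.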
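Why does the read side avoid the hang? The scenario lockdep warns about is a single CPU that holds the lock and is then interrupted by a softirq that wants the same lock. The toy model below, a hypothetical sketch rather than kernel code, plays that out: process context on one CPU holds the read side (as the host-side callback does), then a softirq on the same CPU asks for the lock in either mode. Lockdep reasons about lock classes, so it flags the pattern even if, in a given run, the two callbacks happen to touch different sockets.

```c
#include <stdbool.h>

enum lock_mode { MODE_READ, MODE_WRITE };

/* Toy rwlock state: how many readers hold it and whether a writer does. */
struct toy_rwlock {
    int readers;
    bool writer;
};

/* Readers only need "no writer"; a writer needs the lock completely free. */
static bool can_acquire(const struct toy_rwlock *l, enum lock_mode m)
{
    if (m == MODE_READ)
        return !l->writer;
    return !l->writer && l->readers == 0;
}

/* One CPU: process context takes the read side with softirqs enabled,
 * then a softirq on the SAME CPU runs the target-side callback and asks
 * for `softirq_mode`. The interrupted reader cannot resume until the
 * softirq finishes, so a request that cannot be granted immediately
 * would spin forever: a deadlock. */
static bool softirq_would_deadlock(enum lock_mode softirq_mode)
{
    struct toy_rwlock lock = { .readers = 0, .writer = false };

    lock.readers++; /* process-context read lock */
    return !can_acquire(&lock, softirq_mode);
}
```

softirq_would_deadlock(MODE_WRITE) returns true, matching the pre-fix write lock, while softirq_would_deadlock(MODE_READ) returns false, matching the fixed read lock.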

Patch commit: nvmet-tcp: fix incorrect locking in state_change sk callback

References

- CVE database entry: CVE-2021-47041

- Linux kernel commit (FIX): nvmet-tcp: fix incorrect locking in state_change sk callback

- Original report (lists.kernel.org): PATCH and discussion thread

- blktests (where it was caught): blktests repository

Exploitation Details

Is there a remote exploit?

No.

It is a local denial-of-service condition, triggered by specific operations such as test suites (like blktests) on a host where a sysadmin runs both nvme-tcp and nvmet-tcp.

Can an attacker exploit it remotely?

Not directly. Loading the drivers requires root, but in unusual setups where untrusted users can make a host that runs both drivers set up and tear down connections on both ends (think: a multi-tenant cloud SAN), triggering the hang might be possible.

How can I test for it?

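If you run both nvmet-tcp and nvme-tcp and run blktests, or aggressively connect and disconnect NVMe targets and hosts, the bug could trigger. On old (unpatched) kernels, watch your logs for the "WARNING: inconsistent lock state" message shown above. Note that this warning only appears on kernels built with lockdep (CONFIG_PROVE_LOCKING=y), which is common for debug builds but not for typical distribution kernels. Either way, update your kernel!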
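If you collect kernel logs from test machines, a simple string check can flag this signature. The helper below is a hypothetical sketch: it only looks for three markers taken from the sample trace above (the lockdep headline, the IN-SOFTIRQ-W/SOFTIRQ-ON-R usage line, and one of the two state_change callbacks), so a match is a hint to investigate rather than proof, and it finds nothing on kernels without lockdep.

```c
#include <stdbool.h>
#include <string.h>

/* Hypothetical helper: returns true if `log` (for example, the captured
 * output of dmesg) contains all three markers of this lockdep report. */
static bool log_matches_cve_2021_47041(const char *log)
{
    if (log == NULL)
        return false;

    bool headline = strstr(log, "WARNING: inconsistent lock state") != NULL;
    bool usage = strstr(log,
        "inconsistent {IN-SOFTIRQ-W} -> {SOFTIRQ-ON-R} usage.") != NULL;
    /* "nvmet_tcp_state_change" does not contain "nvme_tcp_state_change",
     * so both callback names are checked separately. */
    bool callback = strstr(log, "nvme_tcp_state_change") != NULL ||
                    strstr(log, "nvmet_tcp_state_change") != NULL;

    return headline && usage && callback;
}
```

Requiring all three markers keeps unrelated "inconsistent lock state" reports from other subsystems from being counted as this bug.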

Proof-of-Concept (Omits actual exploit, for safety)

```sh
# (On a system with both drivers available)

# Load both drivers
modprobe nvmet-tcp
modprobe nvme-tcp

# Set up a loopback NVMe target and connect to it,
# then run blktests or rapidly add/remove sessions

# Watch dmesg for hangs or lockdep warnings
```
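Before running such a test, you may want to confirm that both drivers are actually loaded. A minimal sketch, assuming both drivers were built as modules: /proc/modules lists one module per line with the name in the first field, and module names use underscores (nvme-tcp shows up as nvme_tcp). Built-in drivers do not appear there, so a negative result is not conclusive. The function names are hypothetical.

```c
#include <stdbool.h>
#include <stdio.h>
#include <string.h>

/* True if some line of `text` (in /proc/modules format) has `name` as
 * its first field, i.e. starts with `name` followed by a space. */
static bool module_listed(const char *text, const char *name)
{
    size_t n = strlen(name);

    for (const char *line = text; line != NULL && *line != '\0';) {
        if (strncmp(line, name, n) == 0 && line[n] == ' ')
            return true;
        line = strchr(line, '\n');
        if (line != NULL)
            line++; /* skip the newline to reach the next line */
    }
    return false;
}

/* Are both the host (nvme_tcp) and target (nvmet_tcp) modules listed? */
static bool both_tcp_drivers_loaded(const char *proc_modules_text)
{
    return module_listed(proc_modules_text, "nvme_tcp") &&
           module_listed(proc_modules_text, "nvmet_tcp");
}

/* Copy up to cap-1 bytes of /proc/modules into buf (NUL-terminated);
 * longer listings are truncated. Returns false if it cannot be read. */
static bool read_proc_modules(char *buf, size_t cap)
{
    if (cap == 0)
        return false;
    FILE *f = fopen("/proc/modules", "r");
    if (f == NULL)
        return false;
    size_t len = fread(buf, 1, cap - 1, f);
    buf[len] = '\0';
    fclose(f);
    return true;
}
```

For example, read /proc/modules into a generously sized buffer with read_proc_modules() and pass it to both_tcp_drivers_loaded().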

Conclusion

CVE-2021-47041 is an example of how even small mistakes in kernel code, such as using a write lock where a read lock suffices, can have major effects. Always keep up to date with kernel fixes if you handle storage! For production, run Linux kernel 5.12-rc4 or later, or a distribution kernel that carries the backported fix.

If you’re developing or testing NVMe over TCP, make sure your kernel is patched!

*Have questions or want to discuss this bug? Join the conversation on the Linux Kernel Mailing List!*

Author:

YourLinuxBuddy (for exclusive, simple English technical explainers)

Timeline

Published on: 02/28/2024 09:15:40 UTC

Last modified on: 12/06/2024 18:41:12 UTC