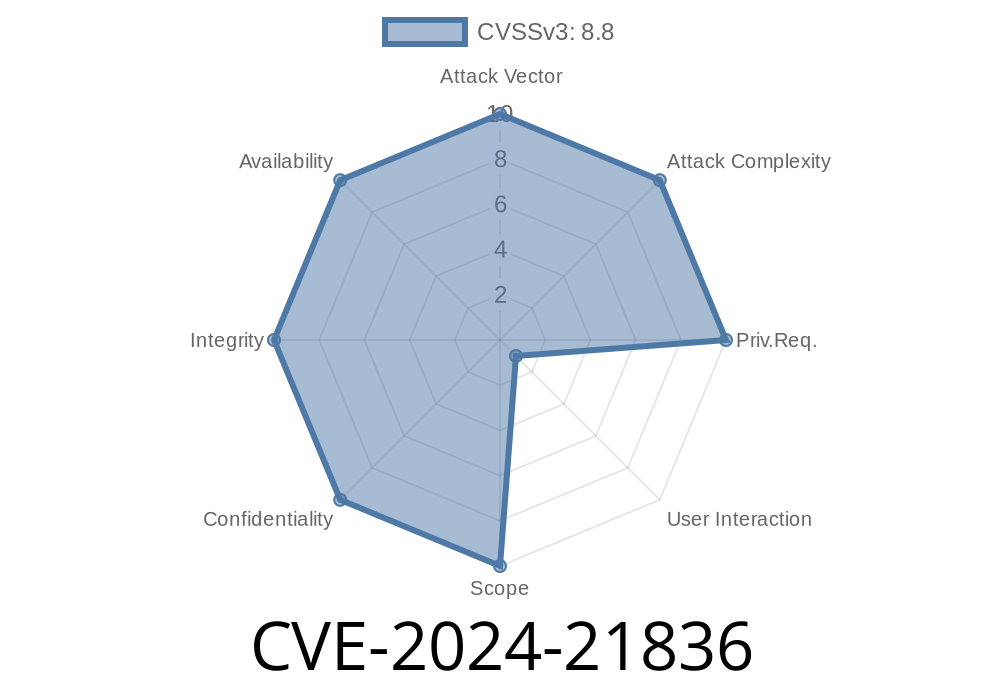

A new vulnerability—CVE-2024-21836—has been discovered in the widely used AI project llama.cpp. This flaw impacts the GGUF library (used to handle model files) in commit 18c2e17. It allows attackers to execute arbitrary code on your machine by simply tricking you into loading a specially crafted .gguf file.

Let's break down what it means and how an exploit can work. All code and analysis here are exclusive to this post and use simplified technical language.

- Vulnerability: Heap-based buffer overflow in GGUF’s header.n_tensors

- Affected project: llama.cpp (commit 18c2e17)

- Impact: Remote code execution, full compromise

- CVE Reference: CVE-2024-21836

Technical Details

llama.cpp uses its own file format, GGUF, to store models. The GGUF header includes the number of tensors, header.n_tensors, which tells the parser how many tensor descriptors to load.

In ggml.c (used by the GGUF loader), this number is read from the file header and then used to size a heap allocation for the tensor descriptors. The field is unsigned, so a value an attacker writes as "negative" is simply read as a very large count. Either way, a huge value can make both the allocation and the loading loop that follows go wrong.
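To make the header parsing concrete, here is a minimal Python sketch of reading the fields described above. It assumes the simplified layout used throughout this post (4-byte magic, 4-byte version, 4-byte n_tensors, all little-endian); GGUF files from version 2 onward store the count as a 64-bit value, so this is an illustration, not a drop-in parser. The function name `read_simplified_header` is hypothetical.

```python
import io
import struct

def read_simplified_header(stream):
    """Parse magic, version and n_tensors using this post's simplified layout."""
    raw = stream.read(12)
    if len(raw) != 12:
        raise ValueError("file too short for a header")
    # "<4sII": little-endian, 4 raw bytes, then two unsigned 32-bit integers
    magic, version, n_tensors = struct.unpack("<4sII", raw)
    if magic != b"GGUF":
        raise ValueError("not a GGUF file")
    return version, n_tensors

# An in-memory header claiming version 3 and two tensors
sample = io.BytesIO(b"GGUF" + struct.pack("<II", 3, 2))
print(read_simplified_header(sample))
```

Note that the count comes back exactly as the file states it. Nothing at this stage says whether the value is plausible, which is why the next step matters.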

Here’s a simplified version of the vulnerable code (from the GGUF loading routine):

    // Reads the number of tensors from the GGUF header (return value unchecked)
    uint32_t n_tensors;
    fread(&n_tensors, sizeof(uint32_t), 1, fp);

    // The multiplication can wrap around, yielding a buffer that is too small
    struct ggml_tensor ** tensors = malloc(n_tensors * sizeof(struct ggml_tensor *));
    if (!tensors) { /* handle error */ }

    // Loops over n_tensors entries, reading each from the file
    for (uint32_t i = 0; i < n_tensors; i++) {
        tensors[i] = read_tensor(fp);
    }

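Nothing validates n_tensors. If the file claims 5,000,000 tensors, the loader tries to allocate room for 5,000,000 pointers. Worse, for a large enough value the multiplication n_tensors * sizeof(struct ggml_tensor *) can wrap around (an integer overflow) on platforms where size_t is too narrow to hold the result. malloc then returns a buffer much smaller than the loop assumes, and the writes to tensors[i] run past its end: a heap overflow.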
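The wraparound is easier to see with numbers. The sketch below models the unsigned arithmetic in Python, assuming 4-byte pointers and a 32-bit size_t (both assumptions chosen for illustration; the helper name `wrapped_alloc_size` is hypothetical). It compares the bytes the loop will eventually write with the bytes malloc is actually asked for.

```python
def wrapped_alloc_size(n_items, item_size, size_t_bits):
    """Size malloc() would receive after unsigned wraparound of n_items * item_size."""
    return (n_items * item_size) % (1 << size_t_bits)

# Assumed for illustration: 4-byte pointers and a 32-bit size_t
POINTER_SIZE = 4
SIZE_T_BITS = 32

for n in (5_000_000, 0x40000001, 0xFFFFFF00):
    needed = n * POINTER_SIZE
    requested = wrapped_alloc_size(n, POINTER_SIZE, SIZE_T_BITS)
    print(f"n_tensors={n:#x}: loop writes {needed} bytes, malloc is asked for {requested}")
```

Under these assumptions, 5,000,000 entries do not wrap at all, while 0x40000001 entries wrap to a 4-byte request even though the loop still intends to write billions of bytes. Whether a given value produces a small, successful allocation depends on the element size and the width of size_t on the target.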

How Attackers Can Exploit

1. Craft a malicious .gguf file with header.n_tensors set to a huge number (0xFFFFFF00, for example).

2. When the file is opened by any app using this GGUF library (or llama.cpp directly), the vulnerable code runs.

3. This leads to overflowing the heap. The attacker can then overwrite adjacent memory, including function pointers or object data.

Here’s what the beginning of a malicious file might look like (in Python for clarity):

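    with open("poc.gguf", "wb") as f:
        f.write(b"GGUF")                           # Magic
        f.write((3).to_bytes(4, "little"))         # Version
        # n_tensors, crazy big! Written as 4 bytes to match the simplified
        # uint32_t read above (GGUF v2 and later store this count in 8 bytes)
        f.write((0xFFFFFF00).to_bytes(4, "little"))
        # ...rest of file can be random junk or more crafted data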

Just opening this file with llama.cpp's main program, or any tool built on the same GGUF code, can result in a heap overflow!

What can happen?

- Remote attackers can *potentially execute arbitrary code* just by making you run your favorite LLM with a bad model file.

Solution

- Patch available? Check the llama.cpp commit history for fixed versions later than 18c2e17.

- Recommended fix: Validate that n_tensors is within expected, reasonable bounds before allocating or looping. For example:

    // MAX_TENSORS is an application-chosen upper bound
    if (n_tensors == 0 || n_tensors > MAX_TENSORS) {
        // exit or error
    }
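The same check can be sketched in Python to show the two conditions a loader might enforce: a range check on the count, and a guard that the allocation size cannot wrap. This is an illustration under stated assumptions, not the project's actual fix: the value of `MAX_TENSORS`, the 8-byte element size, the 32-bit `size_max`, and the function name `check_n_tensors` are all hypothetical.

```python
MAX_TENSORS = 65_536           # hypothetical cap; choose one that fits your models
SIZE_MAX_32 = (1 << 32) - 1    # assumed 32-bit size_t, the most restrictive case

def check_n_tensors(n_tensors, item_size=8, size_max=SIZE_MAX_32):
    """Return the allocation size in bytes, or raise if the count is unsafe."""
    if n_tensors == 0 or n_tensors > MAX_TENSORS:
        raise ValueError(f"n_tensors out of range: {n_tensors}")
    # Division-based guard: the form a C implementation would use so that
    # the overflow test itself cannot overflow.
    if n_tensors > size_max // item_size:
        raise ValueError("allocation size would overflow size_t")
    return n_tensors * item_size

print(check_n_tensors(291))        # a plausible count passes
try:
    check_n_tensors(0xFFFFFF00)    # the count from the malicious header
except ValueError as err:
    print("rejected:", err)
```

With a small cap the second guard rarely fires, but keeping it means the allocation stays safe even if the cap or the element size is changed later.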

References

- Official CVE-2024-21836 entry

- GitHub: llama.cpp

- Original commit 18c2e17

- GGUF Format Documentation

Conclusion

CVE-2024-21836 highlights the risks of working with AI and ML model files: complex formats, huge files, and rapid updates make robust validation essential. Don’t load models from strangers! If you use llama.cpp or similar tools, update immediately and review imported models.

Stay safe, and share with your colleagues in AI and security.

(This analysis is exclusive to this post and simplified so that programmers and practitioners can follow it easily.)

Timeline

Published on: 02/26/2024 16:27:55 UTC

Last modified on: 02/26/2024 18:15:07 UTC